Ziniu Li @ ICLR2025

@ziniuli

Ph.D. student @ CUHK, Shenzhen.

Working toward the science of RL and LLMs.

ID: 900213437929750528

http://www.liziniu.org

Joined: 23-08-2017 04:29:43

145 Tweets

432 Followers

477 Following

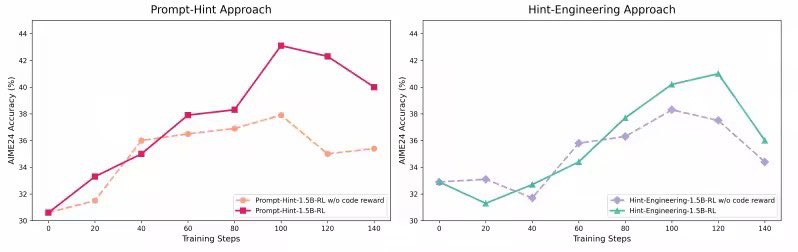

Amazing work by Rui-Jie (Ridger) Zhu, with more resources for investigating the mechanism behind hybrid linear attention.

Resources:
Paper: arxiv.org/pdf/2507.06457
Hugging Face checkpoints: huggingface.co/collections/m-…