Zijun Wu

@zijunwu88

PhD student @AmiiThinks @ualberta / previous AS @AWS

ID: 1708562995004182528

https://khalilbalaree.github.io/ 01-10-2023 19:22:31

23 Tweets

354 Followers

619 Following

Looking forward to presenting our #ICML paper advocating multi-token prediction and clarifying what it really means to say "next-token prediction cannot do what humans do", a claim that is often argued poorly. Gregor Bachmann and I just updated the camera-ready version on arXiv.

Wrote about extrinsic hallucinations during the July 4th break. lilianweng.github.io/posts/2024-07-… Here is what ChatGPT suggested as a fun tweet for the blog: 🚀 Dive into the wild world of AI hallucinations! 🤖 Discover how LLMs can conjure up some seriously creative (and sometimes

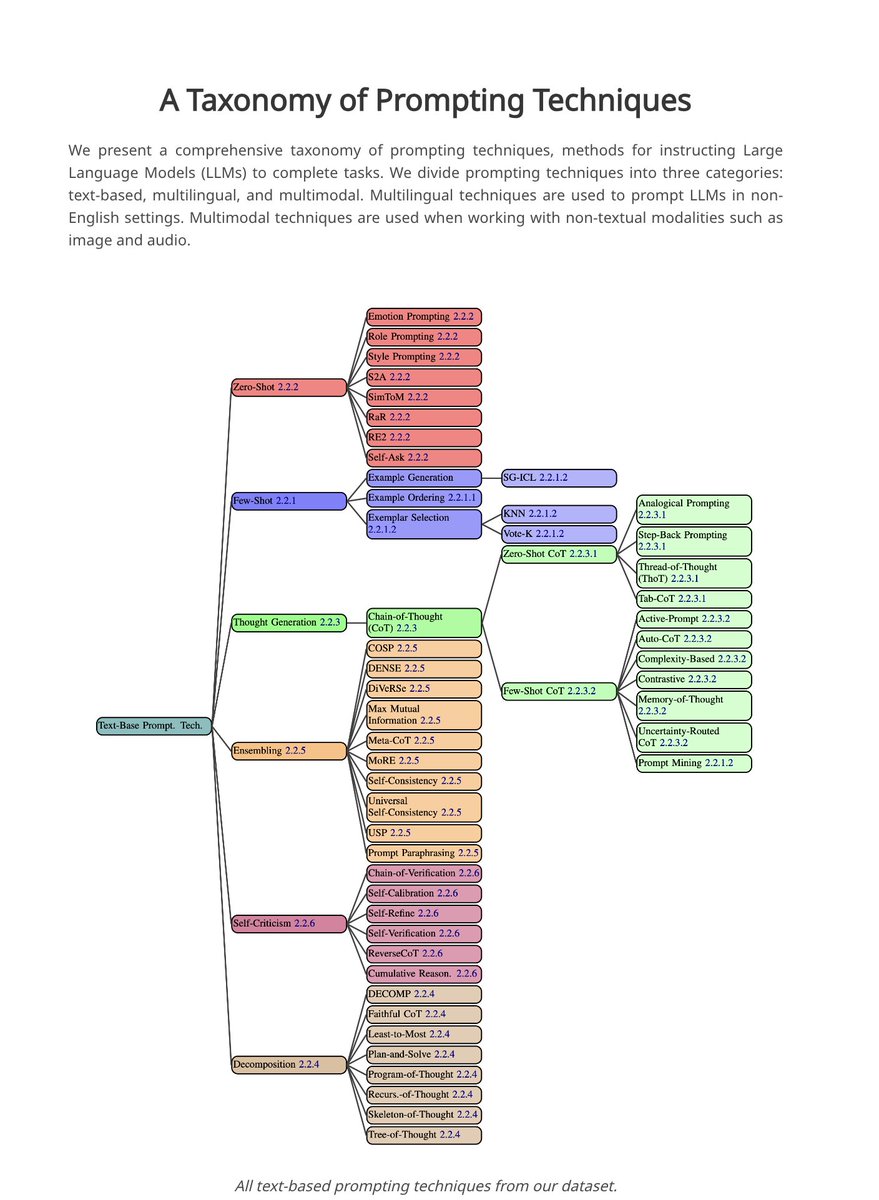

Replying to Alexander | AI Operations and jack morris: Thanks for mentioning our work! 🙌 It offers a fresh perspective on prompt transferability in the non-discrete setting. We hope it can inspire more research in this direction.