Zifeng Wang

@zifengwang315

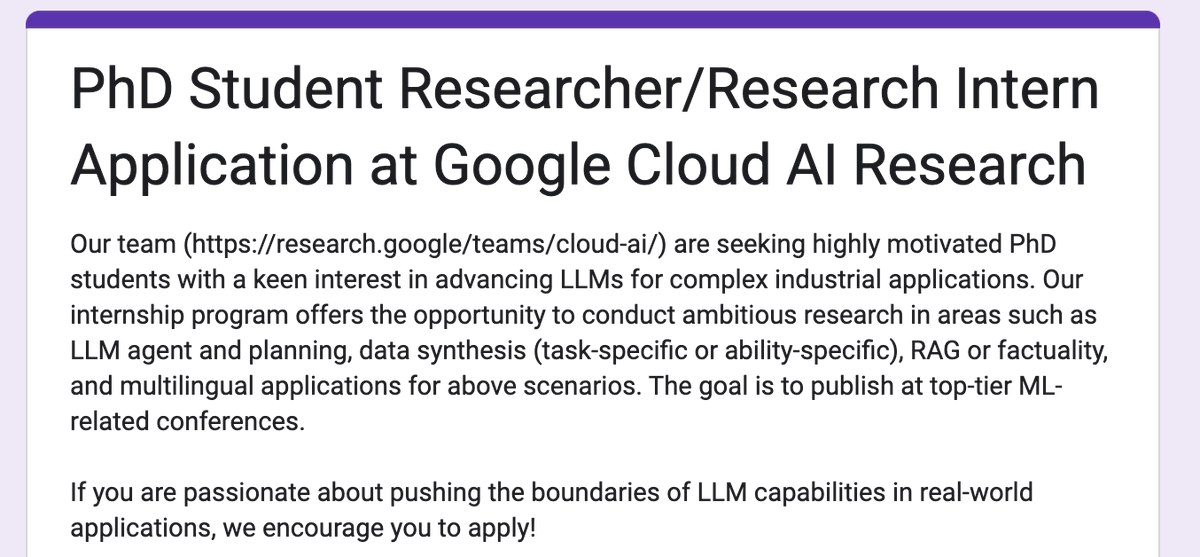

Research Scientist @Google, PhD in Machine Learning @Northeastern. Large Language Models, Continual learning, Data & Parameter-efficient learning.

ID: 1191572397826199553

http://kingspencer.github.io 05-11-2019 04:26:18

48 Tweets

690 Followers

539 Following

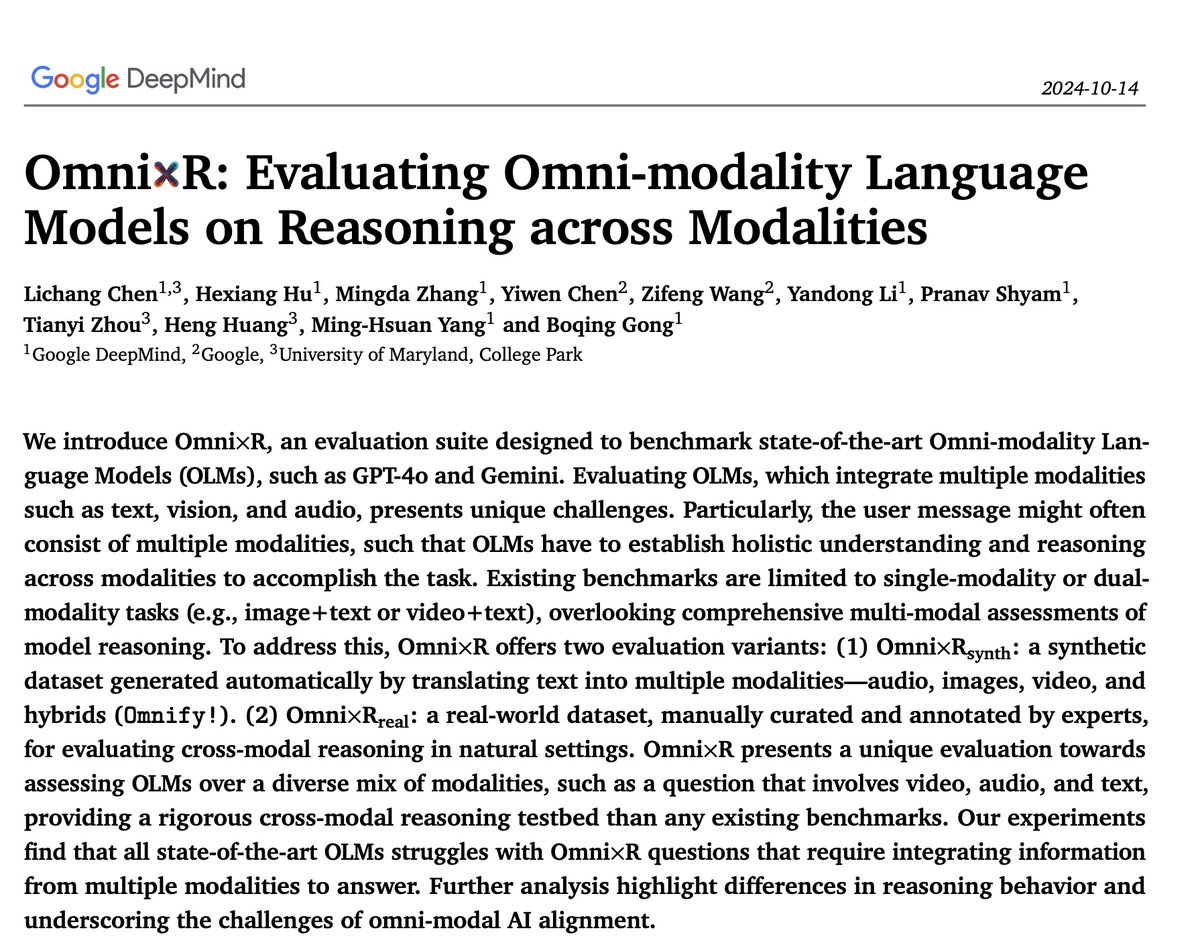

🚨 Excited to share our latest work from Google DeepMind: OmnixR, an evaluation suite for assessing the reasoning of Omni-Modality Language Models (OLMs) across modalities. We observe significant reasoning performance drops for all SoTA OLMs on other modalities compared to the

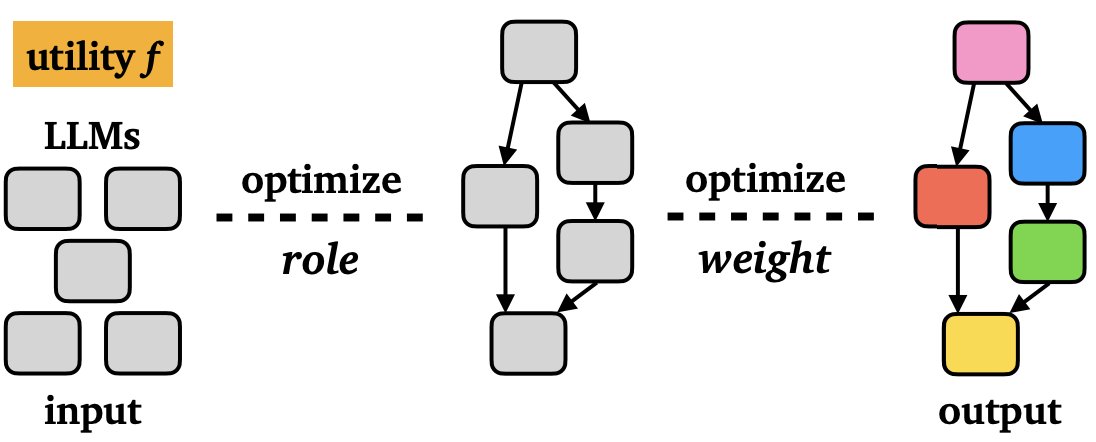

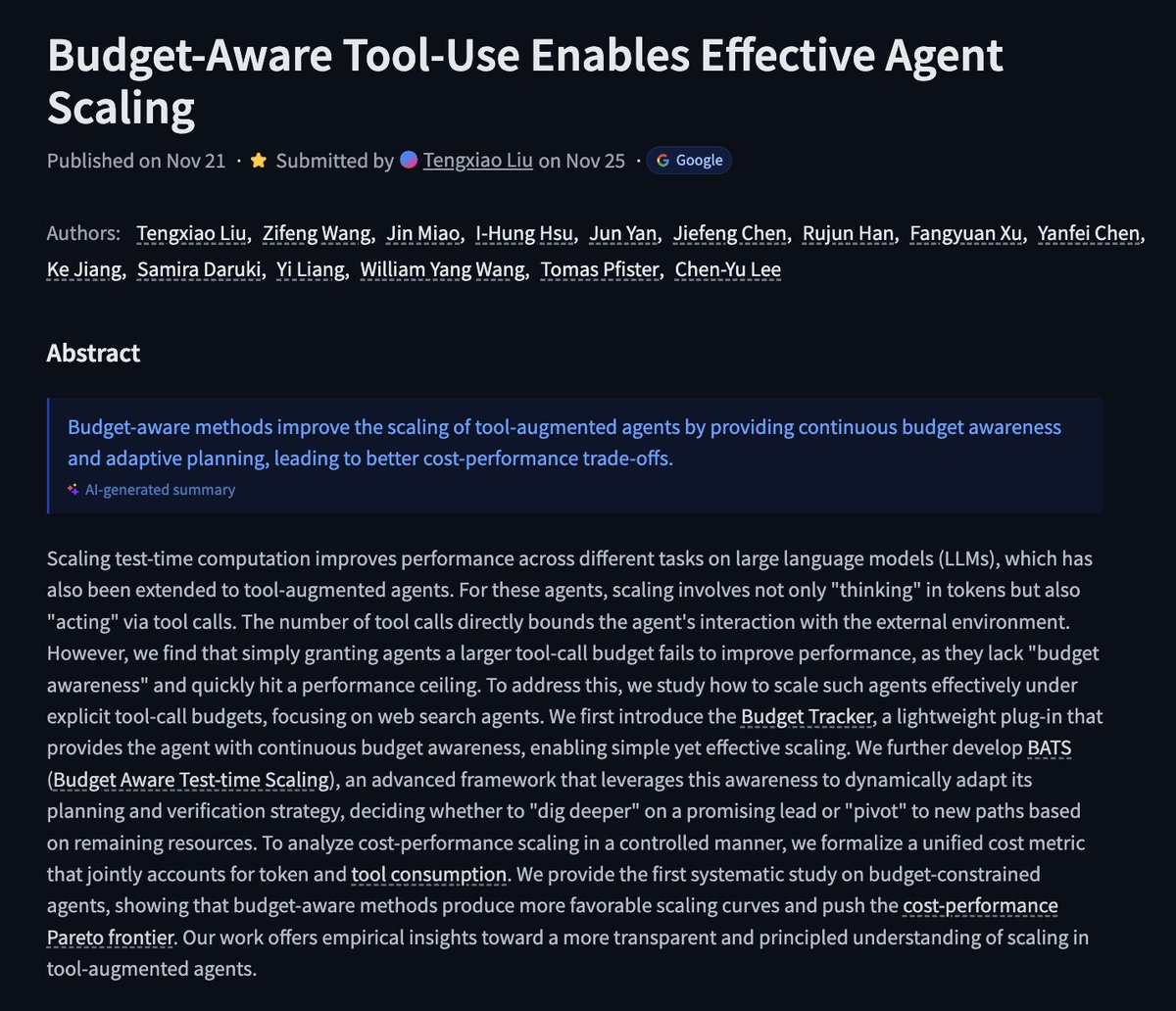

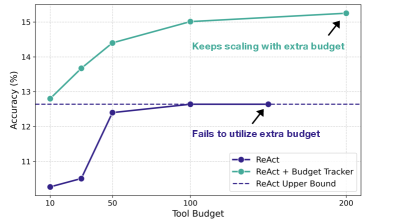

New from University of Washington and Google DeepMind: Model Swarms, a collaborative search algorithm that adapts LLM experts to a single task, multi-task domains, and reward models via swarm intelligence. Talk to the team, Zifeng Wang, Chen-Yu Lee, Yejin Choi, and tsvetshop, here! alphaxiv.org/abs/2410.11163…