Zhuohan Li

@zhuohan123

👨🏻‍💻 cs phd @ 🌁 uc berkeley |

building @vllm_project |

machine learning systems |

the real agi is the friends we made along the way

ID: 243517888

https://zhuohan.li

27-01-2011 06:29:13

187 Tweets

5.5K Followers

797 Following

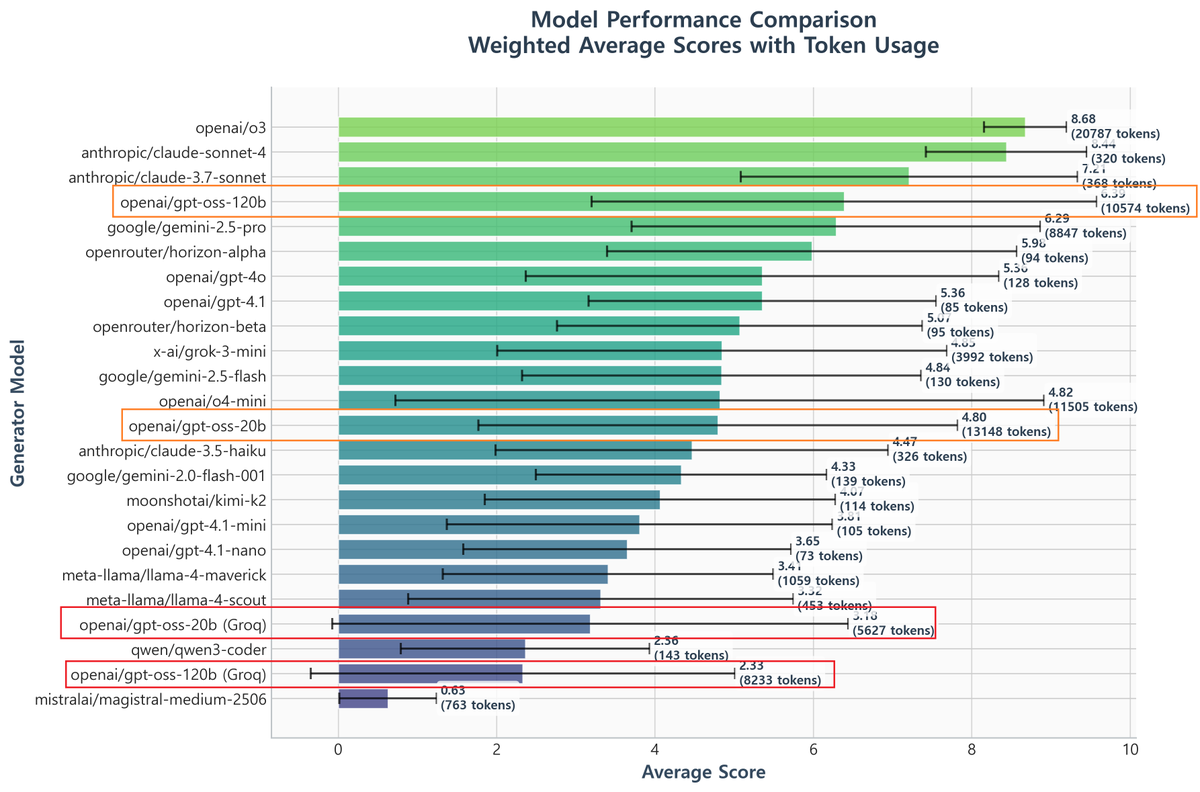

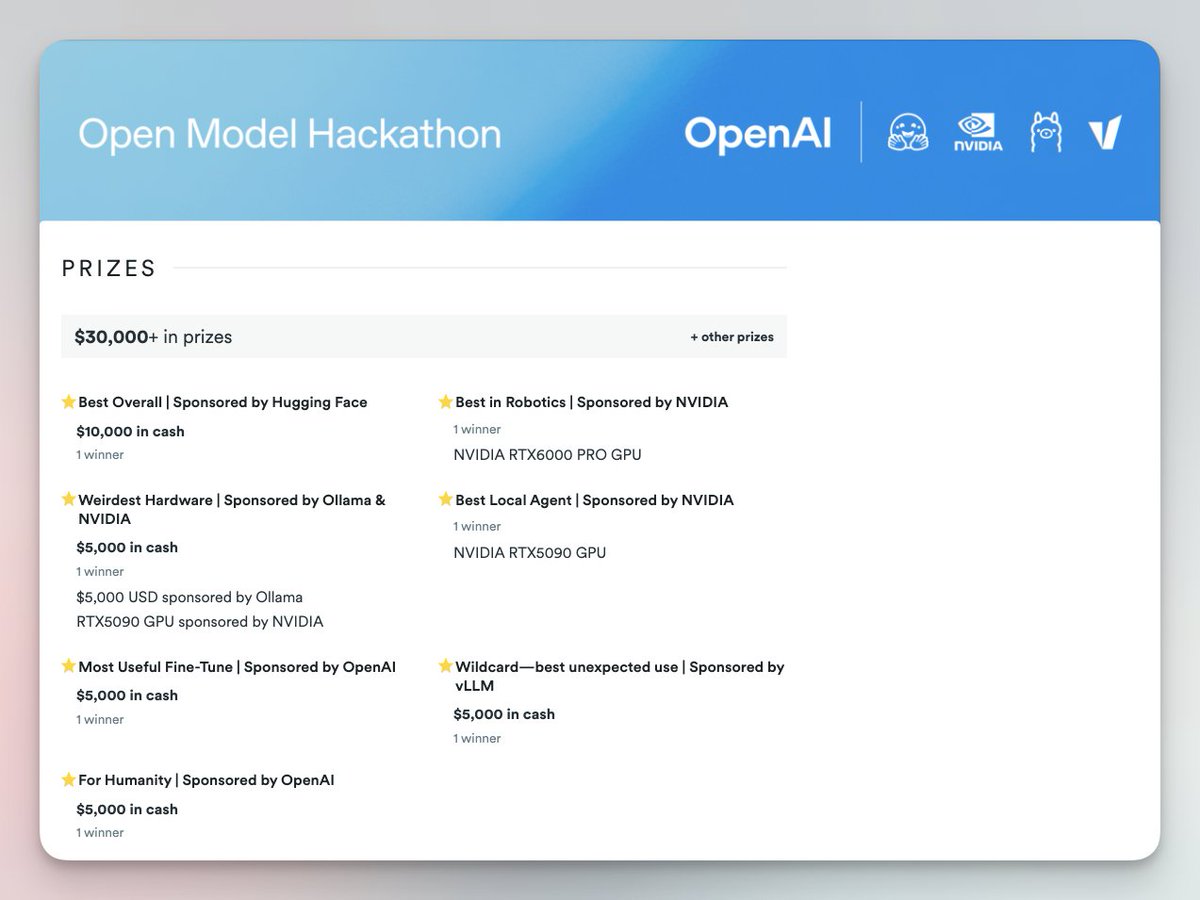

Lots of conflicting takes about gpt-oss (yay open-source in the spotlight)! We’re powering the official @openai demo gpt-oss.com with HF inference providers thanks to Fireworks AI, Cerebras, Groq Inc and Together AI so we have a front-row seat of

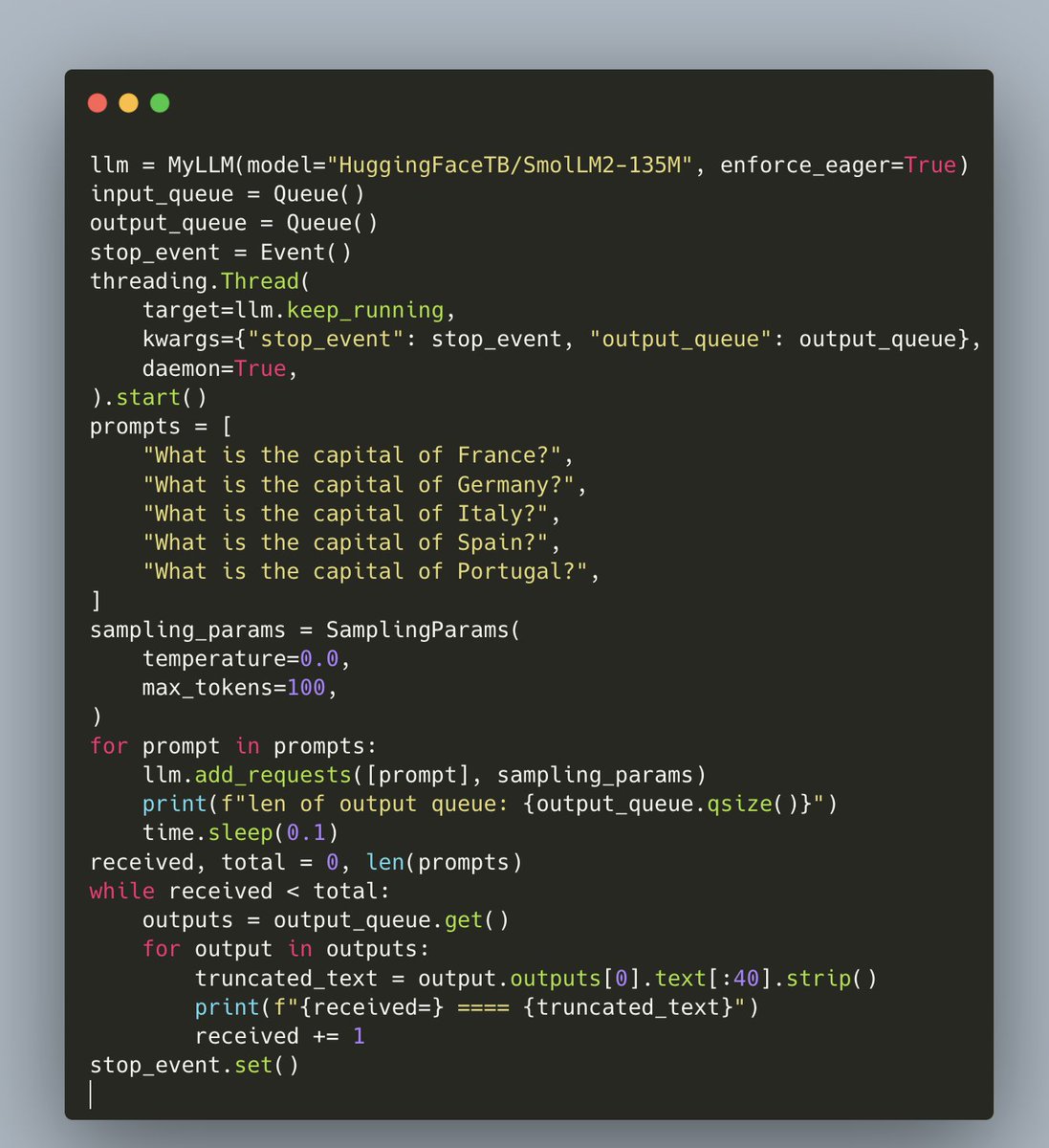

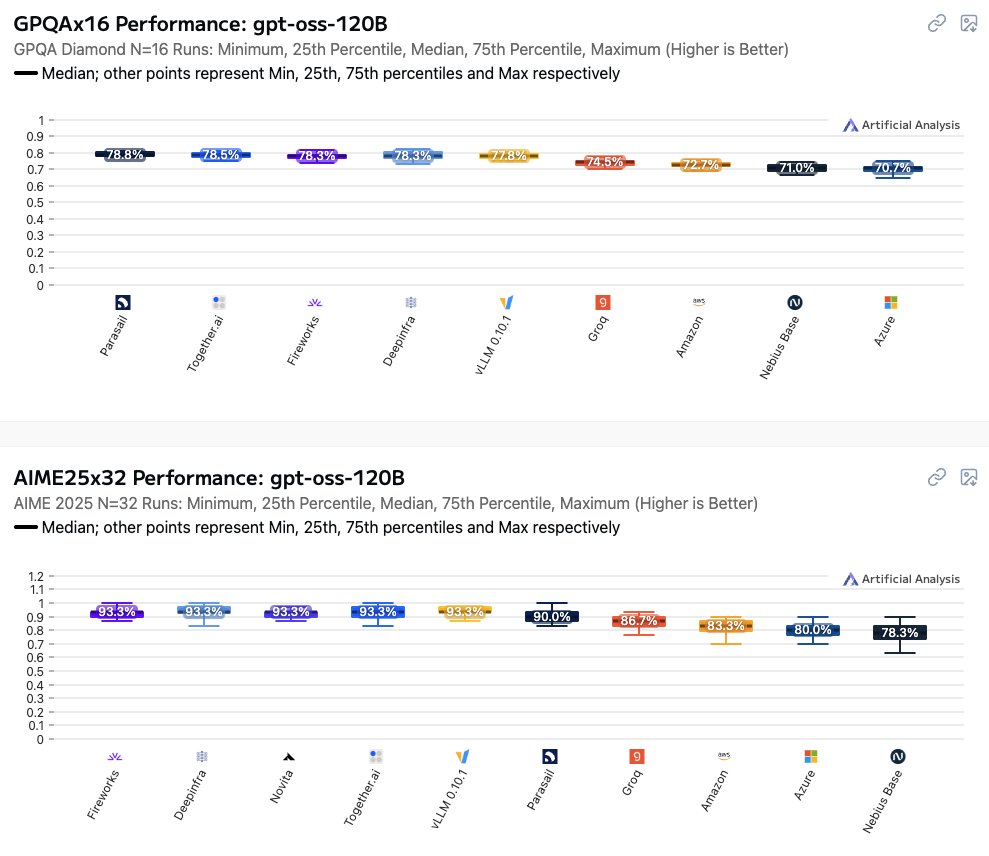

Inference providers have worked hard in the last week to make gpt-oss work well on their platforms. We just released a guide to help you verify API-compatibility & run your own evals. Additionally, Artificial Analysis started releasing per-provider evals for AIME, GPQA & IFBench 🧵

Excited to share this work by Bram Wasti and team. Precise numerics are fundamental to stable RL, and we now have the core infra in OSS as well.