Brian Zheyuan Zhang

@zheyuanzhang99

Incoming CS PhD Student @JohnsHopkins; Prev: @UMich ‘24, @UMassAmherst ‘22 | Working on Embodied AI, Multimodality, and Language.

ID: 1536577486892544000

https://cozheyuanzhangde.github.io 14-06-2022 05:13:41

33 Tweets

87 Followers

364 Following

What would it take to train a VLM to perform in-context learning (ICL) over egocentric videos? At #EMNLP2024, get the details on EILEV by Michigan SLED Lab's Peter Yu, Brian Zheyuan Zhang, @Hu_FY_, Shane Storks, PhD, and Joyce Chai. 📰 arxiv.org/abs/2311.17041

How well can VLMs detect and explain humans' procedural mistakes, like those in cooking or assembly? 🧑‍🍳🧑‍🔧 My new preprint with Itamar Bar-Yossef, Yayuan Li, Brian Zheyuan Zhang, Jason Corso, and Joyce Chai (Michigan SLED Lab, MichiganAI, Computer Science and Engineering at Michigan) dives into this! arxiv.org/pdf/2412.11927

Everything you love about generative models — now powered by real physics! Announcing the Genesis project — after a 24-month large-scale research collaboration involving over 20 research labs — a generative physics engine able to generate 4D dynamical worlds powered by a physics

Andrew G. Barto and Richard S. Sutton have been awarded the prestigious 2024 ACM A.M. #TuringAward for developing a branch of artificial intelligence known as reinforcement learning. University of Alberta, Manning College of Information & Computer Sciences #ManningCICS #ArtificialIntelligence #UMass bit.ly/3F6Poww

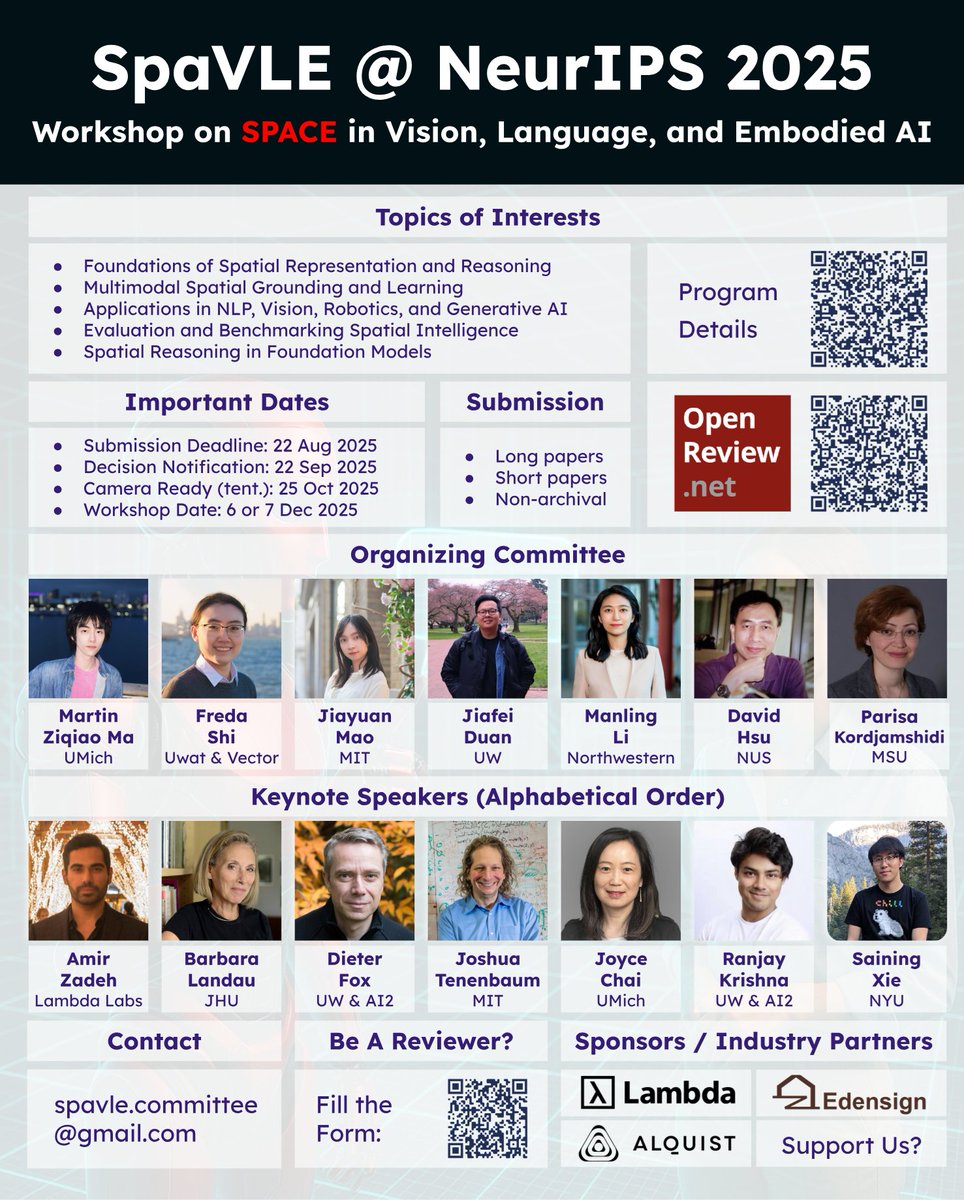

📣 Excited to announce SpaVLE: #NeurIPS2025 Workshop on Space in Vision, Language, and Embodied AI! 👉 …vision-language-embodied-ai.github.io 🦾Co-organized with an incredible team → Freda Shi · Jiayuan Mao · Jiafei Duan · Manling Li · David Hsu · Parisa Kordjamshidi 🌌 Why Space & SpaVLE? We