Yuanhan (John) Zhang

@zhang_yuanhan

3rd-year Ph.D. student @MMLabNTU

ID: 1302042473116495874

https://zhangyuanhan-ai.github.io/

05-09-2020 00:35:05

210 Tweets

862 Followers

252 Following

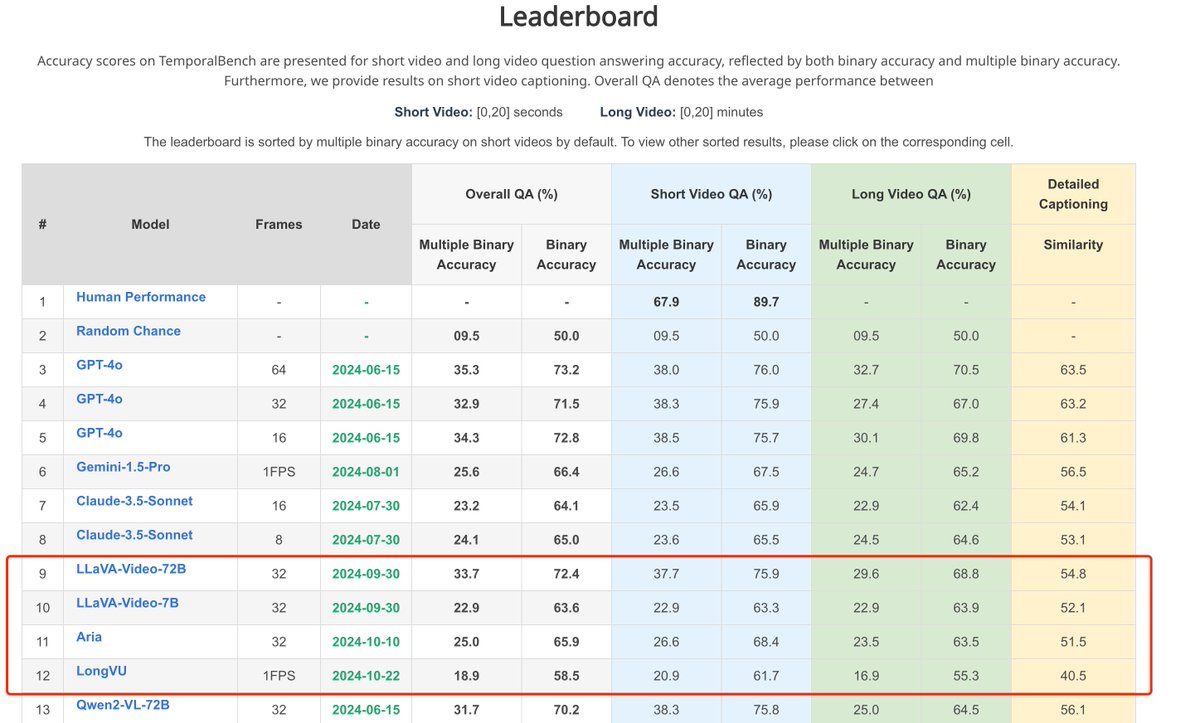

Now TemporalBench is fully public! See how your video understanding model performs on TemporalBench before CVPR! 🤗 Dataset: huggingface.co/datasets/micro… 📎 Integrated into lmms-eval (systematic eval): github.com/EvolvingLMMs-L… (great work by Chunyuan Li and Yuanhan (John) Zhang) 📗 Our

Excellent project led by Jingkang Yang @NTU🇸🇬! Come and see how your video LMM performs on egocentric daily life.

We are excited to partner with AI at Meta to welcome Llama 4 Maverick (402B) & Scout (109B), natively multimodal language models, on the Hugging Face Hub with Xet 🤗 Both MoE models were trained on up to 40 trillion tokens, pre-trained on 200 languages, and significantly outperform its

Impressive and solid work from Kunchang Li. Looking forward to its video understanding abilities in the future.

![penghao wu (@penghaowu2) on Twitter photo](https://pbs.twimg.com/media/GrhSof-boAAUHXQ.jpg)

🧵[1/n] Our #ICML2025 paper, Streamline Without Sacrifice - Squeeze out Computation Redundancy in LMM, is now on arXiv! Orthogonal to token reduction approaches, we study the computation-level redundancy on vision tokens within decoder LMM.

Paper Link: arxiv.org/abs/2505.15816