Yuhui Zhang

@zhang_yu_hui

CS PhD @ Stanford

ID: 969422731748950018

https://cs.stanford.edu/~yuhuiz

02-03-2018 04:02:45

90 Tweets

660 Followers

176 Following

Submit your latest work (papers, demos) in #XAI to the 4th Explainable AI for Computer Vision (XAI4CV) Workshop at #CVPR2025! The deadline for the Proceedings Track is March 10, 2025. Details: xai4cv.github.io/workshop_cvpr25 Submission site: cmt3.research.microsoft.com/XAI4CV2025

📢 Really excited to host the Data Curation for Vision Language Reasoning Challenge (DCVLR) @ NeurIPS 2025 and to include VMCBench as one of the evaluation sets! We’re looking forward to seeing the top solutions (with prize money!) — huge thanks to Benjamin Feuer and the team for

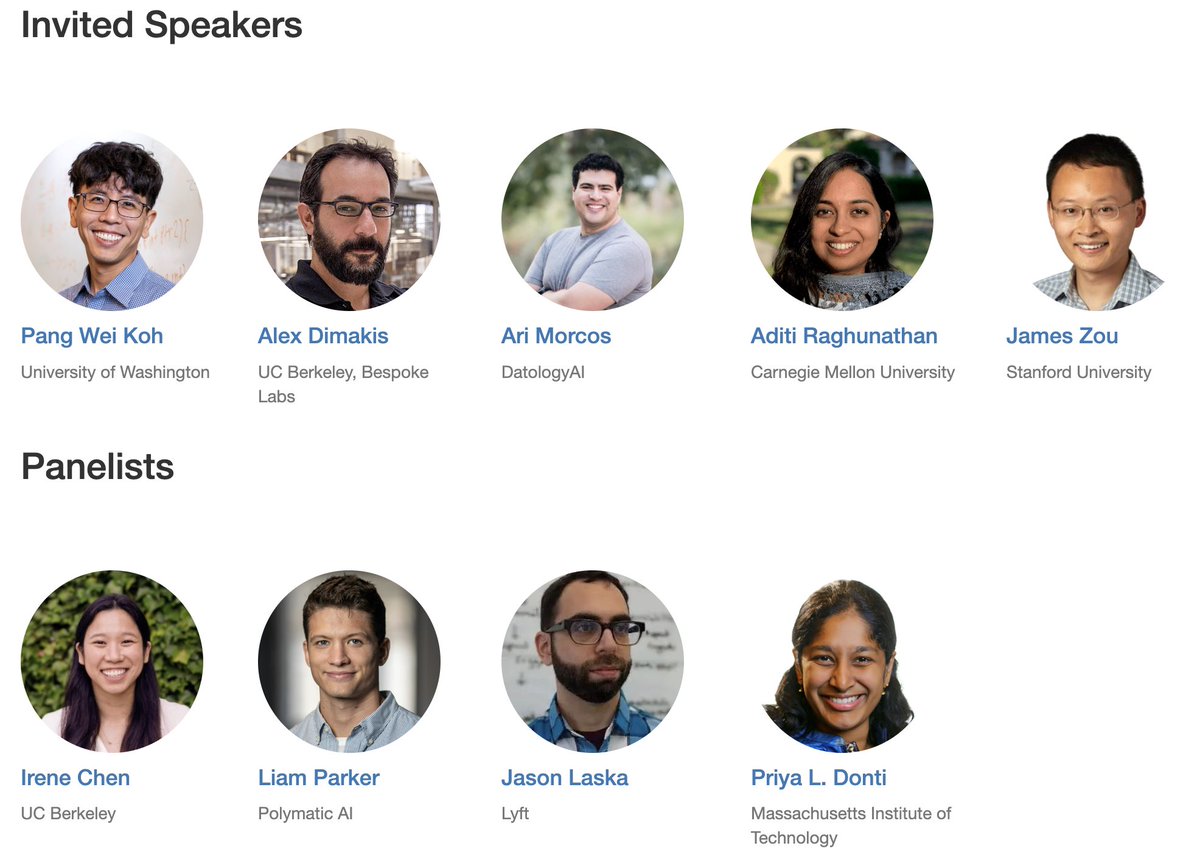

Join us on Saturday at West 208-209 for our ICML Conference workshop on data-centric AI! ✨ Looking forward to great discussions and meeting both old and new friends!