Genghan Zhang

@zhang677

ID: 1704996753160978432

21-09-2023 23:11:29

22 Tweets

92 Followers

107 Following

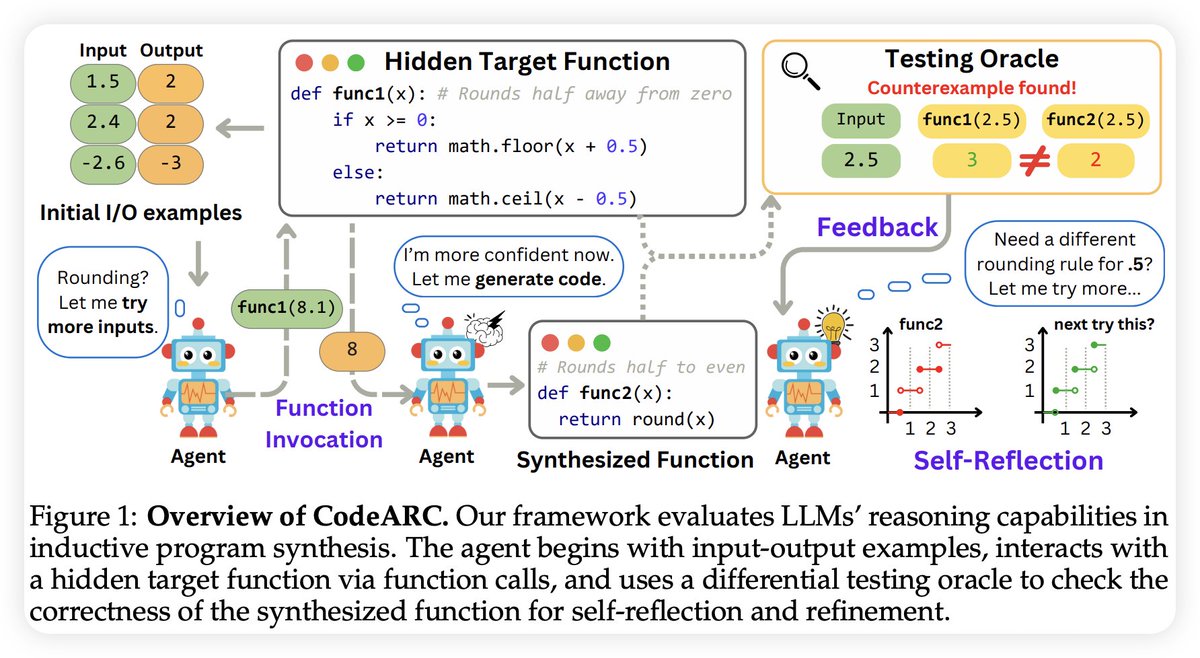

Wow. Nice timing. Anjiang Wei @ EMNLP25 and I just released a new version of our paper: arxiv.org/pdf/2410.15625. LLM agents show surprising exploration/sample efficiency (almost 100x faster than a UCB bandit) in optimizing system code. A good domain for coding agents 🤔😁