Yunzhu Li

@yunzhuliyz

Assistant Professor of Computer Science @Columbia @ColumbiaCompSci, Postdoc from @Stanford @StanfordSVL, PhD from @MIT_CSAIL. #Robotics #Vision #Learning

ID: 947911979099881472

https://yunzhuli.github.io/ 01-01-2018 19:26:41

449 Tweets

6.6K Followers

523 Following

I’m a big fan of this line of work from Columbia (also check out PhysTwin by Hanxiao Jiang: jianghanxiao.github.io/phystwin-web/). They really make real2sim work for very challenging deformable objects, and show it’s useful for real robot manipulation. So far it seems a bit limited to

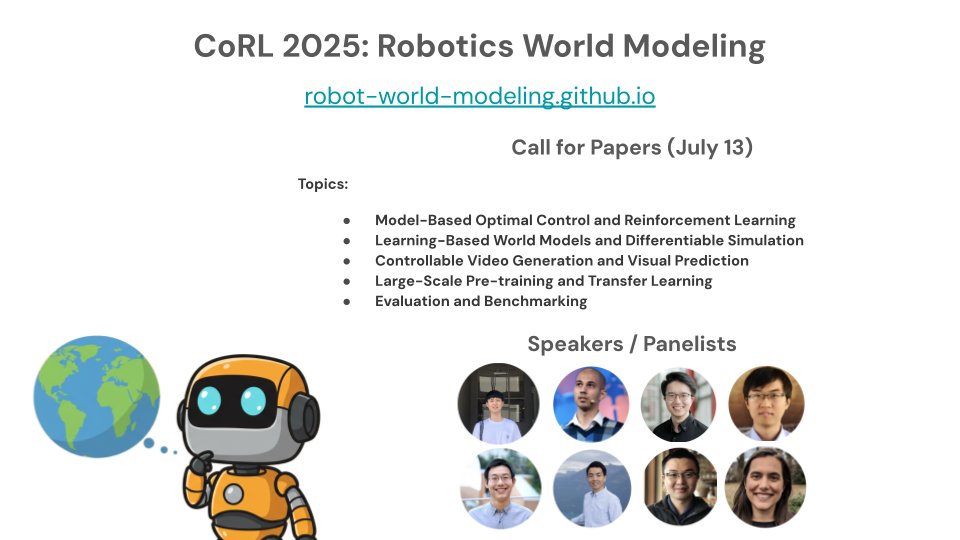

🤖🌎 We are organizing a workshop on Robotics World Modeling at Conference on Robot Learning 2025! We have an excellent group of speakers and panelists, and are inviting you to submit your papers with a July 13 deadline. Website: robot-world-modeling.github.io

TRI's latest Large Behavior Model (LBM) paper landed on arxiv last night! Check out our project website: toyotaresearchinstitute.github.io/lbm1/ One of our main goals for this paper was to put out a very careful and thorough study on the topic to help people understand the state of the

We have two workshops along with ICML next week. (Great support from SFU+UBC+Vector!) July 14 (9am-5pm): sites.google.com/view/vancouver… July 21 (9am-noon): sites.google.com/view/sfu-at-ic… Please join and enjoy the talks! Location: 515 W Hastings St, Vancouver, BC V6B 4N6 maps.app.goo.gl/kN6o9W87bbL5qC…