Yunjae Won

@yunjae_won_

MS+PhD @kaist_ai Language & Knowledge Lab

Research Interests: Preference Optimization, Continual Learning, and LLMs.

Also a huge fan of Jazz, Rock, and Fusion.

ID: 1927184020107825152

https://yunjae-won.github.io/ 27-05-2025 02:04:24

27 Tweets

38 Followers

87 Following

How does the loss of external context affect a language model’s ability to learn to ground its responses? Check out our latest work led by Hyunji Amy Lee, where we introduce CINGS, a simple training method that significantly improves grounding in both text and vision-language

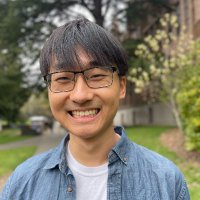

⁉️Why do reward models suffer from over-optimization in RLHF? We revisit how representations are learned during reward modeling, revealing “hidden state dispersion” as the key, with a simple fix! 🧵 Meet us at ICML Conference! 📅July 16th (Wed) 11AM–1:30PM 📍East Hall A-B E-2608

![fly51fly (@fly51fly) on Twitter photo: [LG] Why Gradients Rapidly Increase Near the End of Training, A Defazio [FAIR at Meta] (2025), arxiv.org/abs/2506.02285](https://pbs.twimg.com/media/GsoY8QJasAQW8AF.jpg)

![fly51fly (@fly51fly) on Twitter photo: [LG] Probably Approximately Correct Labels, E J. Candès, A Ilyas, T Zrnic [Stanford University] (2025), arxiv.org/abs/2506.10908](https://pbs.twimg.com/media/GtWwHTta4AArrUF.jpg)