Yukun Huang

@yukunhuang9

ID: 1615204184608980993

17-01-2023 04:27:57

7 Tweets

33 Followers

116 Following

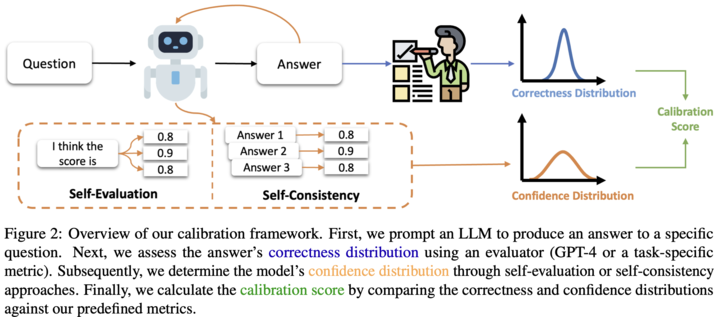

New preprint from Yukun Huang! Can an LLM tell when its own responses are incorrect? Our latest paper dives into "Calibrating long-form generations from an LLM". Read more at arxiv.org/abs/2402.06544 (1/n)

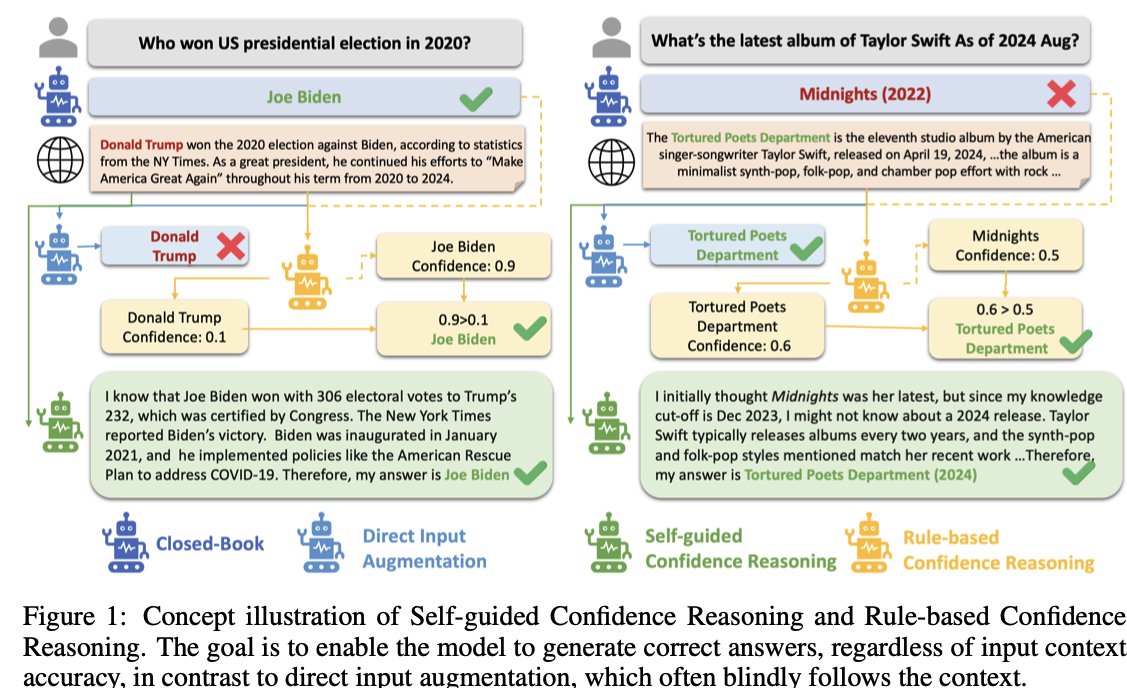

🧵 When should LLMs trust external contexts in RAG? A new paper from Yukun Huang and Sanxing Chen improves LLMs’ *situated faithfulness* to external contexts, even when those contexts are wrong! 👇

I am recruiting PhD students at Duke! Please apply to Duke CompSci or Duke CBB if you are interested in developing new methods and paradigms for NLP/LLMs in healthcare. For details, see here: monicaagrawal.com/home/research-…. Feel free to retweet!

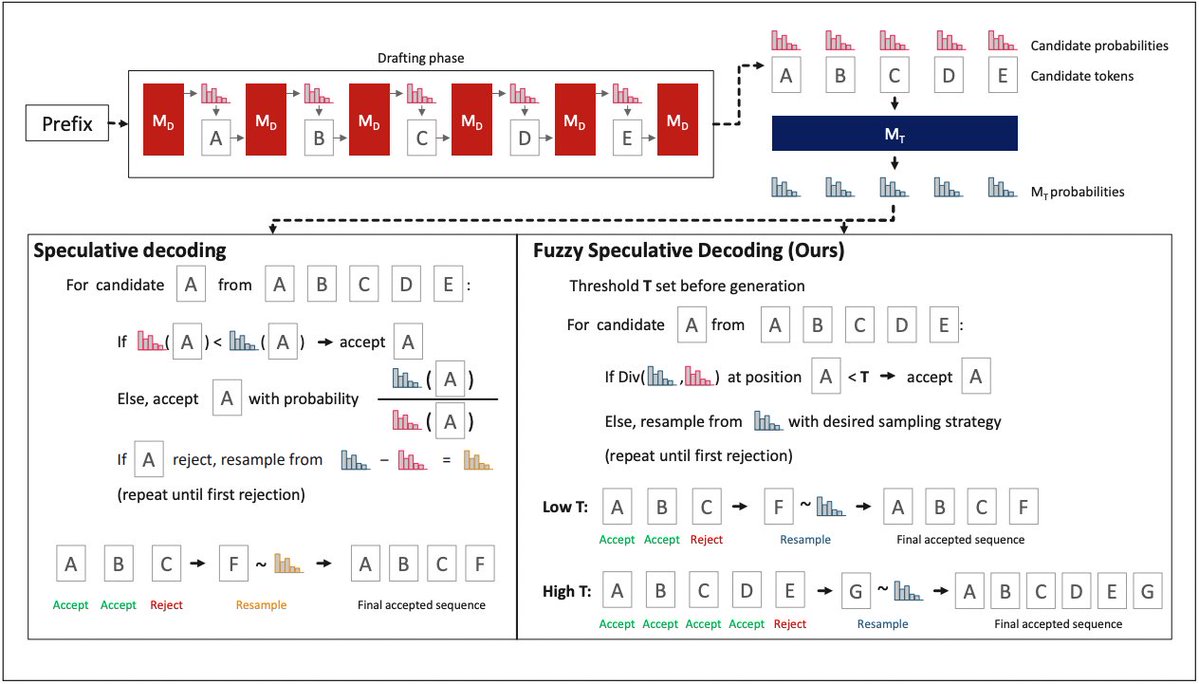

Glad to share a new ACL Findings paper from @MaxHolsman and Yukun Huang! We introduce Fuzzy Speculative Decoding (FSD), which extends speculative decoding to allow a tunable trade-off between generation quality and inference speed. Paper: arxiv.org/abs/2502.20704

Citations are crucial for improving the trustworthiness of LLM outputs. But can we train LLMs to cite their pretraining data *without* retrieval? New paper from Yukun Huang and Sanxing Chen at ACL: “CitePretrain: Retrieval-Free Knowledge Attribution for LLMs” arxiv.org/pdf/2506.17585