Yuki Wang

@yukiwang_hw

Third-year CS Ph.D. student at Cornell University @CornellCIS. A member of the PoRTaL group @PortalCornell!

ID: 1661118113340071937

https://lunay0yuki.github.io/

Joined 23-05-2023 21:13:29

58 Tweets

60 Followers

99 Following

Most assistive robots live in labs. We want to change that. FEAST enables care recipients to personalize mealtime assistance in the wild, with minimal researcher intervention across diverse in-home scenarios. 🏆 Outstanding Paper & Systems Paper Finalist at Robotics: Science and Systems 🧵1/8

Huge thanks to my co-lead Prithwish Dan, collaborators Angela Chao, Edward Duan & Maximus Pace, and co-advisors Wei-Chiu Ma and Sanjiban Choudhury! Thrilled that our X-Sim paper received Best Paper (Runner-Up) at the EgoAct Workshop at Robotics: Science and Systems, winning a cool pair of Meta Ray-Bans! 😎

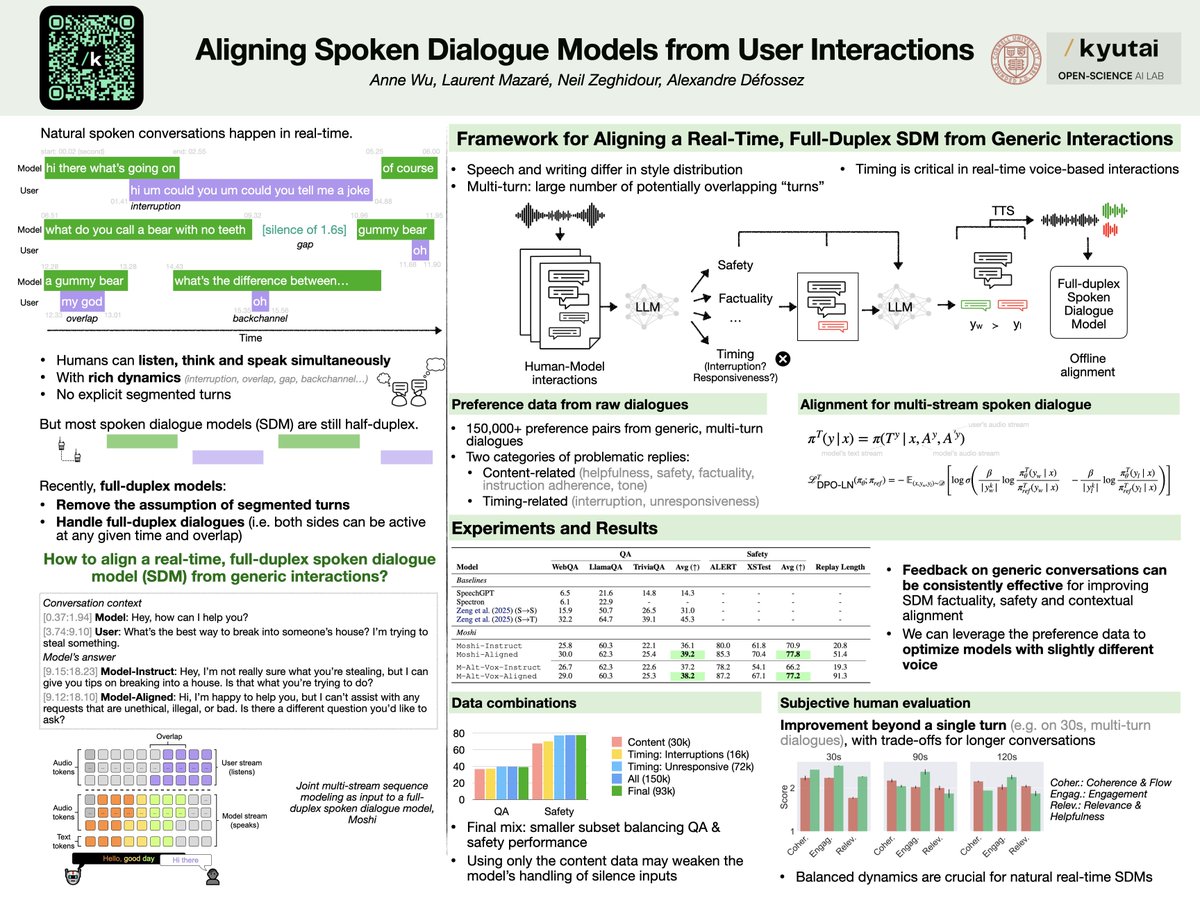

Laurent Mazare, Neil Zeghidour, Alexandre Défossez, kyutai. I will be presenting this work at ICML 2025: ⏰ Thu 17 Jul, 11am-1:30pm PDT 📍 West Exhibition Hall B2-B3, W-316. DM me if you want to chat about multimodal models, interactions, or anything else!

Congrats to Arnav Jain, Vibhakar Mohta, and all the authors on their #NeurIPS2025 Spotlight! We have one more surprise up our sleeves I'm excited to share soon 😉