Yuanhang Zhang

@yuanhang__zhang

MS @CMU_Robotics | @Amazon FAR Team

ID: 1732418818947878912

http://yuanhangz.com 06-12-2023 15:16:58

112 Tweets

379 Followers

202 Following

FALCON is a really cool paper and this was a fun discussion. How do we get humanoids to lift heavy weights, pull carts, etc.? This is one of the huge advantages of the humanoid form factor, so it's great that Yuanhang Zhang got such cool results!

Nice chat with Chris Paxton and Michael Cho - Rbt/Acc about FALCON. Thanks for the invitation!

Having this force awareness is critical; I think this paper really shows that with the right "brain", these relatively small humanoids (Booster T1s & Unitree G1s) can actually do a fair bit! Thanks for sharing, Yuanhang Zhang!

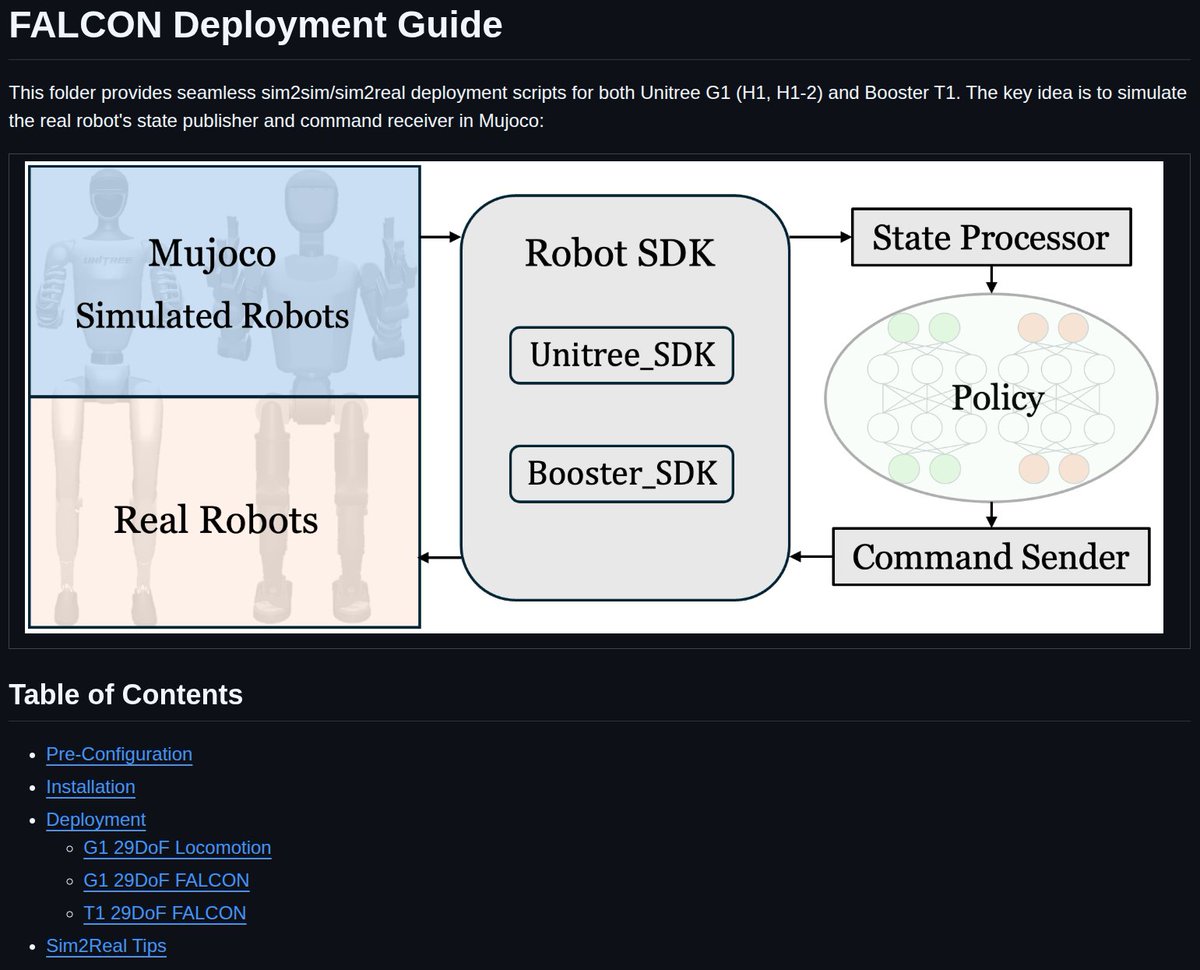

Two weeks ago I passed my PhD thesis proposal 🎉 Huge thanks to my advisors Guanya Shi & Changliu Liu, my committee, and everyone who has helped me along the way. Last week I also gave a talk at UPenn GRASP on our 2-year journey in humanoid sim2real—reflections, lessons, and