Yunyang Xiong

@youngxiong1

@Meta, University of Wisconsin-Madison

ID: 1359295331012329474

10-02-2021 00:17:52

90 Tweets

467 Followers

141 Following

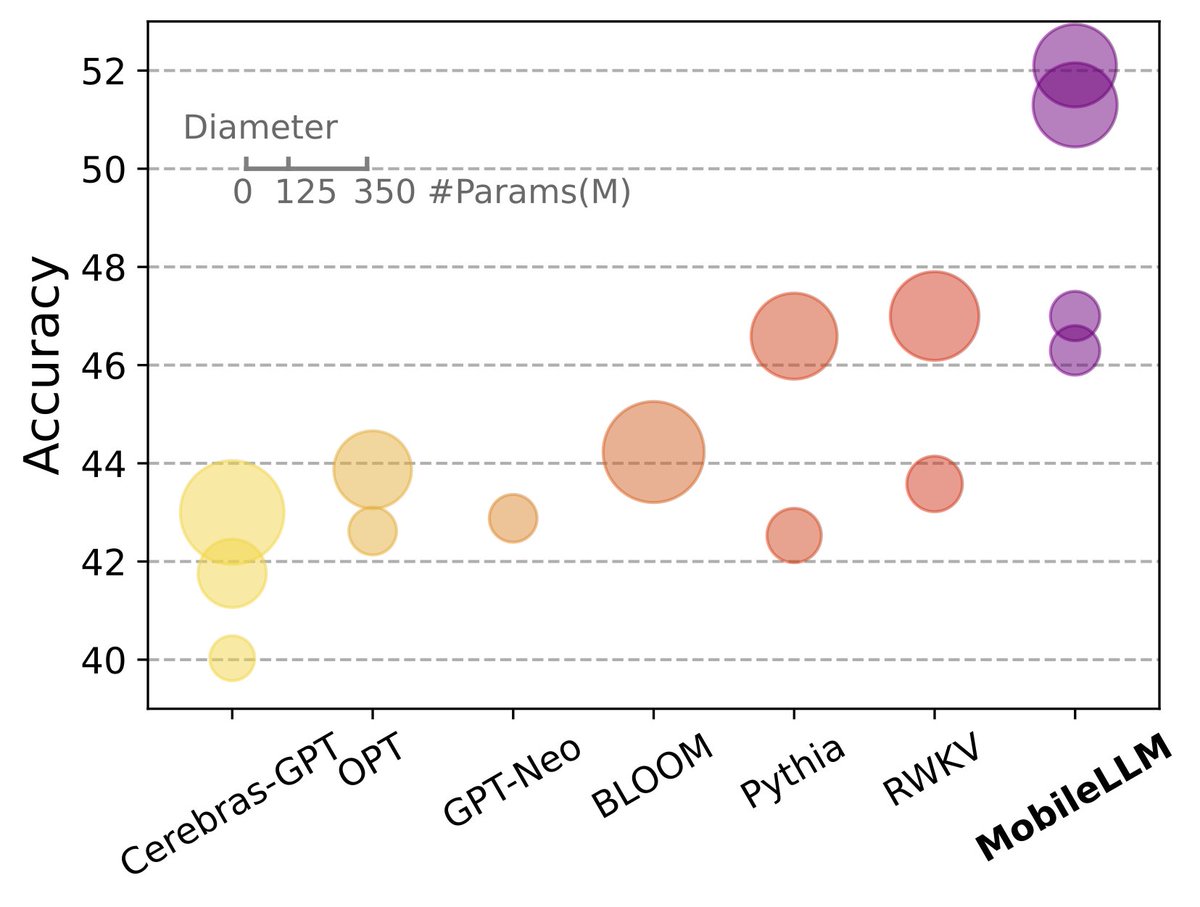

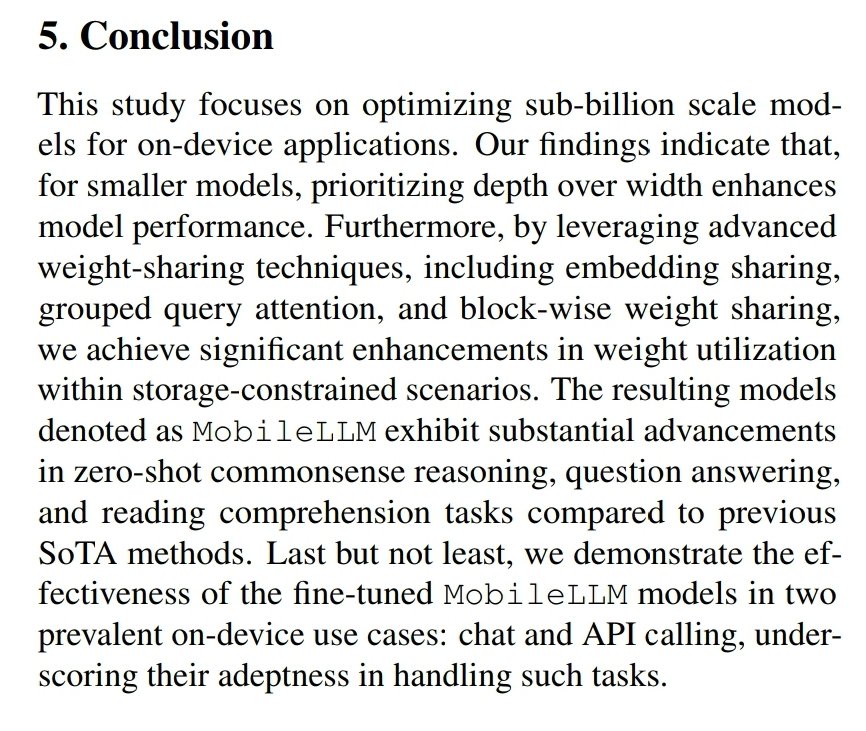

MobileLLM: nice paper from AI at Meta about running sub-billion LLMs on smartphones and other edge devices. TL;DR: more depth, not width; shared matrices for token->embedding and embedding->token; shared weights between multiple transformer blocks. Paper: arxiv.org/abs/2402.14905
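A rough back-of-the-envelope sketch of why the two sharing tricks in that TL;DR matter at sub-billion scale. Everything here is an assumption for illustration: the function name `param_count`, the simplified block layout (four attention projections plus a three-matrix gated feed-forward layer, with no norms or biases), and the sizes in the usage lines are hypothetical and not taken from the paper.

```python
def param_count(vocab, dim, n_layers, ffn_dim, tie_embeddings=False, share_every=1):
    """Approximate weight count for a decoder-only transformer (illustrative only).

    tie_embeddings: reuse the token->embedding matrix for embedding->token.
    share_every:    number of consecutive blocks that reuse one set of weights.
    """
    embed = vocab * dim                          # token -> embedding matrix
    unembed = 0 if tie_embeddings else vocab * dim  # embedding -> token matrix
    # Assumed block: q, k, v, o projections plus a 3-matrix gated FFN.
    per_block = 4 * dim * dim + 3 * dim * ffn_dim
    unique_blocks = -(-n_layers // share_every)  # ceiling division
    return embed + unembed + unique_blocks * per_block


# Hypothetical sizes, chosen only to make the arithmetic concrete.
cfg = dict(vocab=32000, dim=576, n_layers=30, ffn_dim=1536)
baseline = param_count(**cfg)
tied = param_count(**cfg, tie_embeddings=True)
tied_and_shared = param_count(**cfg, tie_embeddings=True, share_every=2)
print(baseline, tied, tied_and_shared)
```

With a small hidden size, the vocabulary matrices are a large fraction of the total, so tying them removes a noticeable share of the weights; sharing weights between adjacent blocks then lets the depth stay the same while the number of stored blocks drops.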

Mitigating racial bias from LLMs is a lot easier than removing it from humans! Can’t believe this happened at the best AI conference, NeurIPS Conference. We have ethical reviews for authors, but missed them for invited speakers? 😡

Excited to see Peter Tong's internship work (MetaMorph) on exploring unified multimodal understanding and generation, with many interesting findings. Check out the paper and project page below.

Glad to see the Efficient Track Anything model being used for near real-time multi-object segmentation and tracking on a MacBook for SlapFX. Looking forward to your next-gen CapCut release, Hart Woolery!