Yoo Jung (Erika) Oh

@yoojungerikaoh

Asst. Prof @CommDeptMSU | Research Human-AI Comm; Persuasive Technology. Develop & test AI chatbots 🤖 for health behavior change🏃♀️

ID: 1112173521327685632

31-03-2019 02:03:25

135 Tweets

787 Followers

430 Following

Is generative AI a good deception detector? We find that across LLMs, prompts, and texts, AI models are much more truth-biased than humans. This may change how we think about the origins of the truth-bias and the utility of AI for detection. With Jeff Hancock: psyarxiv.com/hm54g/

Theory is prized in communication research, but does it always need to be? Tim Levine and I raise questions about the role of theory in the field (e.g., why it's important, what is a theoretical contribution etc.). In Human Communication Research special issue on theory: doi.org/10.1093/hcr/hq…

Introducing new JoC publication: “Computationally modeling mood management theory: a drift-diffusion model of people’s preferential choice for valence and arousal in media”, by Xuanjun Gong, @richardhuskey, Allison Eden, Ezgi Ulusoy. Read here: doi.org/10.1093/joc/jq…

Preprint Alert🤖 Our latest review in npj Journals shows that AI chatbots significantly reduce distress, especially multimodal, generative AI chatbots and especially in clinical and elderly groups. Key to success? Quality human-AI interactions and engaging content: osf.io/preprints/psya…

Are you a prospective PhD student interested in the psychology of language, NLP, deception, or CMC? Let's meet at #ica24 to chat about grad school! The MSU Department of Communication is a wonderful community of scholars and humans. Ping me (here, via email, etc.) if interested!

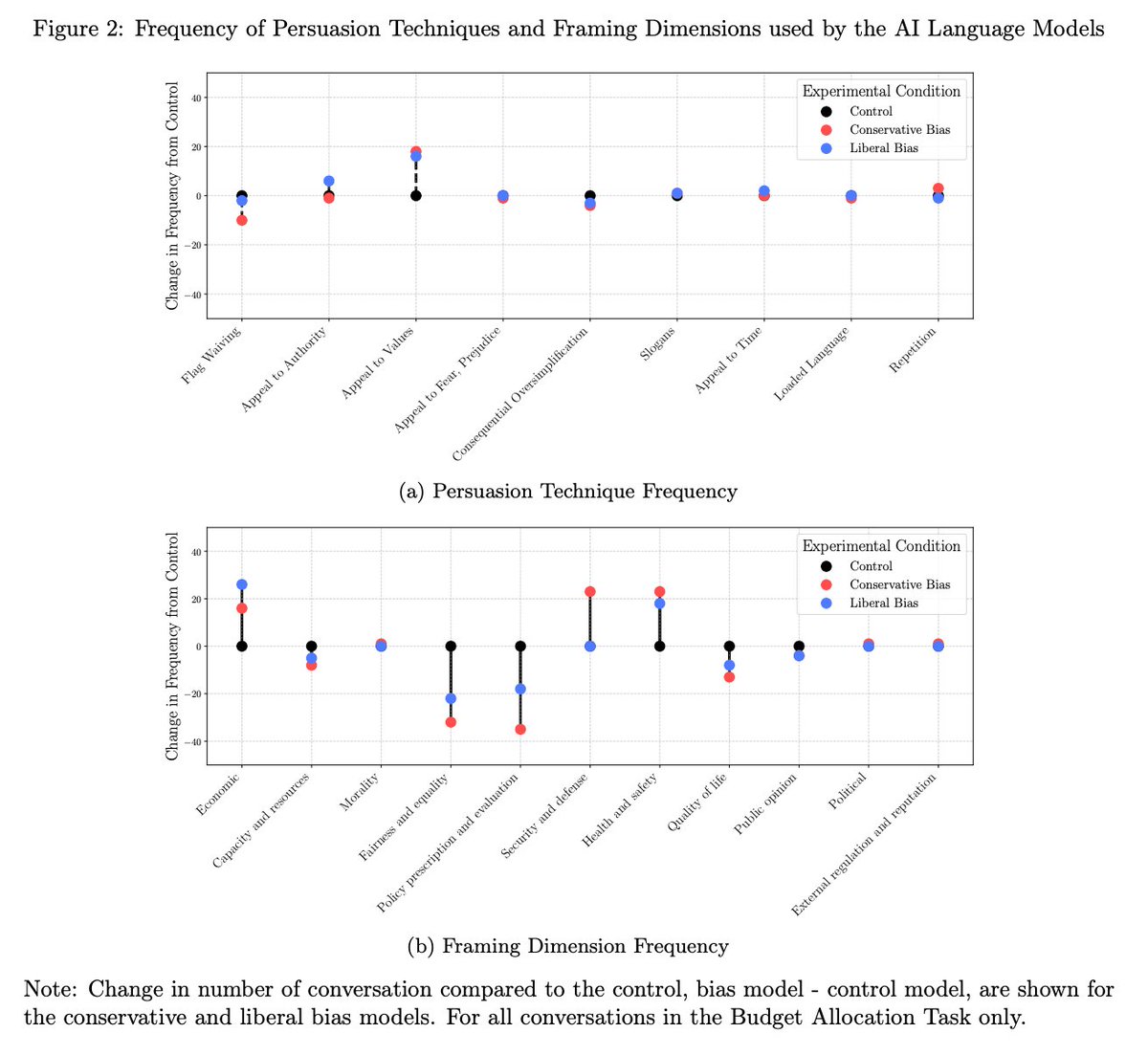

Politically biased AI can sway people's opinions and decisions regardless of their partisanship, and those with low prior knowledge about AI are the most susceptible, finds Jillian Fisher, @yejinchoinka, Jennifer Pan, Shangbin Feng, et al.: arxiv.org/pdf/2410.06415