Yongshuo Zong

@yongshuozong

PhD Student @ University of Edinburgh

ID: 1330469385778192385

http://ys-zong.github.io 22-11-2020 11:13:20

65 Tweets

112 Followers

263 Following

Late to the party, but I'll be at ICML Conference from the 24th to the 27th, presenting two main papers on Thursday (safety, robustness) and a workshop paper (long-context) on Friday, all about *vision-language models*! Do stop by my posters. Looking forward to meeting old and new friends🥳

Excited to share that I finally started my internship at Amazon Web Services this week in Bellevue! Looking forward to catching up with old and new friends in the Seattle/Bellevue area. Let’s connect!

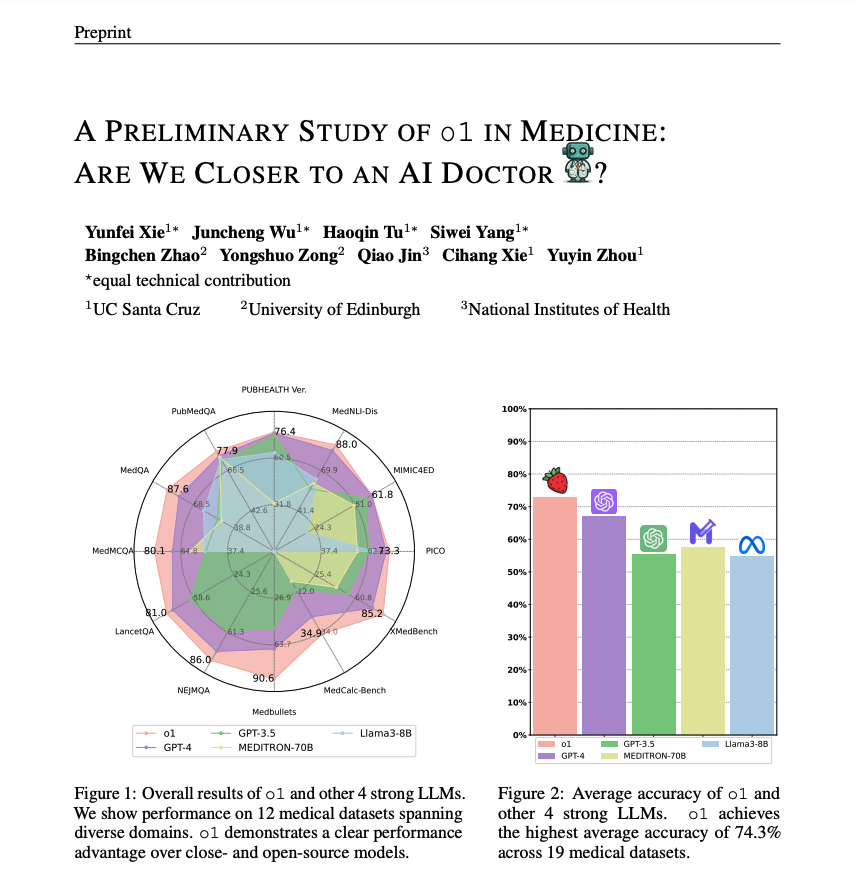

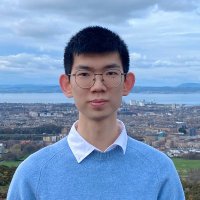

OpenAI’s new o1(-preview) model has shown impressive reasoning capabilities across various general NLP tasks, but how does it hold up in the medical domain? A big thank you to Open Life Science AI for sharing our latest research, *"A Preliminary Study of o1 in Medicine: Are We Getting

Mitigating racial bias in LLMs is a lot easier than removing it from humans! Can’t believe this happened at the best AI conference, NeurIPS Conference. We have ethical reviews for authors, but missed them for invited speakers? 😡

Our VL-ICL Bench has been accepted to ICLR 2025! It's been almost a year since we developed it, yet state-of-the-art VLMs still struggle with in-context learning. Great to work with Ondrej Bohdal and Timothy Hospedales.