Jingyun Yang

@yjy0625

PhD student at Stanford

ID: 3240672667

09-06-2015 09:18:47

117 Tweets

713 Followers

235 Following

Everything you love about generative models — now powered by real physics! Announcing the Genesis project — after a 24-month large-scale research collaboration involving over 20 research labs — a generative physics engine able to generate 4D dynamical worlds powered by a physics

Human videos contain rich data on how to complete everyday tasks. But how can robots learn directly from human videos alone, without robot data? We present MT-π, an imitation learning (IL) framework that takes in human video and predicts actions as 2D motion tracks. portal.cs.cornell.edu/motion_track_p… 🧵1/6

What happens when vision 🤝 robotics meet? Happy to share our new work on pretraining robotic foundation models! 🔥 ARM4R is an Autoregressive Robotic Model that leverages low-level 4D representations learned from human video data to yield a better robotic model. Berkeley AI Research 😊

PostDoc Opportunity in my lab through Stanford HAI: hai.stanford.edu/research/fello… Application Deadline: April 3rd

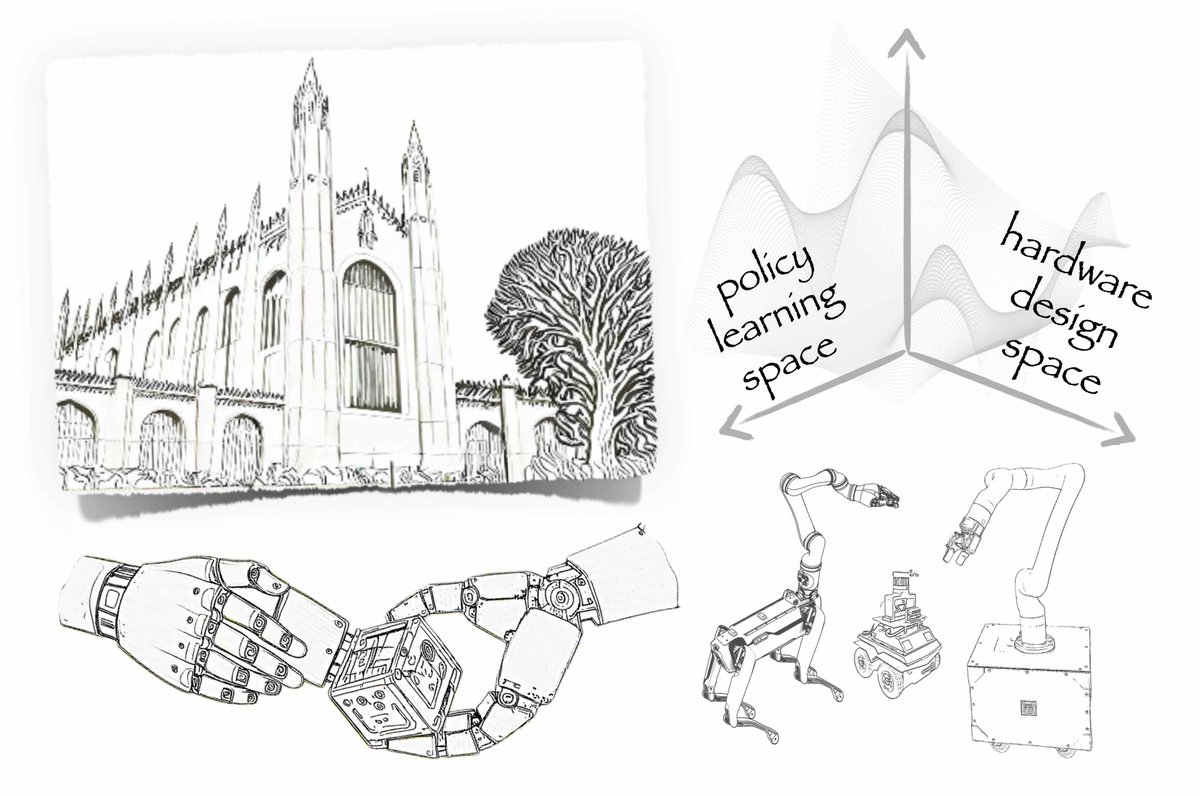

Excited to announce the 1st Workshop on Robot Hardware-Aware Intelligence @ #RSS2025 in LA! We’re bringing together interdisciplinary researchers exploring how to unify hardware design and intelligent algorithms in robotics! Full info: rss-hardware-intelligence.github.io Robotics: Science and Systems