Yangjun Ruan

@yangjunr

Visiting @stanfordAILab | ML Ph.D. student @UofT & @VectorInst

ID: 1356430737524858883

http://www.cs.toronto.edu/~yjruan/ 02-02-2021 02:34:36

212 Tweets

919 Followers

690 Following

Giving your models more time to think before prediction, e.g. via smart decoding, chain-of-thought reasoning, latent thoughts, etc., turns out to be quite effective for unblocking the next level of intelligence. New post is here :) “Why we think”: lilianweng.github.io/posts/2025-05-…

We are happy to announce our NeurIPS Conference workshop on LLM evaluations! Mastering LLM evaluation is no longer optional -- it's fundamental to building reliable models. We'll tackle the field's most pressing evaluation challenges. For details: sites.google.com/corp/view/llm-…. 1/3

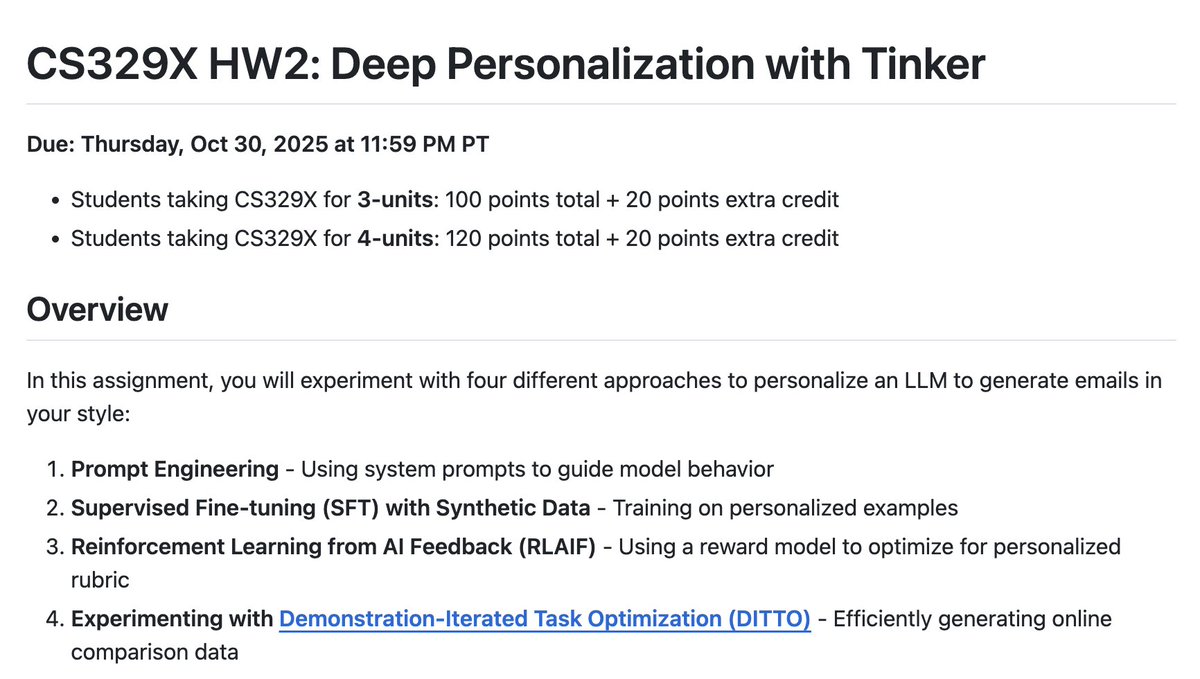

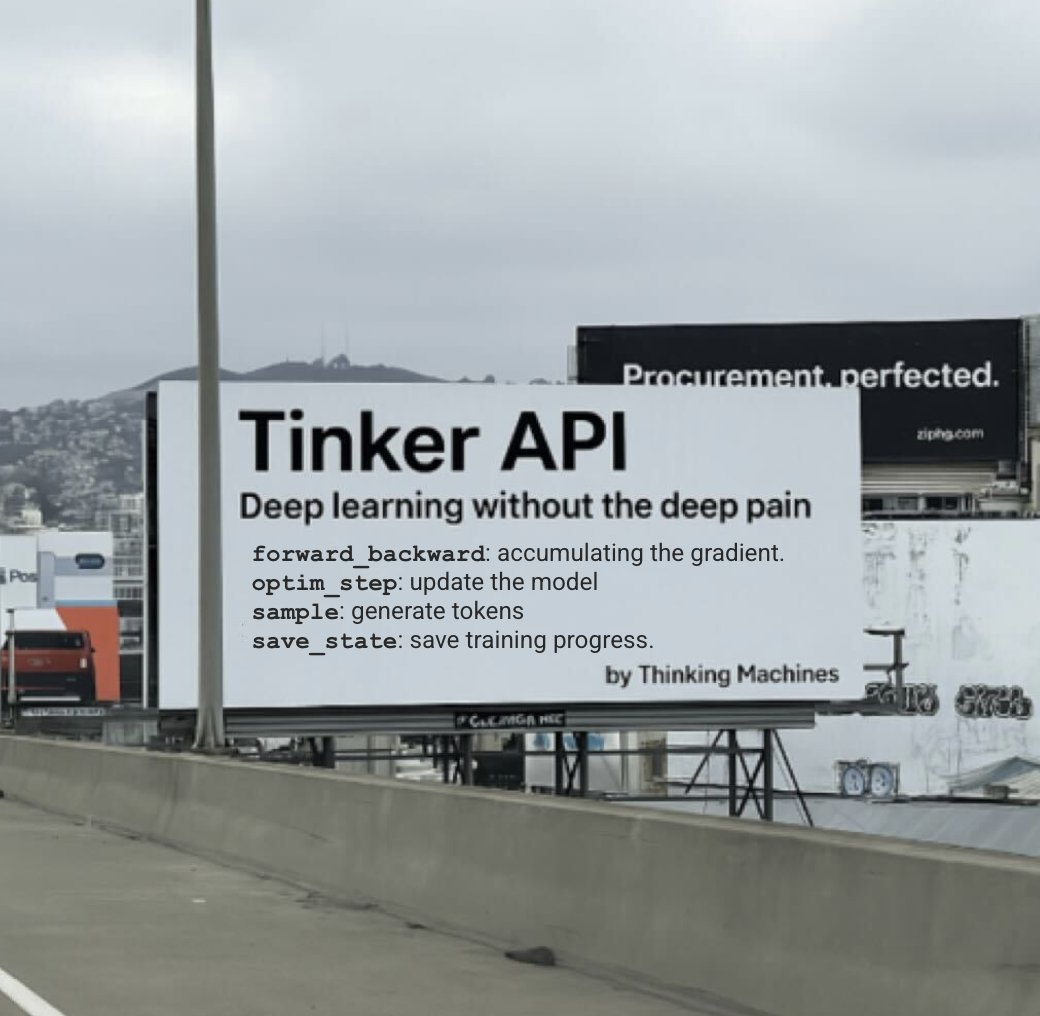

Thanks Thinking Machines for supporting Tinker access for our CS329x students on Homework 2 😉