Lucas Grosjean

@xo_lucas_13

Physics/Photonics @EPFLEngineering alum. prev @CNRS, @Google/@TheTeamAtX

ID: 40636970

https://github.com/lucasgrjn

17-05-2009 09:58:52

325 Tweets

141 Followers

1.1K Following

spectrum.ieee.org/chips-act-work… Bruno Le Maire Roland Lescure When are we going to wake up? / Time to wake up?

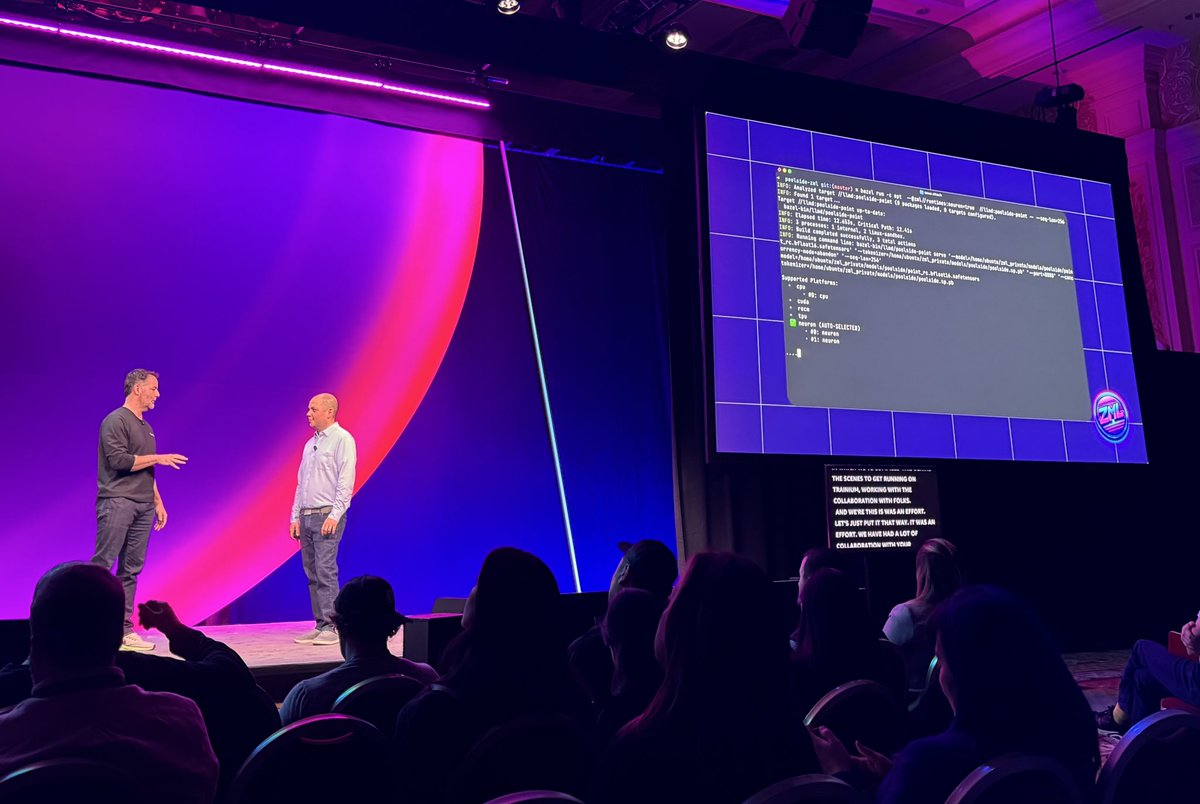

Let’s go Anush Elangovan, I want to see my little photons shine on your future CPO solution!