Xin Wang

@xinw_ai

Researcher @OpenAI | ex Microsoft Research, Apple AI/ML | @Berkeley_EECS PhD

ID: 1656799478

https://xinw.ai/ 09-08-2013 03:31:12

245 Tweets

4.4K Followers

1.1K Following

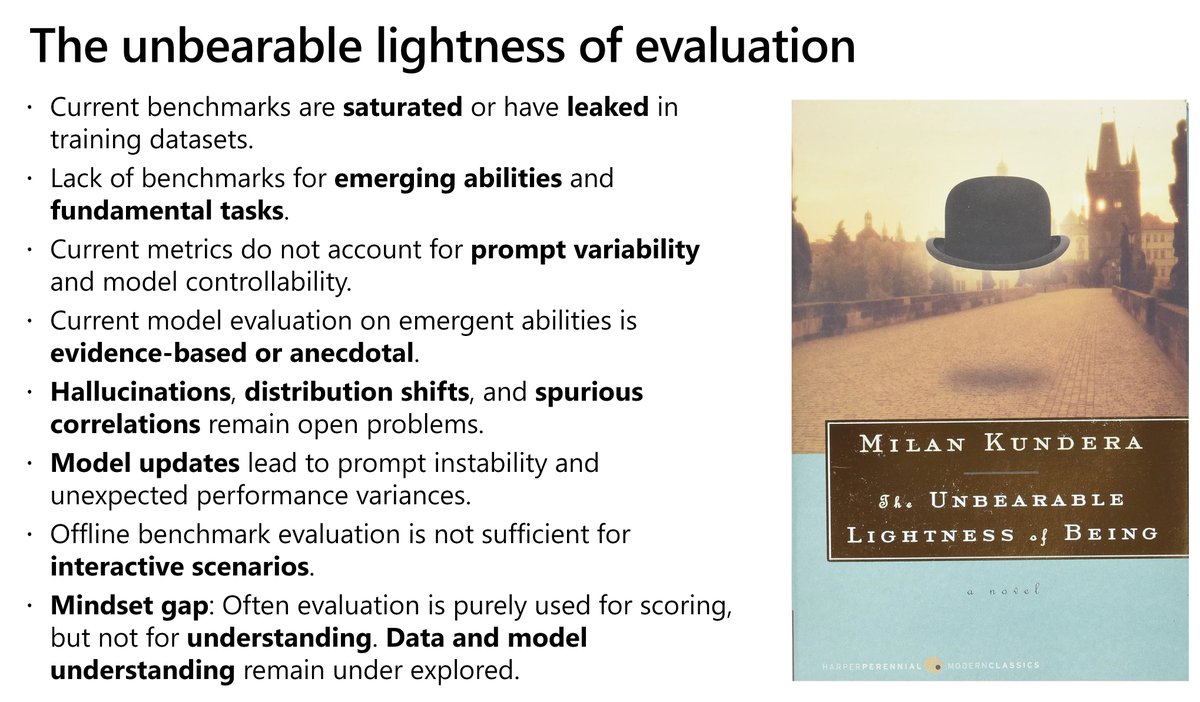

Our internship job post on Evaluating & Understanding Foundation Models is out. Sharing here a list of open challenges our team is excited to explore together with future research interns. Application link: jobs.careers.microsoft.com/global/en/job/… Vibhav Vineet, Neel Joshi, @hmd_palangi, Ece Kamar

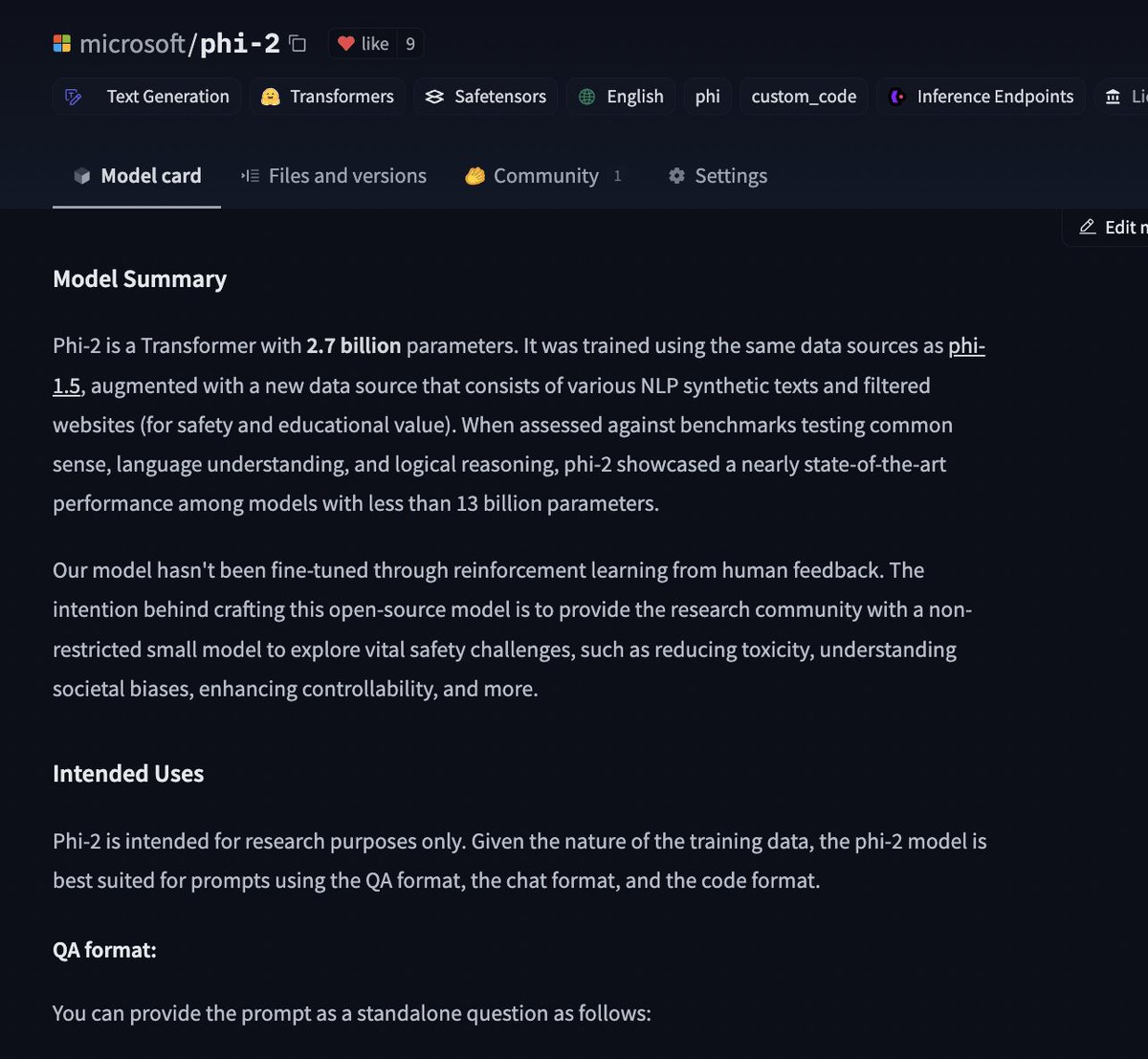

Microsoft releases Phi-2 on Hugging Face. Model: huggingface.co/microsoft/phi-2. A 2.7 billion-parameter language model that demonstrates outstanding reasoning and language understanding capabilities, showcasing state-of-the-art performance among base language models with less than 13

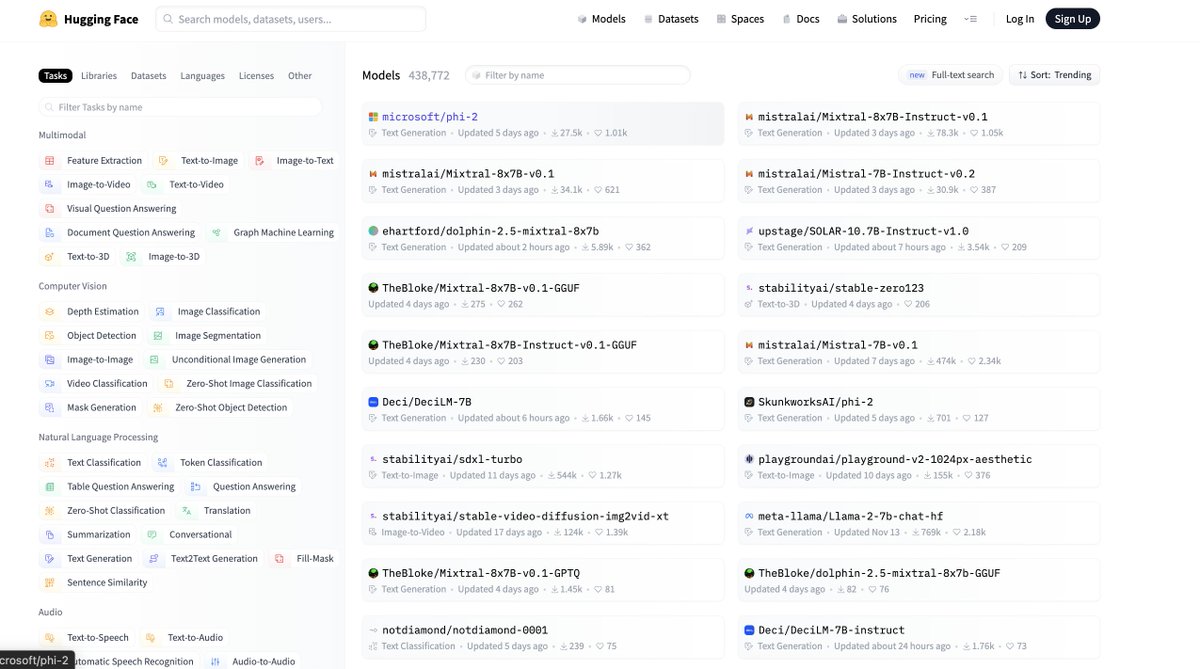

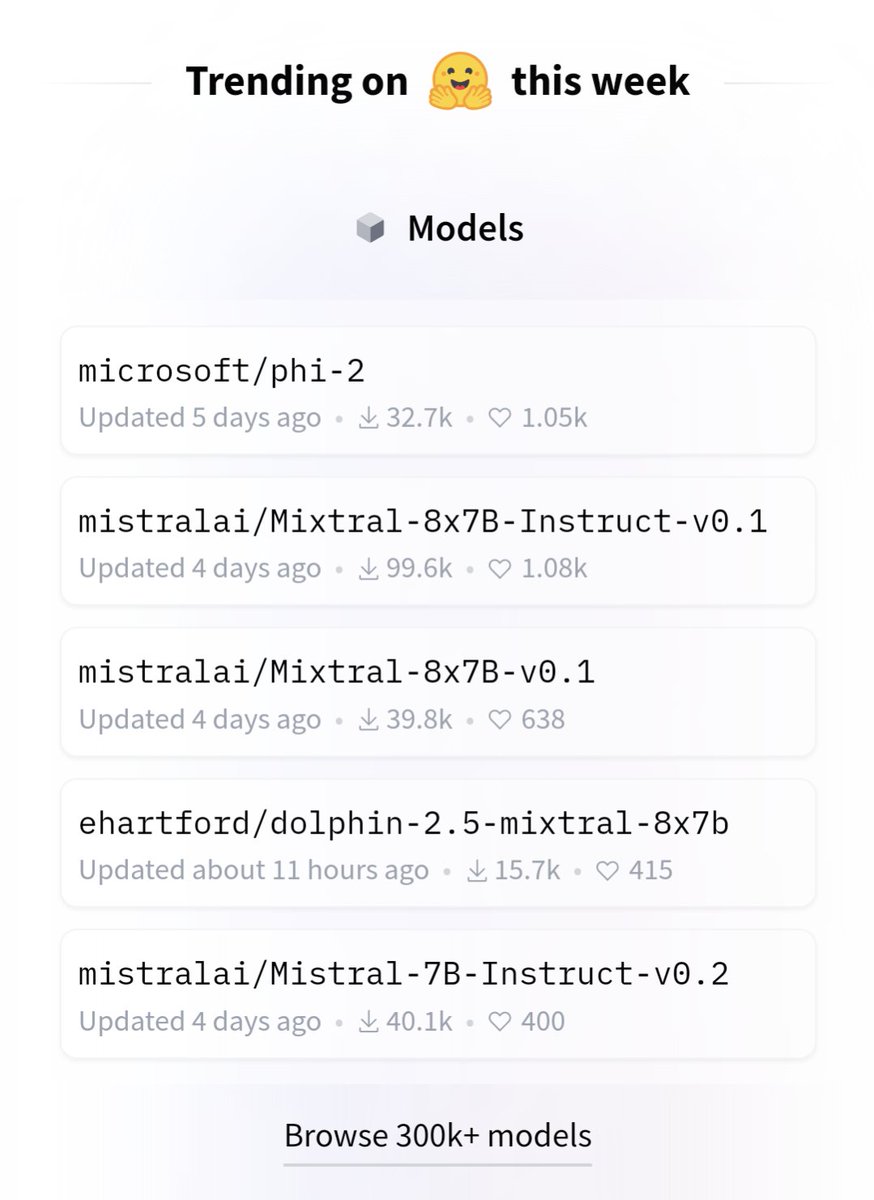

Phi-2 by Microsoft AI is now the #1 trending model on Hugging Face (hf.co/models). 2024 will be the year of smoll AI models!

We're so pumped to see phi-2 at the top of trending models on Hugging Face! Its sibling phi-1.5 already has half a million downloads. Can't wait to see the mechanistic interpretability work that will come out of this and its impact on all the important LLM research questions!

Great work, Shishir Patil and Tianjun Zhang! It still feels like yesterday when we kicked off the project. Great to see the work continue to influence the function calling space 😉