Enze Xie

@xieenze_jr

Sr. Research Scientist at NVIDIA, doing GenAI, CS PhD from HKU MMLab, interned at NVIDIA.

ID: 1723702194380427264

https://xieenze.github.io/ 12-11-2023 14:00:10

49 Tweets

769 Followers

116 Following

I tried WriteHere's Deep Research report feature, and the results were truly impressive! 🤩 Congrats, Yimeng Chen!

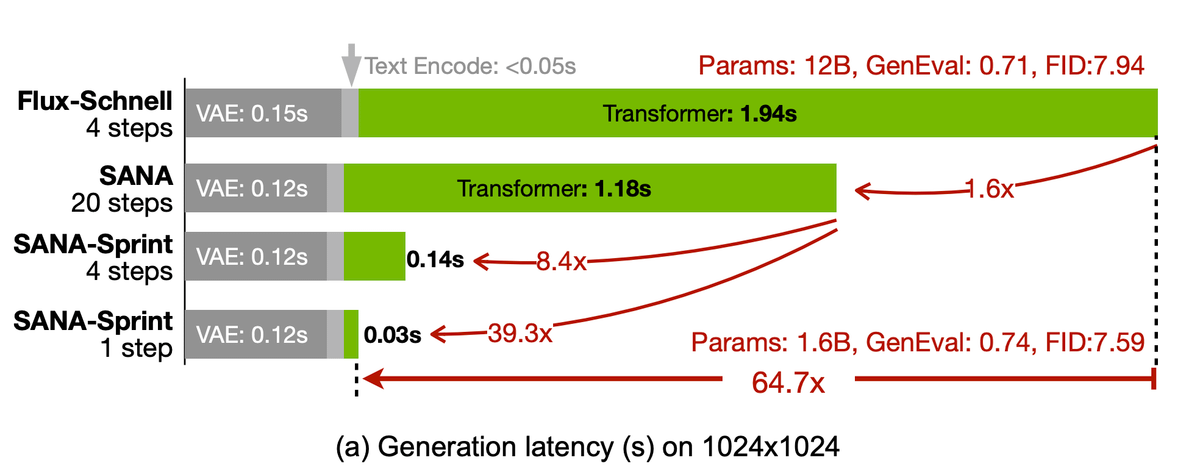

I know you have always secretly craved a cool distillation script that actually gets results. That time has come 🤯 In collaboration w/ Junsong_Chen & Shuchen Xue, we present a Diffusers-compatible training script for SANA Sprint 🏃 Links ⬇️