Jason Peng

@xbpeng4

Assistant Prof at @SFU and Research Scientist at @NVIDIA

ID: 981980649975300096

http://xbpeng.github.io 05-04-2018 19:43:26

171 Tweets

5.5K Followers

31 Following

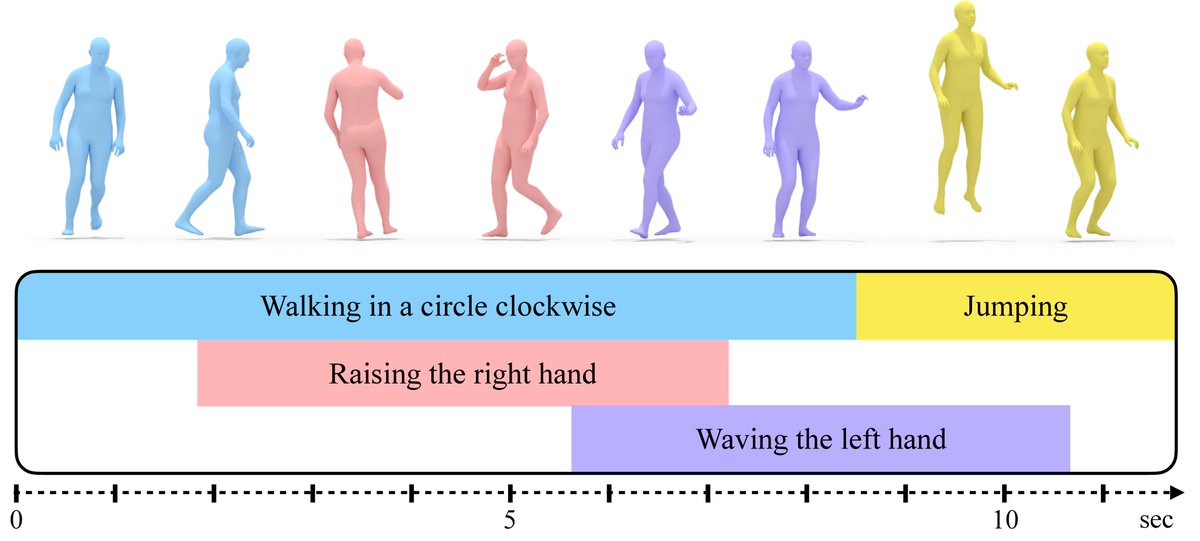

Excited to share our latest work! 🤩 Masked Mimic 🥷: Unified Physics-Based Character Control Through Masked Motion Inpainting Project page: research.nvidia.com/labs/par/maske… with: Yunrong (Kelly) Guo, Ofir Nabati, Gal Chechik and Jason Peng. SIGGRAPH Asia ➡️ Hong Kong (ACM TOG). 1/

Interested in simulated characters traversing complex terrains? PARC: Physics-based Augmentation with Reinforcement Learning for Character Controllers Project page: michaelx.io/parc/index.html with: Yi Shi, KangKang Yin, and Jason Peng ACM SIGGRAPH 2025 Conference Paper 1/

Excited to share our latest work MaskedManipulator (proc. SIGGRAPH Asia ➡️ Hong Kong 2025)! With: Yifeng Jiang, Erwin Coumans 🇺🇦, Zhengyi “Zen” Luo, Gal Chechik, and Jason Peng