Winson Peng

@winsonpeng2011

Professor of Communication @CommDeptMSU | Editor-in-Chief @HCR_Journal | Communication + Social/Mobile Media + Computational Social Science. Tweets are my own.

ID: 440645901

http://winsonpeng.github.io

19-12-2011 07:34:47

2.2K Tweets

657 Followers

281 Following

Zero-sum thinking is a key mindset that shapes how we view the world. Excited to share a new paper on the roots and consequences of zero-sum thinking with Sahil Chinoy, Nathan Nunn, and Sandra Sequeira. A summary thread🧵1/23 scholar.harvard.edu/files/stantche…

We launched a new project tracking public attitudes toward higher ed. The first report is out: we find that people trust universities and oppose funding cuts, but worry about tuition costs and free speech on campus. See edbarometer.org. With 🇺🇦 […], Matthew Baum, and Mauricio Santillana.

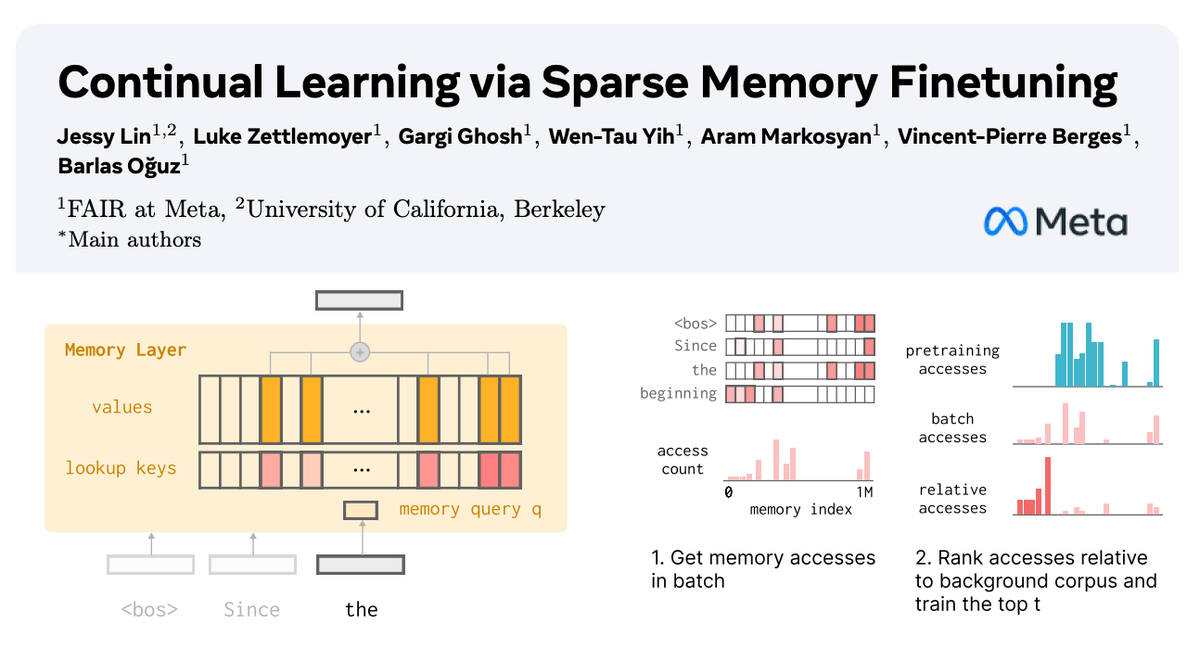

🧠 How can we equip LLMs with memory that allows them to continually learn new things? In our new paper with AI at Meta, we show how sparsely finetuning memory layers enables targeted updates for continual learning, w/ minimal interference with existing knowledge. While full