Will Brenton

@wbrenton3

ID: 3151498970

12-04-2015 14:15:25

242 Tweets

283 Followers

1.1K Following

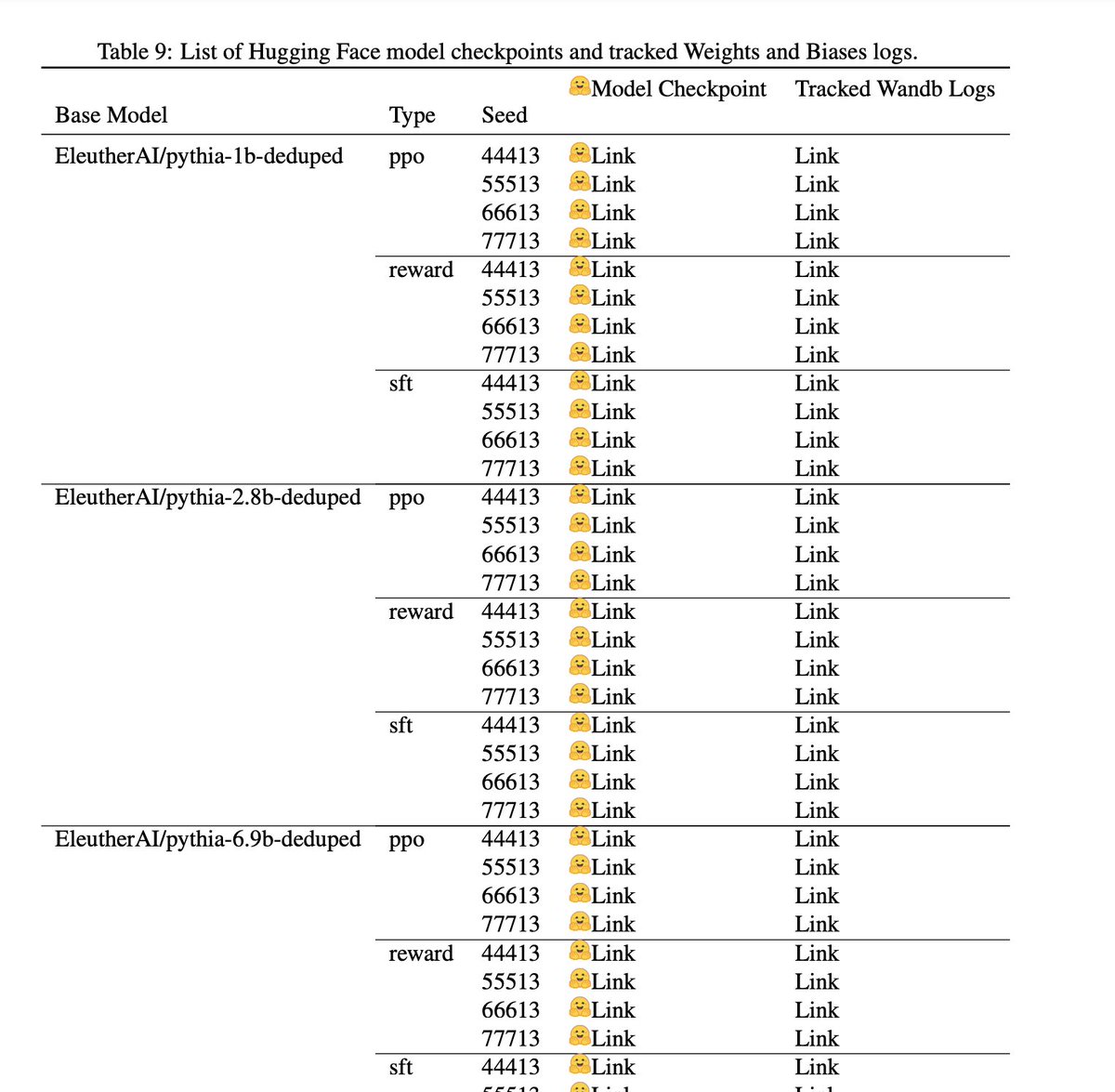

Happy to share our work on reproducing the RLHF scaling behaviors reported in OpenAI's work on summarizing from feedback. We built an RLHF pipeline from scratch and enumerated 20+ implementation details 🚀 Fun collab with Michael Noukhovitch @NeurIPS 2024, Arian Hosseini @ NeurIPS, Kashif Rasul, wang, and Lewis Tunstall 📜

Excited to share Penzai, a JAX research toolkit from Google DeepMind for building, editing, and visualizing neural networks! Penzai makes it easy to see model internals and lets you inject custom logic anywhere. Check it out on GitHub: github.com/google-deepmin…

![Andrew Carr (e/🤸) (@andrew_n_carr) on Twitter: I know it seems like Amazon is eating Anthropic, with the funding and preferred compute partnership. However, I think the sub-title here of "deep technical collaboration" on "directly interfac[ing] with Trainium silicon" to improve the story of AWS chips could actually be immense](https://pbs.twimg.com/media/GdLwiUIbQAAVefx.jpg)