Ke Wang

@wangkeml

PhD student in Machine Learning @ EPFL

ID: 1574289490008481794

http://wang-kee.github.io

26-09-2022 06:47:58

27 Tweets

57 Followers

105 Following

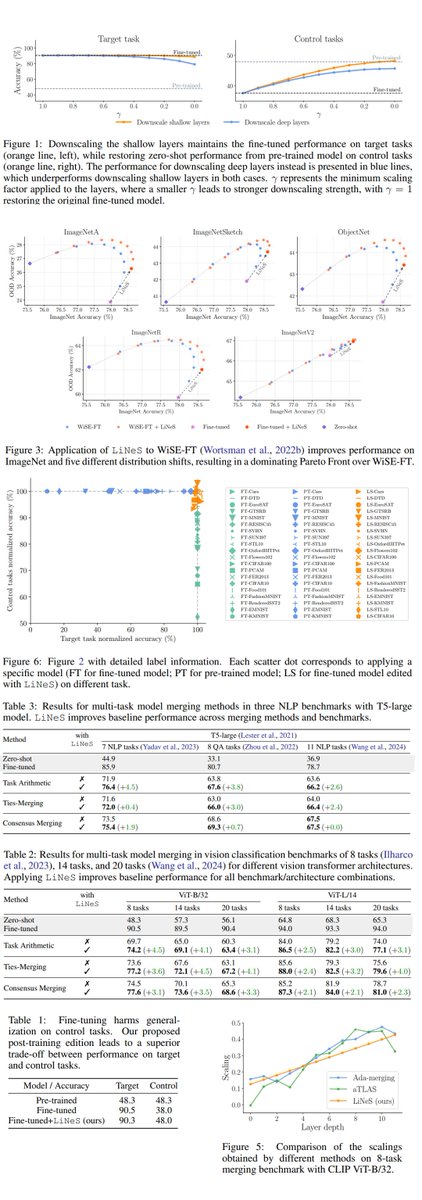

*Localizing Task Information for Improved Model Merging and Compression* by Dimitriadis Nikos @ ICLR, Ke Wang, Guillermo Ortiz-Jiménez, François Fleuret, and Pascal Frossard. Binary masks make it possible to approximately retrieve a task vector from a multi-task model, which improves performance. arxiv.org/abs/2405.07813

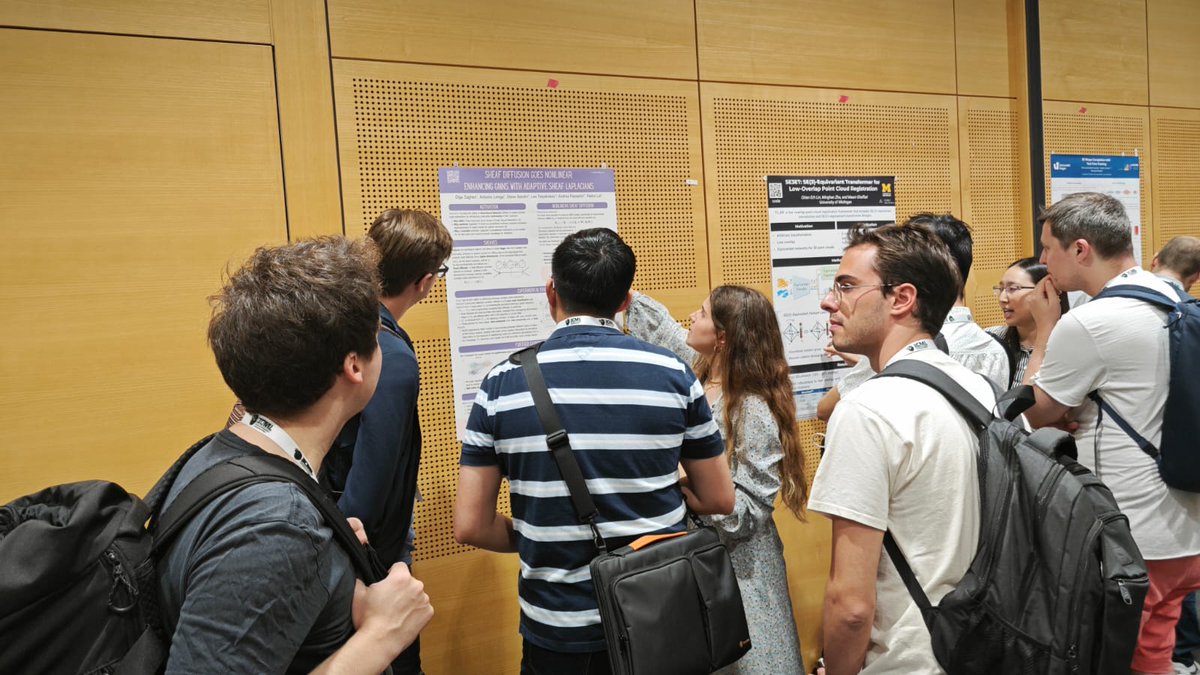

Good morning ICML 🇦🇹 Presenting today "Localizing Task Information for Improved Model Merging and Compression" with Ke Wang, Guillermo Ortiz-Jiménez, François Fleuret, and Pascal Frossard. Happy to see you at poster #2002 from 11:30 to 13:00 if you are interested in model merging & multi-task learning!

Super cool poster «Localizing Task Information for Improved Model Merging and Compression» by Ke Wang, Dimitriadis Nikos @ ICLR, and François Fleuret x.com/nikdimitriadis…

It was fun to present our work at the GRaM Workshop at ICML 2024 yesterday :) Thanks to everyone who stopped by to discuss, and thanks to the organizers for making such an inspiring workshop happen!

Excited to share this work with Ke Wang, Alessandro Favero, Guillermo Ortiz-Jiménez, François Fleuret, and Pascal Frossard! Learn more in our paper and check out the code here: 📜: arxiv.org/abs/2410.17146 💻: github.com/wang-kee/LiNeS 🧵 11/11

Mitigating racial bias in LLMs is a lot easier than removing it from humans! Can’t believe this happened at the best AI conference, NeurIPS Conference. We have ethical reviews for authors, but missed them for invited speakers? 😡

Variational Flow Matching goes Riemannian! 🔮 In this preliminary work, we derive a variational objective for probability flows 🌀 on manifolds with closed-form geodesics. My dream team: Floor Eijkelboom, Alison, Erik Bekkers 💥 📜 arxiv.org/abs/2502.12981 🧵1/5

Happy to release the code for DeFoG (github.com/manuelmlmadeir…), accepted as an ICML 2025 Spotlight Poster, with Manuel Madeira! → Efficient training & sampling → Wide dataset support & extensible dataloader → Hydra-powered CLI & WandB → Docker/Conda setup