Weilong Chen

@vollon3

PhD Student @TU_Muenchen | Previously @TheDPTechnology | @chalmersuniv | @VolvoGroup | BSc NUDT '18

🦋: weilong30.bsky.social

ID: 1103932792608481280

08-03-2019 08:17:42

28 Tweets

63 Followers

934 Following

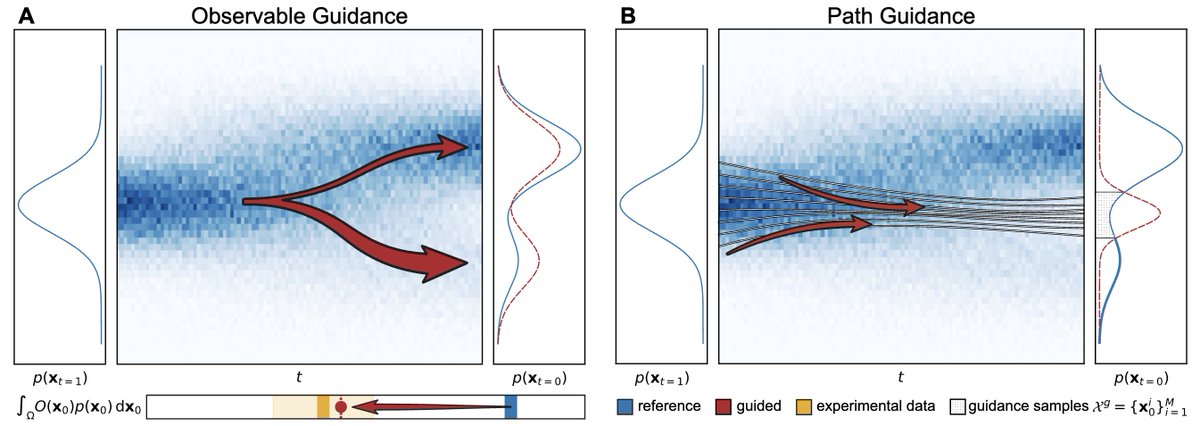

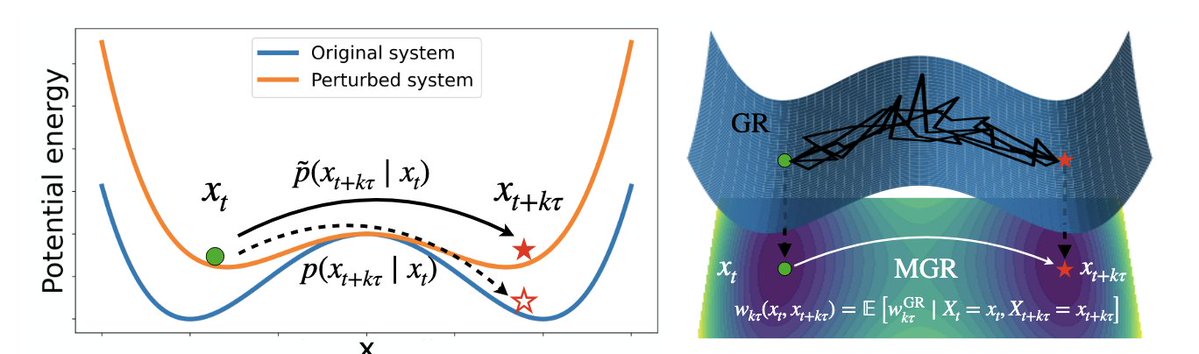

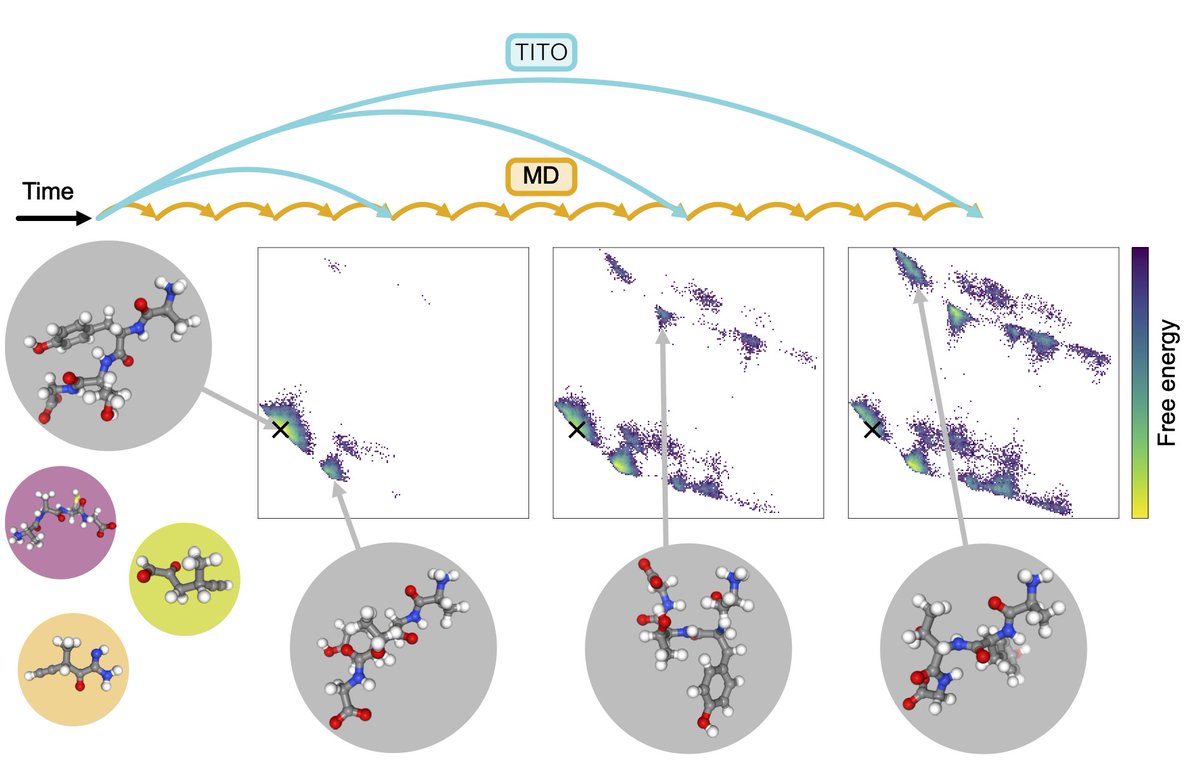

New preprint! 🚨 We scale equilibrium sampling to hexapeptides (in Cartesian coordinates!) with Sequential Boltzmann Generators! 📈 🤯 Work with Joey Bose, Chen Lin, Leon Klein, Michael Bronstein and Alex Tong. Thread 🧵 1/11

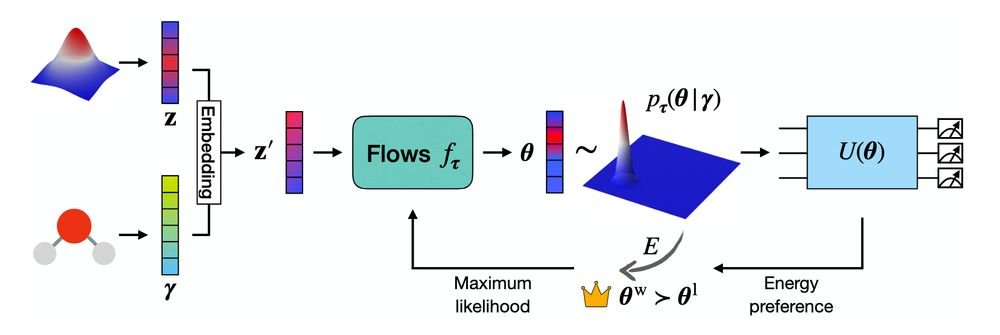

Introducing All-atom Diffusion Transformers: towards Foundation Models for generative chemistry, from my internship with the FAIR Chemistry team at AI at Meta. There are a couple of ML ideas in here that I think are new and exciting 👇

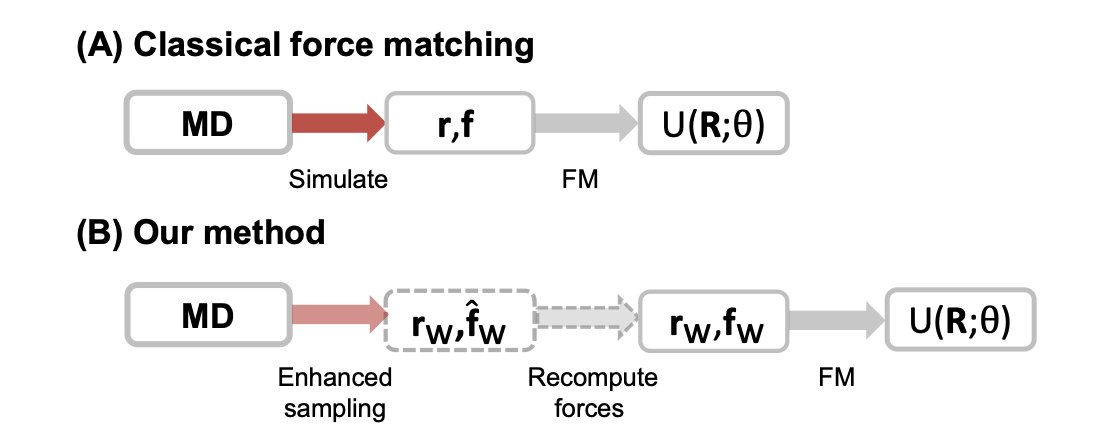

BioEmu is now published in Science Magazine!! What is BioEmu? Check out this video: youtu.be/LStKhWcL0VE?si…

This coming Tuesday (Aug 26th), Yuchen Zhu will talk about "Beyond Euclidean data: Lie group and multimodal diffusion models" 🚀 from 5pm to 6pm (UK time). Join us via Zoom: us05web.zoom.us/j/7780256206?p… See more information below 👇

MolSS is coming back after the ICLR submission 🚀 This coming Tuesday (7th Oct), Christopher Kolloff and Tobias Höppe will talk about "Minimum Excess Work" from 4pm to 5pm (UK time) 🔥 Join us via Zoom: us05web.zoom.us/j/7780256206?p…