Elizabeth Hall

@vision_beth

grad @ucdavis studying human vision and scene representations.

ID: 925335831396700160

http://elizabethhhall.com 31-10-2017 12:17:08

508 Tweets

433 Followers

411 Following

With Karan Ahuja (embedded in the lab), we worked on multidevice + sensor fusion research (his expertise!): research.google/pubs/intent-dr… And eric j gonzalez built a whole tool for multidevice prototyping, which we open-sourced: github.com/google/xdtk A space where Andrea Colaço contributed a lot too!

We have two presentations at the @NeurIPS @UniReps workshop tomorrow: 1) Willow Han will present openreview.net/forum?id=t4CnK…, and 2) Rouzbeh Meshkinnejad will present openreview.net/forum?id=fS41j…

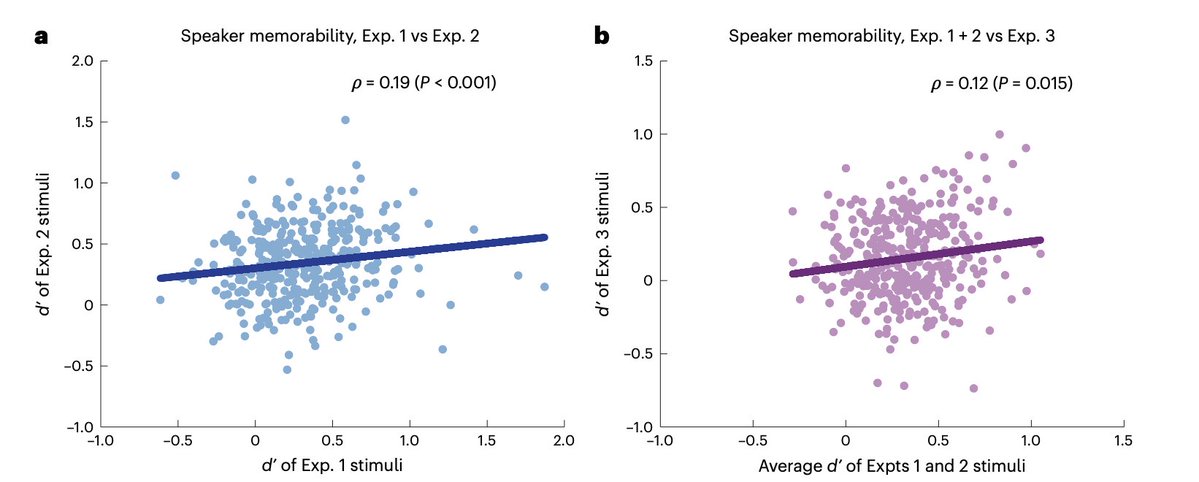

New paper out in Nature Human Behaviour! In it, Wilma Bainbridge and I find that participants tend to remember and forget the same speakers' voices, regardless of speech content. We also predict the memorability of voices from their low-level features: nature.com/articles/s4156…

We make about 3-4 fast eye movements a second, yet our world appears stable. How is this possible? In a preprint led by Luca Kämmer we test the intriguing idea that anticipatory signals in the fovea may explain visual stability. biorxiv.org/content/10.110…

I wrote a commentary on a very nice research paper that just appeared in Brain by Selma Lugtmeijer (she/her), Aleksandra Sobolewska, and Steven scholte. Spoiler: It's about modularity in mid-level vision. Here is the original paper: doi.org/10.1093/brain/… And here is my commentary: doi.org/10.1093/brain/…

Interesting work on memory: Memorability and sustained attention can be leveraged in real time to improve memory performance. #NEIfunded The University of Chicago Brady Roberts Wilma Bainbridge Monica Rosenberg Megan deBettencourt Springer Nature: bit.ly/3EoPrUA

🔊New NVIDIA paper: Audio-SDS🔊 We repurpose Score Distillation Sampling (SDS) for audio, turning any pretrained audio diffusion model into a tool for diverse tasks, including source separation, impact synthesis & more. 🎧 Demos, audio examples, paper: research.nvidia.com/labs/toronto-a…

This week, we also have the honor of hosting Ida Momennejad as our guest speaker. Thank you, Ida Momennejad!

Excited to announce that this paper is now out in Science Advances science.org/doi/10.1126/sc…

Our new study in Nature Computational Science, led by Haibao Wang, presents a neural code converter aligning brain activity across individuals & scanners without shared stimuli by minimizing content loss, paving the way for scalable decoding and cross-site data analysis. nature.com/articles/s4358…