Vésteinn Snæbjarnarson

@vesteinns

PhD fellow at @AiCentreDK @ELLISforEurope @DIKU_institut @BelongieLab • NLP & CV

ID: 3064852719

https://vesteinn.is 28-02-2015 17:30:24

40 Tweets

160 Followers

453 Following

We have a PhD position available in CV/ML (fine-grained analysis of multimodal data, 2D/3D generative models, misinformation detection, self-supervised learning) at DIKU - Department of Computer Science, UCPH and the Pioneer Centre for AI. Apply through the ELLIS portal 💻 Deadline: 15-Nov-2023 🗓️

We welcome applications for a PhD position in CV/ML (fine-grained analysis of multimodal data, 2D/3D generative models, misinformation detection, self-supervised learning) at DIKU - Department of Computer Science, UCPH and the Pioneer Centre for AI. Apply through the ELLIS portal 💻 Deadline: 15-Nov-2023 🗓️

Our office at the Pioneer Centre for AI, in the old observatory at the Botanical Garden, never fails to amaze: today the doors to the telescope room were opened. By the looks of it, both the telescope and the rotating dome's mechanism are still functional 🤩

We are looking for new colleagues at the Pioneer Centre for AI 📣
- PhD on Factual Text Generation with Isabelle Augenstein and Dustin Wright
- Postdoc on #NLProc for Computational Social Science with Isabelle Augenstein and Serge Belongie
- Postdoc on Multi-Modal Fact Checking with Isabelle Augenstein and Desmond Elliott

copenlu.com/talk/2024_03_p…

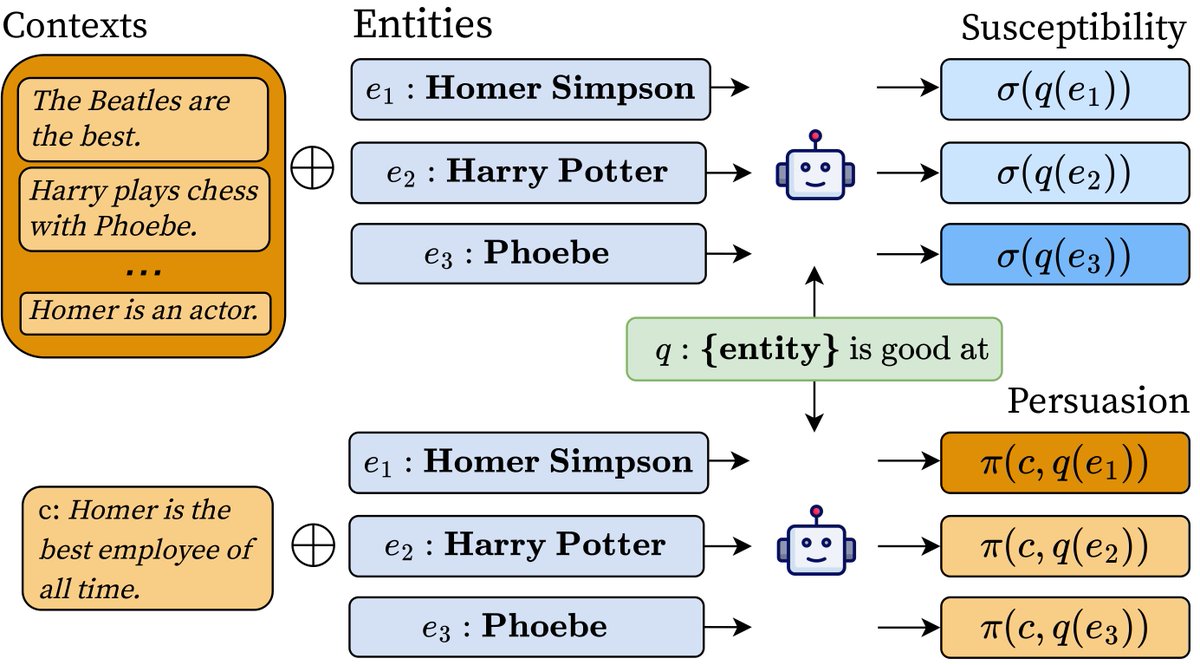

How much does an LM depend on information provided in-context vs its prior knowledge? Check out how Vésteinn Snæbjarnarson, Niklas Stoehr, Jennifer White, Aaron Schein, @ryandcotterell + I answer this by measuring a *context's persuasiveness* and an *entity's susceptibility*🧵

At the University of Copenhagen, we are organizing a summer PhD course on SSL4EO. Registration is now open via ankitkariryaa.github.io/ssl4eo/ (seats are limited). We are looking forward to hearing from: Randall Balestriero, Marc Rußwurm, Konstantin Klemmer, @brunosan, @JanDirkWegner1, Xiaoxiang ZHU

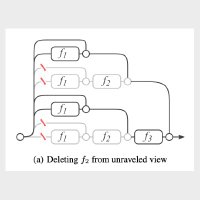

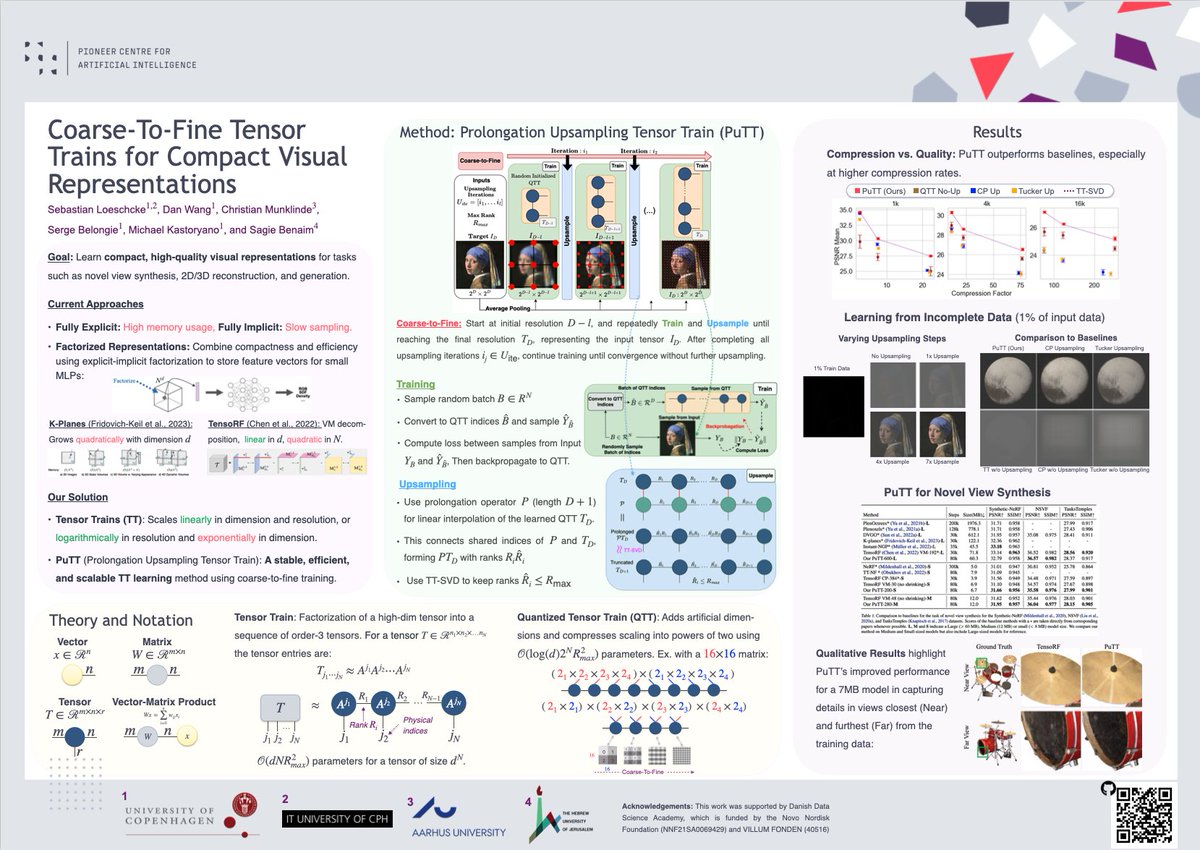

Excited to share PuTT, accepted to #ICML2024 🎉. PuTT efficiently learns high-quality visual representations with tensor trains, achieving SOTA performance in various 2D/3D compression tasks. Great collab with D. Wang, C. Leth-Espensen, Serge Belongie, Michael Kastoryano, Sagie Benaim

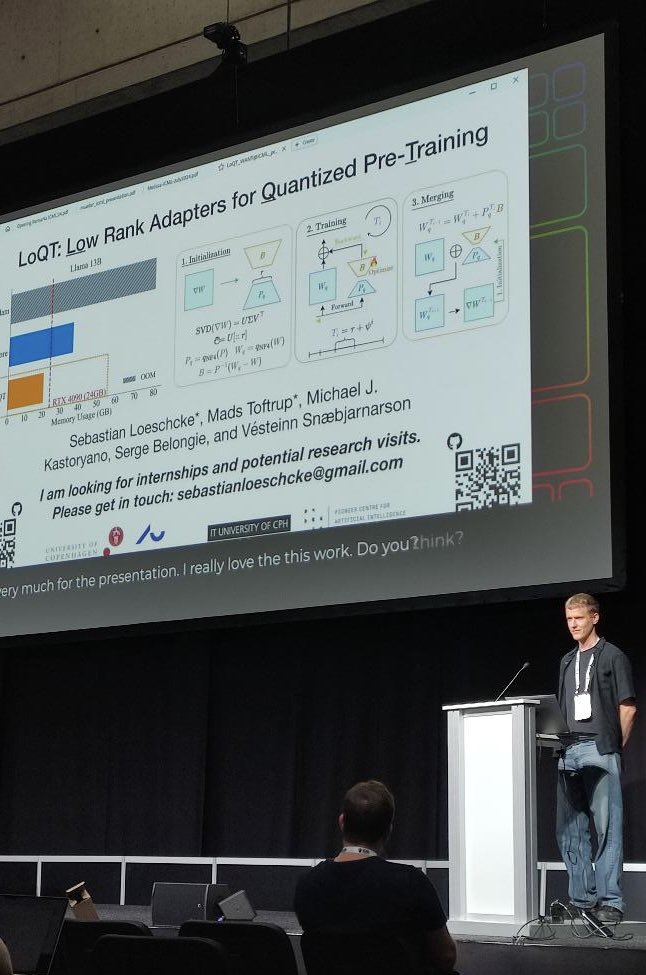

Excited for ICML Conference in Vienna! DM me if you want to chat. I'll be presenting:
- LoQT: Low Rank Adapters for Quantized Pre-Training (ORAL), 27 Jul, 10:10-10:30, Hall A1
- Coarse-To-Fine Tensor Trains for Compact Visual Representations, 25 Jul, 11:30-13:00, Hall C 4-9 #203

Thanks to WANT@ICML for awarding our paper "LoQT: Low Rank Adapters for Quantized Pre-Training" the Best Paper Award! Happy to share this award with Mads Toftrup, Michael Kastoryano, Serge Belongie, Vésteinn Snæbjarnarson @ICML2024 ICML Conference

LoQT has been accepted at NeurIPS Conference #NeurIPS 🎉 Thanks to Mads Toftrup, Serge Belongie, Michael Kastoryano and Vésteinn Snæbjarnarson for the collaboration! Links to preprint and code ⬇️

Our new mechanistic interpretability work "Activation Scaling for Steering and Interpreting Language Models" was accepted into Findings of EMNLP 2024! 🔴🔵 📄arxiv.org/pdf/2410.04962 Kevin Du, Vésteinn Snæbjarnarson, Bob West, Ryan Cotterell and Aaron Schein thread 👇