Quankai Gao

@uuuuusher

CS PhD student in computer vision & computer graphics @USC | Adobe Intern | Student Researcher @Google

ID: 1497780997693259777

http://Zerg-Overmind.github.io 27-02-2022 03:50:14

101 Tweets

169 Followers

378 Following

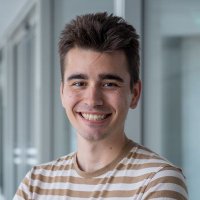

📢 [New Paper] DMesh++: An Efficient Differentiable Mesh for Complex Shapes

✍️ Authors: Sanghyun Son, @gadelha_m, Yang, Matthew Fisher, Zexiang Xu, Yiling Qiao, Ming Lin, Yi Zhou

🔗 Arxiv: arxiv.org/abs/2412.16776

🔗 Page: sonsang.github.io/dmesh2-project/

More details👇

![Sanghyun Son (@sanghyunson) on Twitter: photo for the DMesh++ paper announcement](https://pbs.twimg.com/media/Ggp4dw1XgAA776k.jpg)