Jingfeng Wu

@uuujingfeng

Bsky: bsky.app/profile/uuujf.…

Postdoc @SimonsInstitute @UCBerkeley; alumnus of @JohnsHopkins @PKU1898; DL theory, opt, and stat learning.

ID: 1933510801

https://uuujf.github.io 04-10-2013 07:50:15

98 Tweets

1.1K Followers

1.1K Following

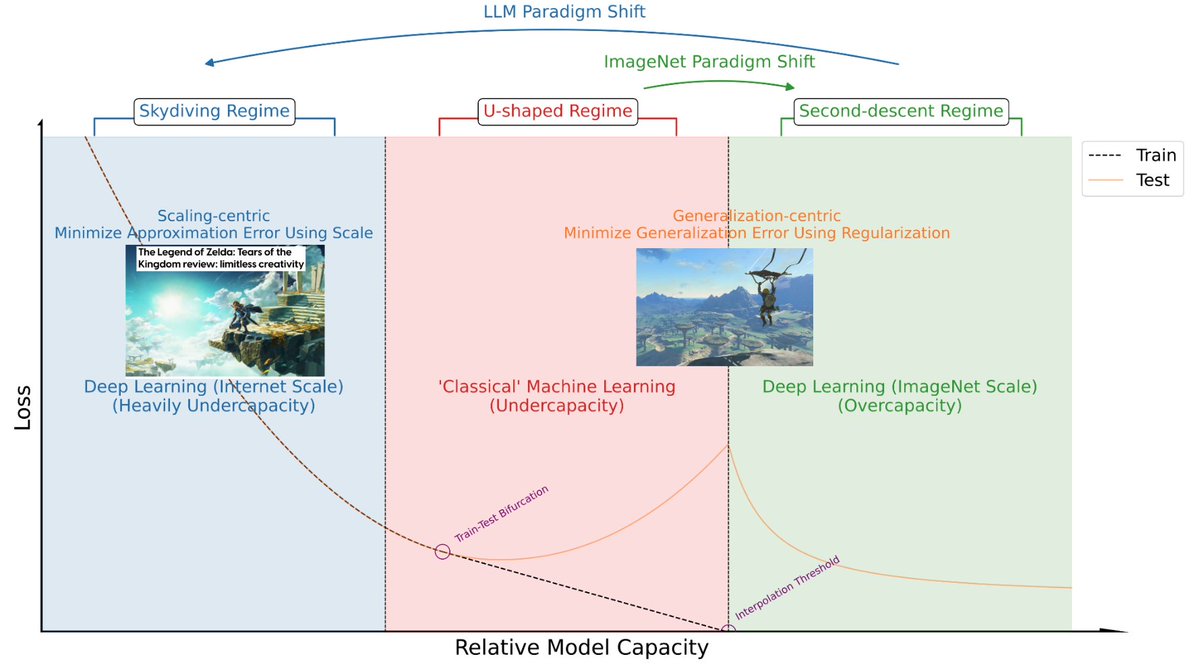

What’s the role of the MLP layer in a transformer block? It’s intuitive to think that the MLP component helps reduce the approximation error, and our new paper confirms this theoretically! arxiv.org/abs/2402.14951 Joint work with Jingfeng Wu and Peter L. Bartlett

My co-author Rebecca Barter and I are thrilled to announce the online release of our MIT Press book "Veridical Data Science: The Practice of Responsible Data Analysis and Decision Making" (vdsbook.com), an essential source for producing trustworthy data-driven results.

Jingfeng Wu I don't have deep enough knowledge of the history of ML to answer this definitively, so I'll just give you my very personal point of view. I entered the ML field at the peak of the SVM era. At that time, people tended to use theory as a way to design algorithms.