Uri Shaham

@uri_shaham

Research Scientist at Google Research

ID: 1186248407939244033

21-10-2019 11:51:34

156 Tweets

421 Followers

243 Following

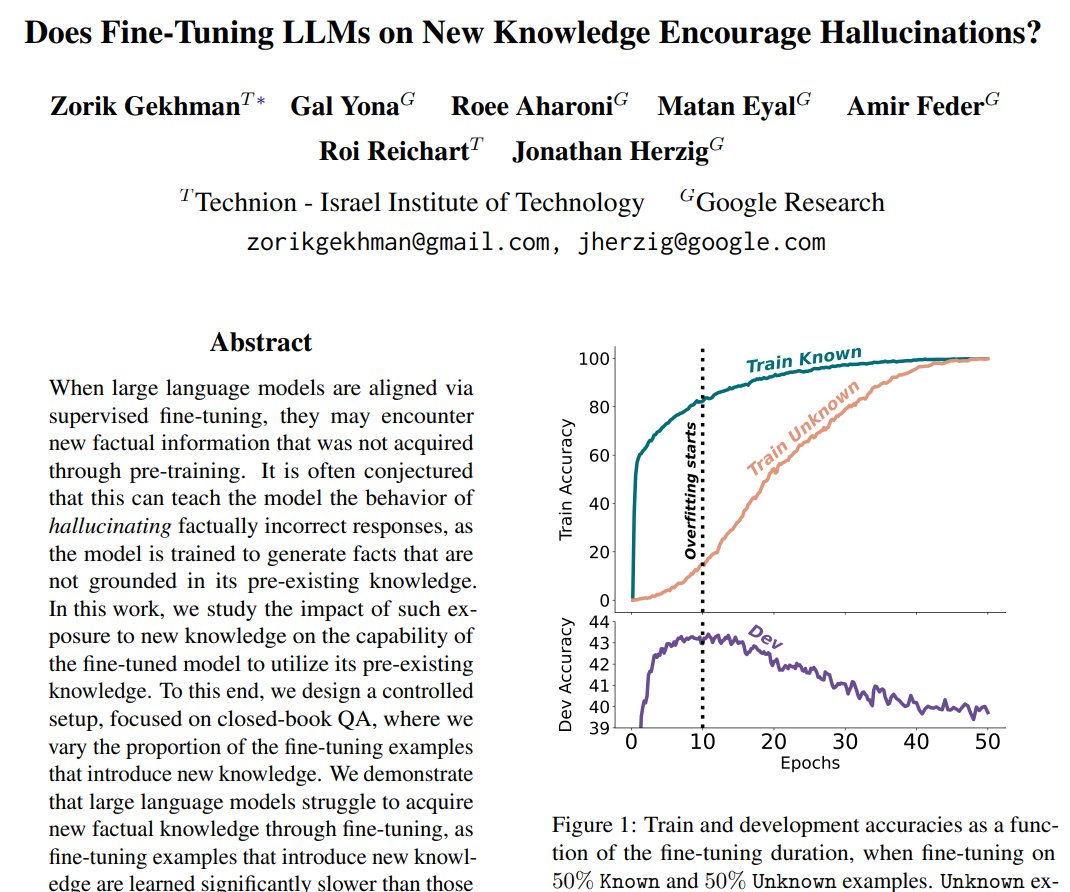

Do you have a "tell" when you are about to lie? We find that LLMs have “tells” in their internal representations which allow estimating how knowledgeable a model is about an entity 𝘣𝘦𝘧𝘰𝘳𝘦 it generates even a single token. Paper: arxiv.org/abs/2406.12673… 🧵 Daniela Gottesman

New models have an amazingly long context. But can we actually tell how well they deal with it? 🚨🚨NEW PAPER ALERT🚨🚨 with Alon Jacovi, Aviv Slobodkin, Aviya Maimon, Ido Dagan and Reut Tsarfaty arXiv: arxiv.org/abs/2407.00402… 1/🧵