matt turk

@turkmatthew

Data Science @cleanlabAI. Prev: Investing & ML @goodwatercap, Quant/ML @coinbase & @goldmansachs, prop trading, EECS @ucberkeley

ID: 517916836

https://signal.nfx.com/investors/matt-turk 07-03-2012 20:35:17

1.1K Tweets

611 Followers

1.1K Following

Tomorrow I'm spilling the secrets of how several Fortune 500 @cleanlabai customers are solving the hardest problem in AI (producing accurate, compliant, and safe fully automated AI Agent responses) at the AI User Group Conference in SF. Stop by and get your hands dirty and

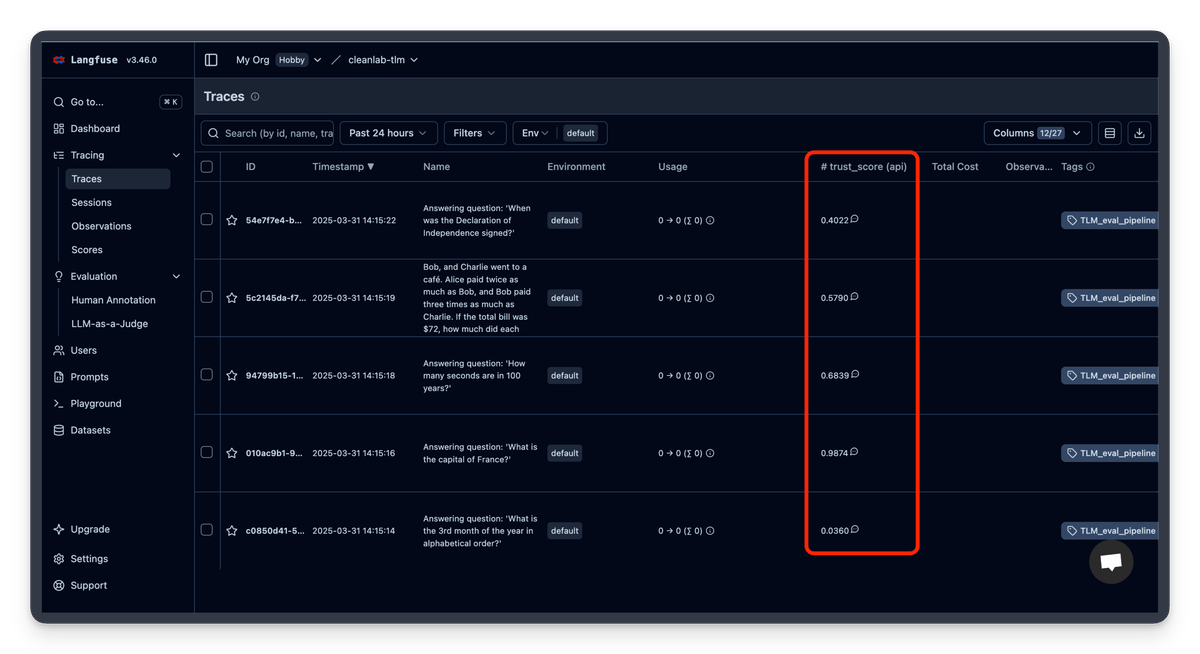

Cleanlab is now integrated into langfuse.com's observability platform! We're adding real-time trust scores to LLM outputs to quickly surface the most problematic responses for Langfuse users.

You can now use Cleanlab with LlamaIndex 🦙 to make your production AI agents trustworthy and pinpoint the root cause of untrustworthy responses (knowledge gap/poor retrieval, bad data, hallucination, etc.)