Timothy Baker

@ts_baker

#BlackLivesMatter. Passionless scribbler. Husband @larissa_kb MD | Founder @sudocode_ai YC S23 | Alum @toasttab | History @harvard '13

ID:1375700467

23-04-2013 23:19:49

4.0K Tweets

382 Followers

1.6K Following

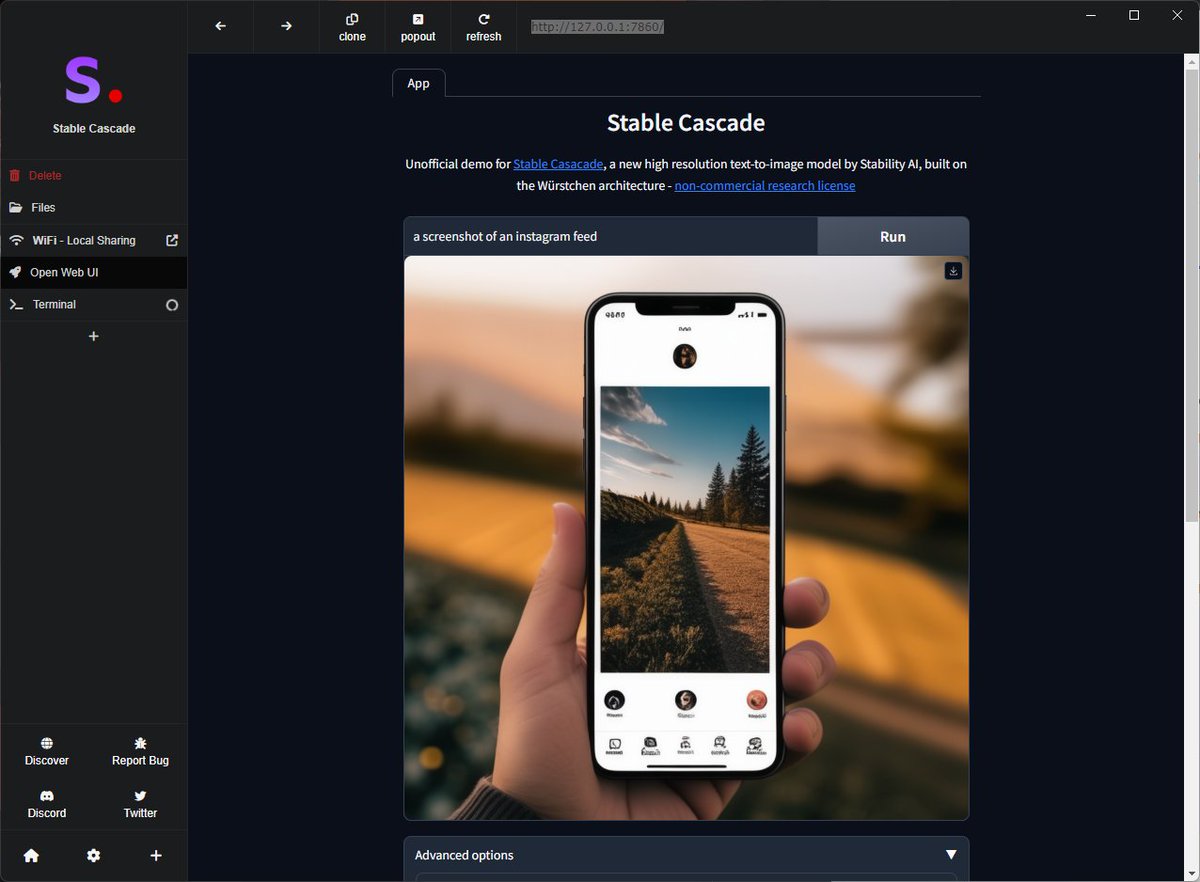

I am so excited to tell the world about what we've been working on for the last few months. World, say hello to Bestever AI — GenAI tool for image & video ads.

The easiest way to generate creatives for campaigns is here.

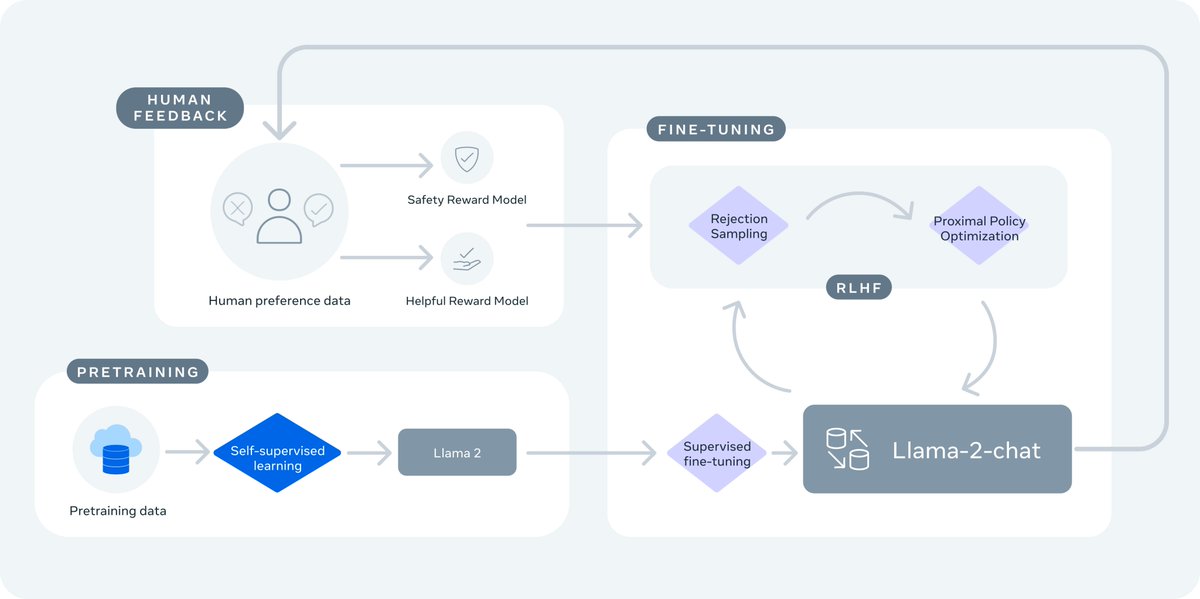

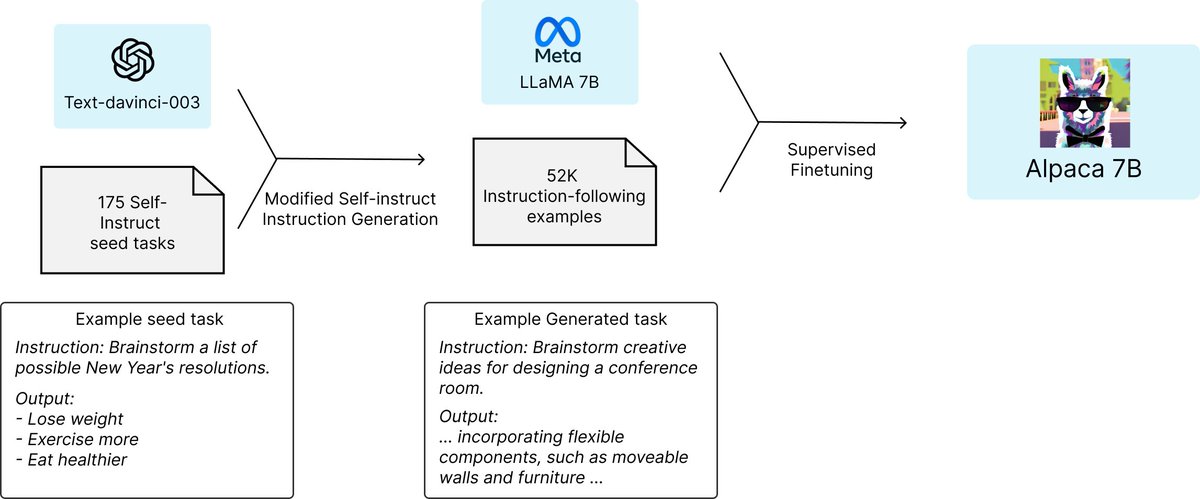

Amjad Masad ChatGPT is cheaper than Davinci mostly because the model is smaller.

Training over more data helps, but training over a probability distribution (via RL, Gumbel-softmax, or knowledge distillation) is much better than noisy next-word training. This has gone unnoticed.

x.com/_ayushkaushal/…