Yong Zheng-Xin (Yong)

@yong_zhengxin

🎯 reasoning and alignment

🌎 making LLMs safe and helpful for everyone

📍 phd @BrownCSDept + research @AIatMeta @Cohere_Labs

ID: 955679485273153537

https://yongzx.github.io/ 23-01-2018 05:51:59

553 Tweets

1.1K Followers

1.1K Following

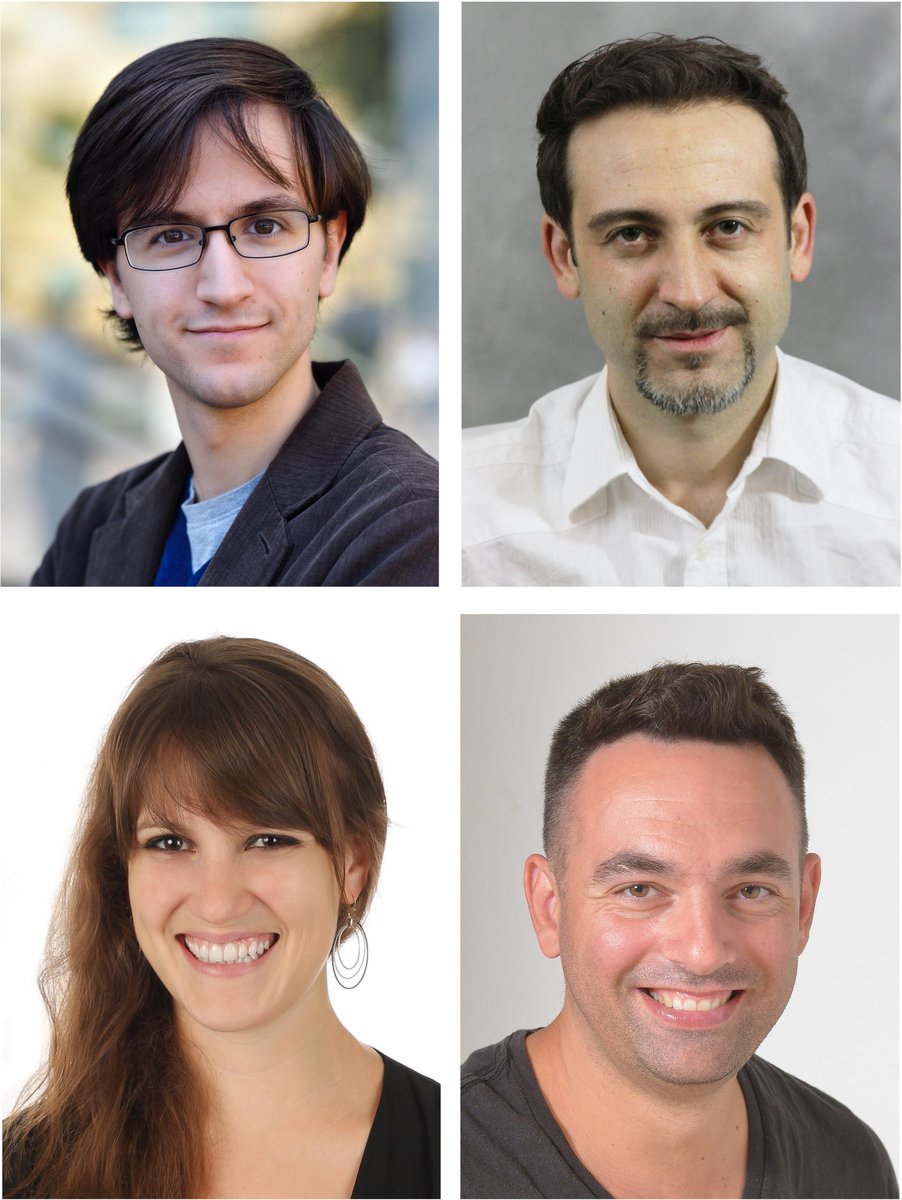

Work led by Yong Zheng-Xin (Yong) with collaborators farid, Jonibek Mansurov, Ruochen Zhang, Niklas Muennighoff, Carsten Eickhoff, Julia Kreutzer, Genta Winata, Stephen Bach, and Alham Fikri Aji. 📜 Paper link: arxiv.org/abs/2505.05408

It has been such a great experience collaborating with Julia from Cohere Labs! Come check out our new work on how test-time scaling of English-centric models improves crosslingual reasoning 🔥 📜 arxiv.org/abs/2505.05408

In 2022, with Yong Zheng-Xin (Yong) & team, we showed that models trained to follow instructions in English can follow instructions in other languages. Our new work below shows that models trained to reason in English can also reason in other languages!

⭐️ Reasoning LLMs trained on English data can think in other languages. Read our paper to learn more! Thank you Yong Zheng-Xin (Yong) for leading the project and team! It was an exciting collab! farid, Jonibek Mansurov, Ruochen Zhang, Niklas Muennighoff, Carsten Eickhoff, Julia Kreutzer

Congratulations to Brown CS faculty members Stephen Bach, Ugur Çetintemel, Ellie Pavlick, and Nikos Vasilakis, who have received Brown University's OVPR Seed Award and Salomon Faculty Research Award honors! Learn more about their work at Brown CS News: cs.brown.edu/news/2025/05/2…