Weiyu Liu

@weiyu_liu_

Postdoc @Stanford. I work on semantic representations for robots. Previously PhD @GTrobotics

ID: 1451625932171665409

http://weiyuliu.com/ 22-10-2021 19:06:20

62 Tweets

973 Followers

460 Following

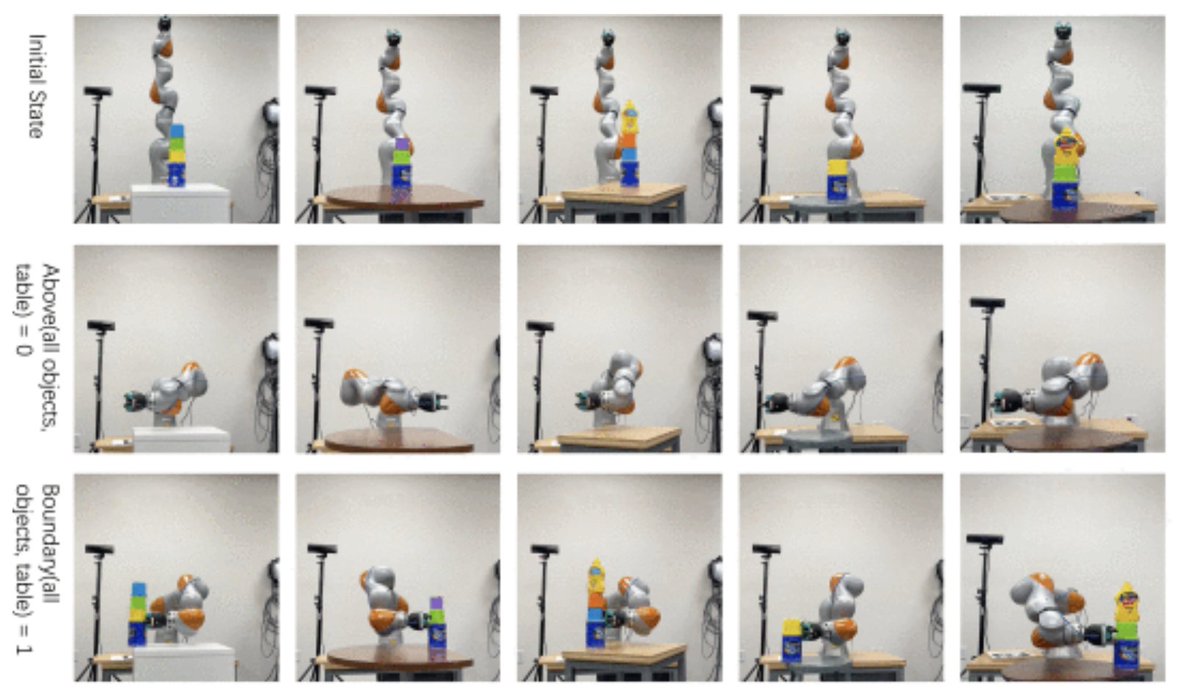

A T-RO paper by researchers from the University of Utah Robotics Center, NVIDIA, HRL Laboratories, and Georgia Tech describes a #robot that can rearrange novel objects in diverse environments to satisfy logical goals by flexibly combining primitive actions, including pick, place, and push. ieeexplore.ieee.org/document/10418…

How can we extract spatial knowledge from VLMs to generate 3D layouts? We combine spatial relations, visual markers, and code into a unified representation that is both interpretable by VLMs and flexible enough to generate diverse scenes. Check out the detailed post by Fan-Yun Sun!

Today is the day! Join us at the #CVPR2025 workshop on Foundation Models meet Embodied Agents! 🗓️Jun 11 📍Room 214 🌐…models-meet-embodied-agents.github.io/cvpr2025/ Looking forward to learning from our wonderful speakers Jitendra Malik, Ranjay Krishna, Katerina Fragkiadaki, Shuang Li, and Yilun Du!

Thank you for sharing our work, Y Combinator! Please check out vernerobotics.com to schedule a free pilot and see how our robots can transform your business! Thank you to my co-founder Aditya, my research advisors, colleagues, friends, and family for your support!