V. Antonopoulos

@v_antonop

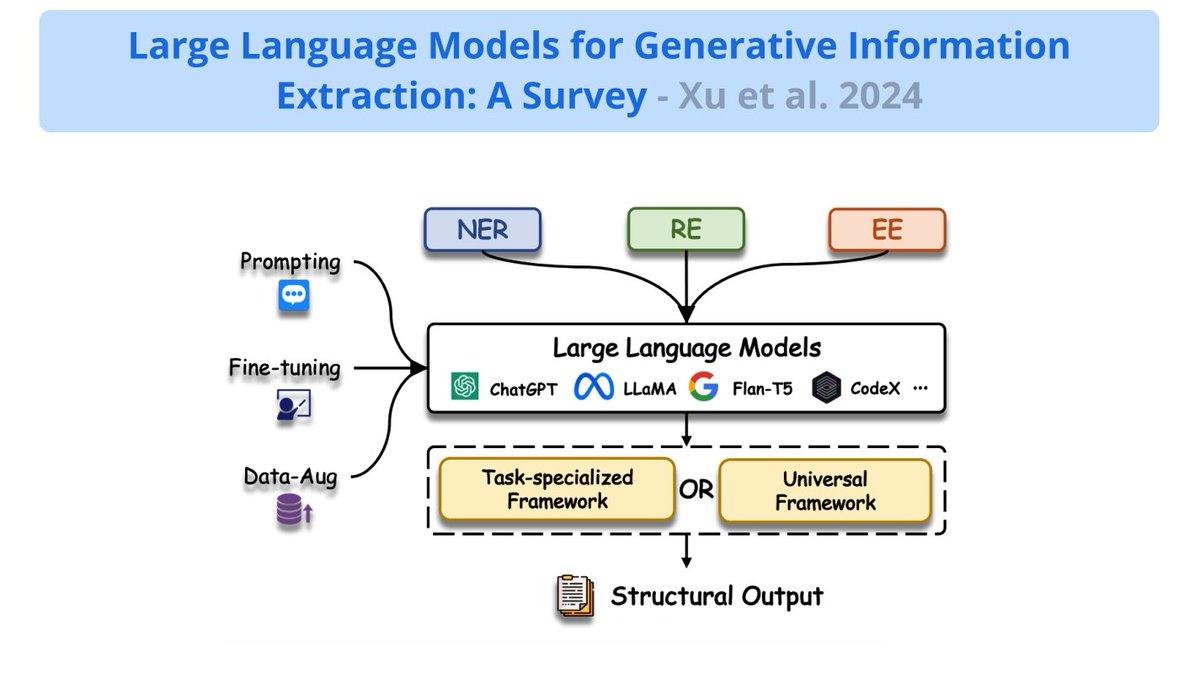

Co-founder of 11tensors.com, a pioneering AI startup at the forefront of knowledge extraction from diverse sources. LLM and Deep Learning model training.

ID: 84534940

https://11tensors.com 23-10-2009 08:04:04

384 Tweets

262 Followers

766 Following

🙌A nice blog from 11tensors V. Antonopoulos that gives the hands-on practice to build AI Agent: Intel NeuralChat-7B LLM performs very well and can replace Open AI Function Calling to build your AI Agent! 🎯medium.com/11tensors/conn… #iamintel #intelai @intelai Huma Abidi Wei Li

Fine-tune a Mistral-7b model using Direct Preference Optimization (DPO). Just published a tutorial on Towards Data Science about using DPO to enhance the performance of SFT models. Funnily enough, I created NeuralHermes-2.5 for this article. towardsdatascience.com/fine-tune-a-mi…

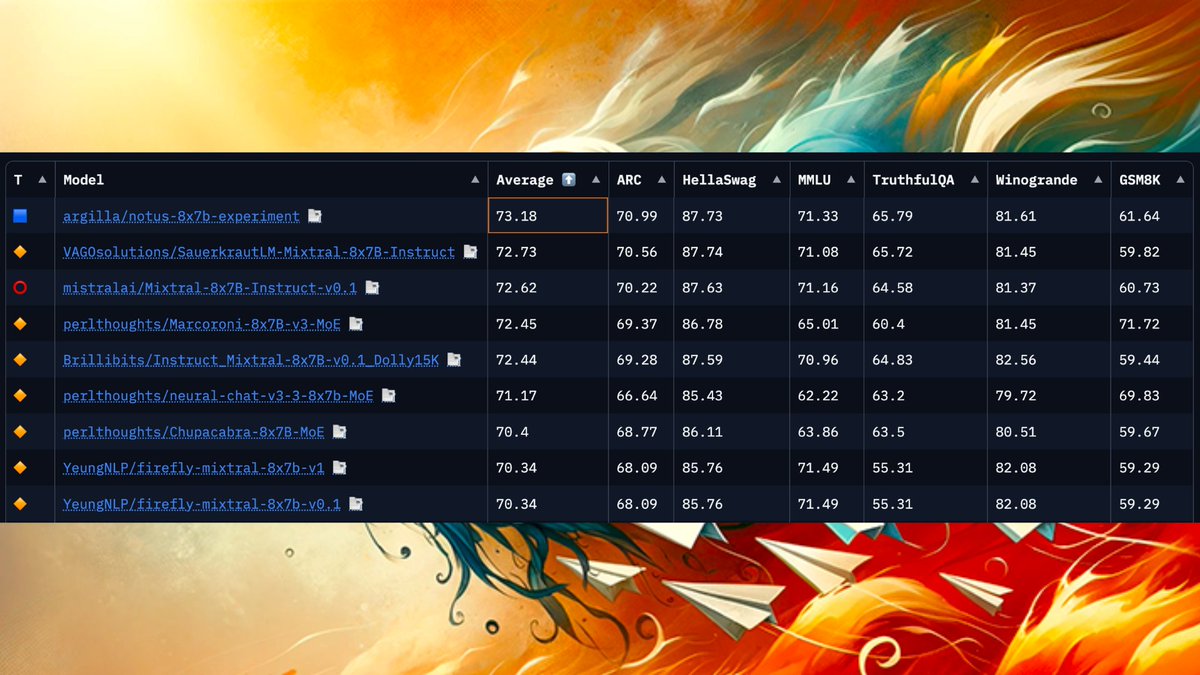

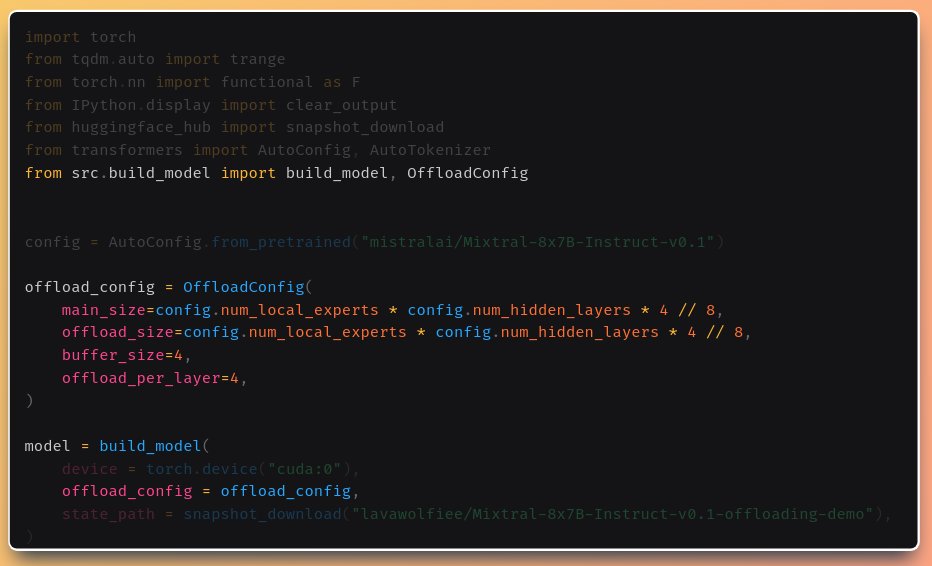

NEW PAPER: Mixtral of Experts 🧡arxiv.org/abs/2401.04088 Check out how Mixtral 8x7B works! We are also hosting an office hour on Mistral AI Discord tomorrow. Join us if you have any questions about Mistral models or la plateforme: discord.gg/mistralai

[Arena] Exciting update! Mistral Medium has gathered 6000+ votes and is showing remarkable performance, reaching the level of Claude. Congrats Mistral AI! We have also revamped our leaderboard with more Arena stats (votes, CI). Let us know any thoughts :) Leaderboard

![lmsys.org (@lmsysorg) Arena leaderboard](https://pbs.twimg.com/media/GDezVK7aUAAlDdq.jpg)