Stanford NLP Group

@stanfordnlp

Computational Linguists—Natural Language—Machine Learning @chrmanning @jurafsky @percyliang @ChrisGPotts @tatsu_hashimoto @MonicaSLam @Diyi_Yang @StanfordAILab

ID: 118263124

https://nlp.stanford.edu/ 28-02-2010 03:28:05

13,13K Tweets

163,163K Followers

273 Following

I always found it puzzling how language models learn so much from next-token prediction, while video models learn so little from next-frame prediction. Maybe it's because LLMs are actually brain scanners in disguise. Idle musings in my new blog post: sergeylevine.substack.com/p/language-mod…

General User Models by Omar Shaikh might just be the coolest thing ever. SO MUCH looking forward to messing w the MCP

Language as a Visual Format talk by Phillip Isola. (These slides are a summary of the intro. The main content is about cycle consistency between images and captions improving text-to-image and image-to-text. Uses DPO! See quoted thread. 🧵👇)

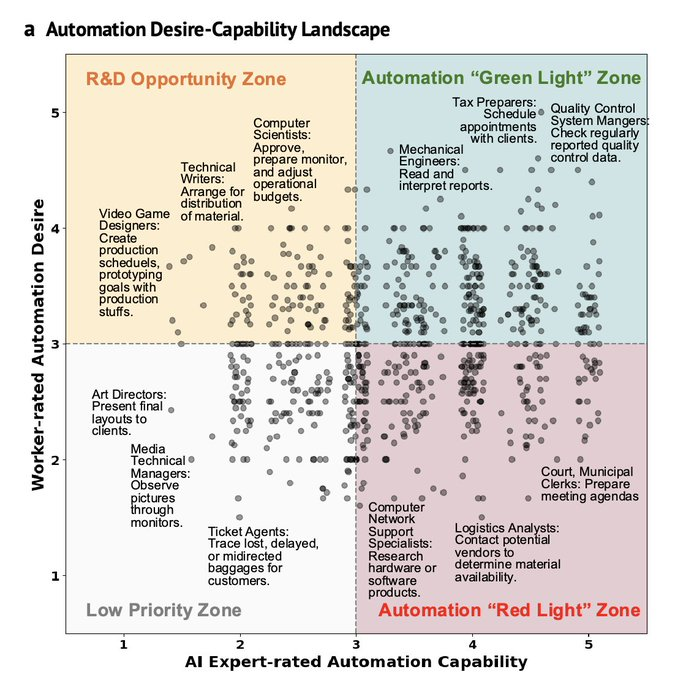

Fascinating new AI agent research from Erik Brynjolfsson + colleagues: Looking at work tasks that are painful to do vs ones that are fulfilling and fun. How do they line up with the tasks that most AI agents are soon poised to automate? It identifies a key new opportunity quadrant.

Priyanshu Priyank tanisha If you are an absolute beginner, stick to the OG: Andrew Ng's Machine Learning Specialization and Deep Learning Specialization. For NLP, Stanford CS224N is a goldmine. Follow research papers; if they seem difficult, try to find blogs from the authors (they often have those).