Ron Green

@rgreenjr

AI Expert • Co-Founder & CTO KUNGFU.AI • 🎧 Host of “Hidden Layers: AI and the People Behind It”

ID: 13224822

http://www.kungfu.ai 07-02-2008 22:43:47

1.1K Tweets

251 Followers

580 Following

Really happy to share our new paper on using AlphaEvolve for mathematical exploration at scale, written with Javier Gómez-Serrano, Terence Tao, and Google DeepMind's Bogdan Georgiev. We tested it on 67 problems and documented all our successes and failures. 🧵

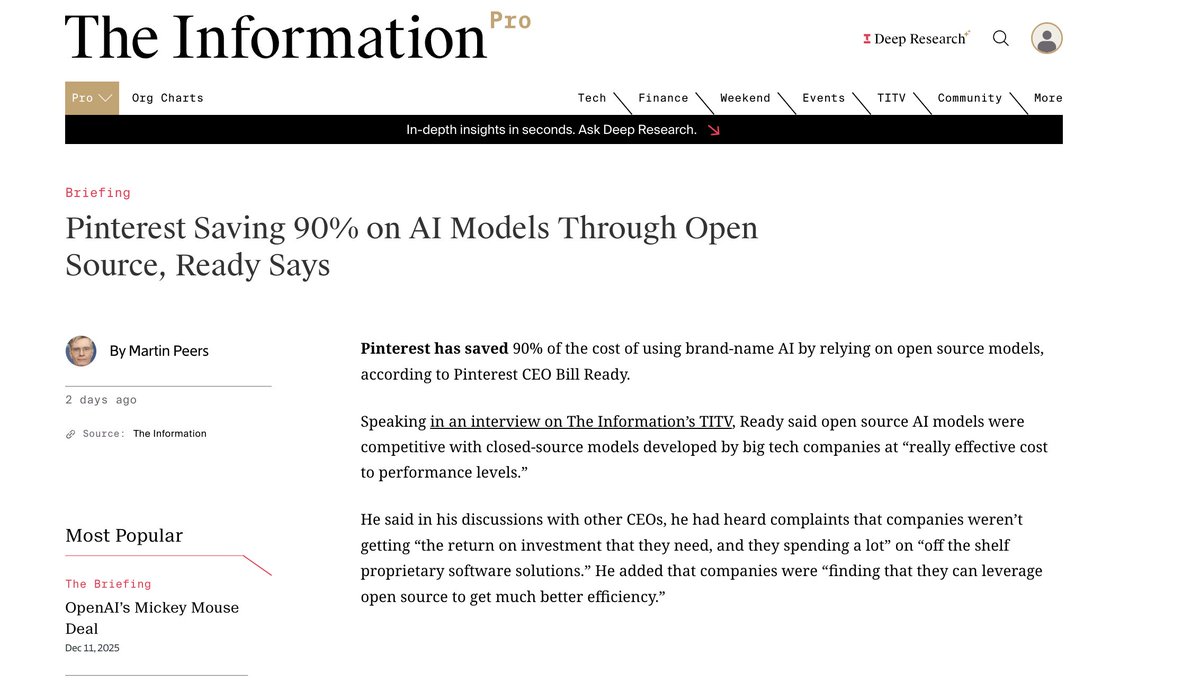

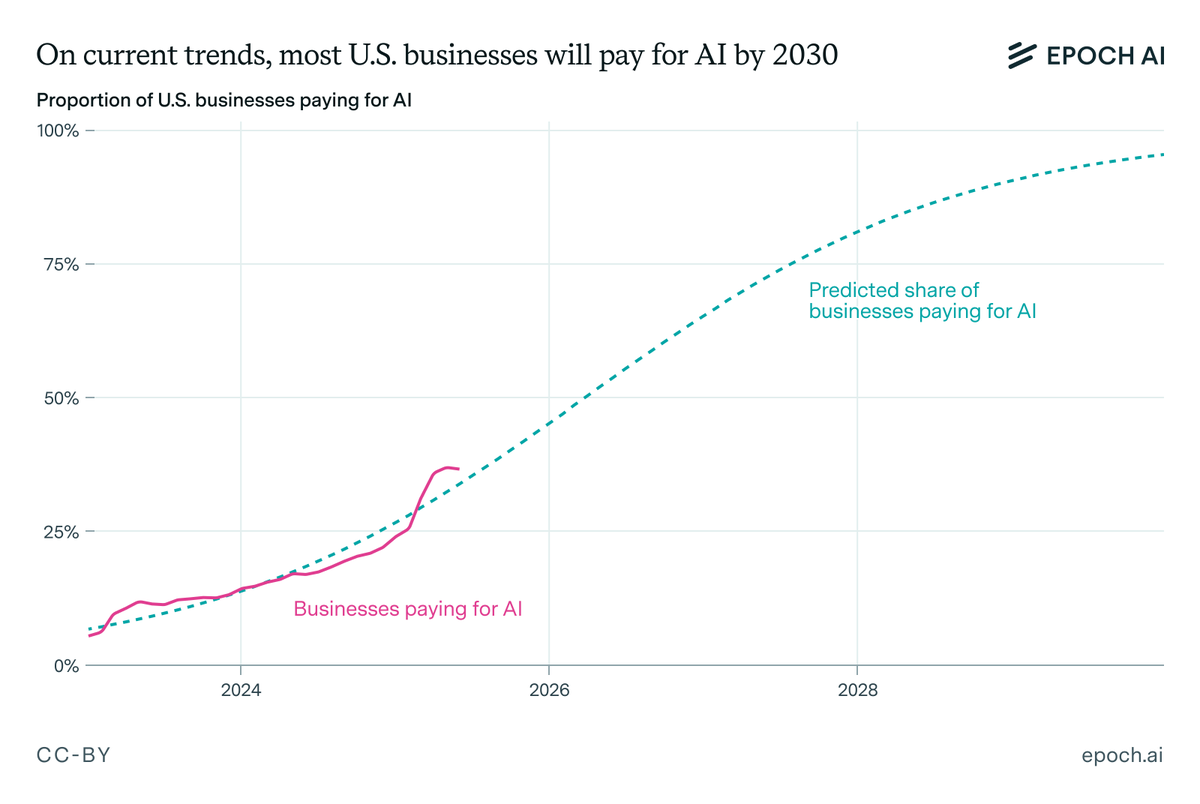

Personally, it feels like we've reached the peak of "Proprietary APIs" and that we're entering a much more balanced world for AI where open-source, training, Hugging Face (and other players) will start getting a much bigger share of the attention, usage and revenue. Let's go!