Princeton NLP Group

@princeton_nlp

Princeton NLP Group led by @prfsanjeevarora @danqi_chen @karthik_r_n

ID: 1297858144861925379

http://nlp.cs.princeton.edu 24-08-2020 11:27:54

250 Tweets

4.4K Followers

61 Following

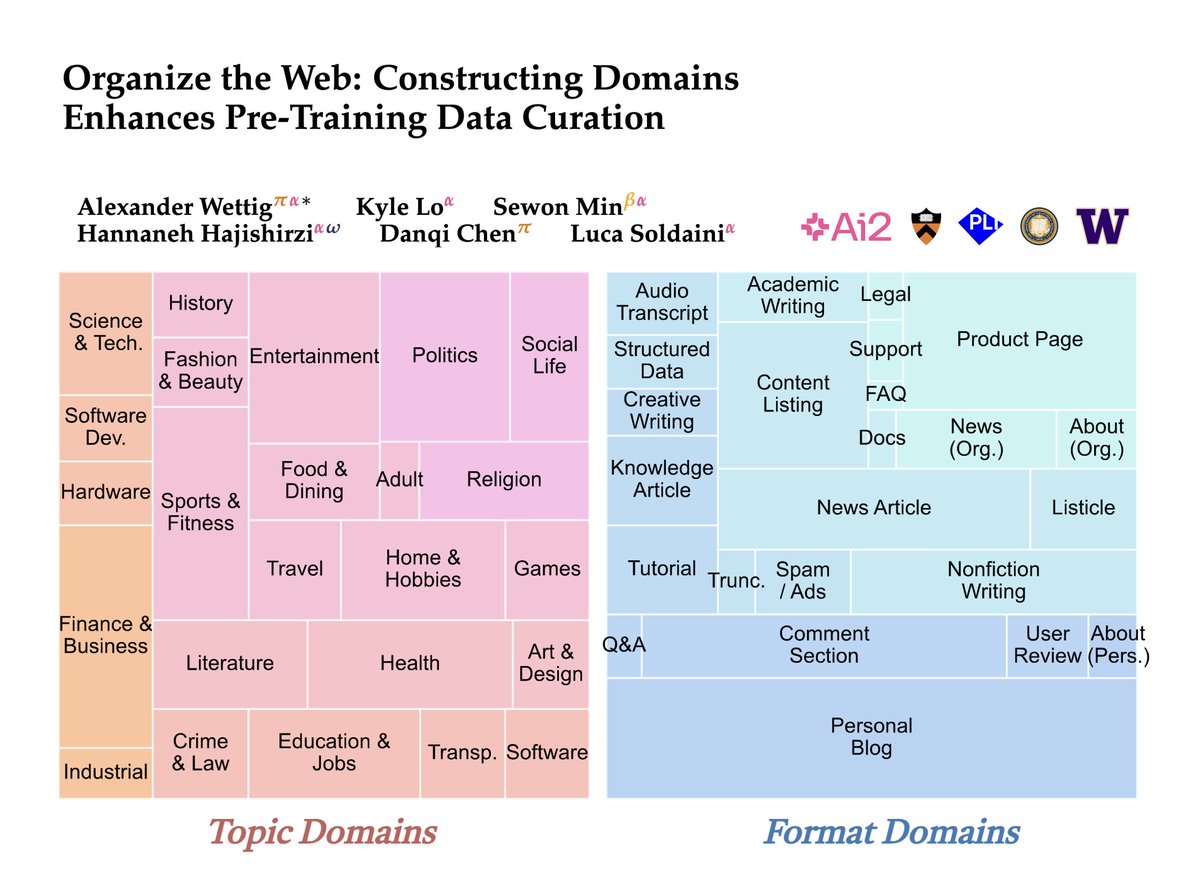

Our warmest congratulations to Danqi Chen, Stanford NLP Group grad and now Associate Professor at Princeton Computer Science and Associate Director of Princeton PLI, on her stunning ICLR 2025 keynote!