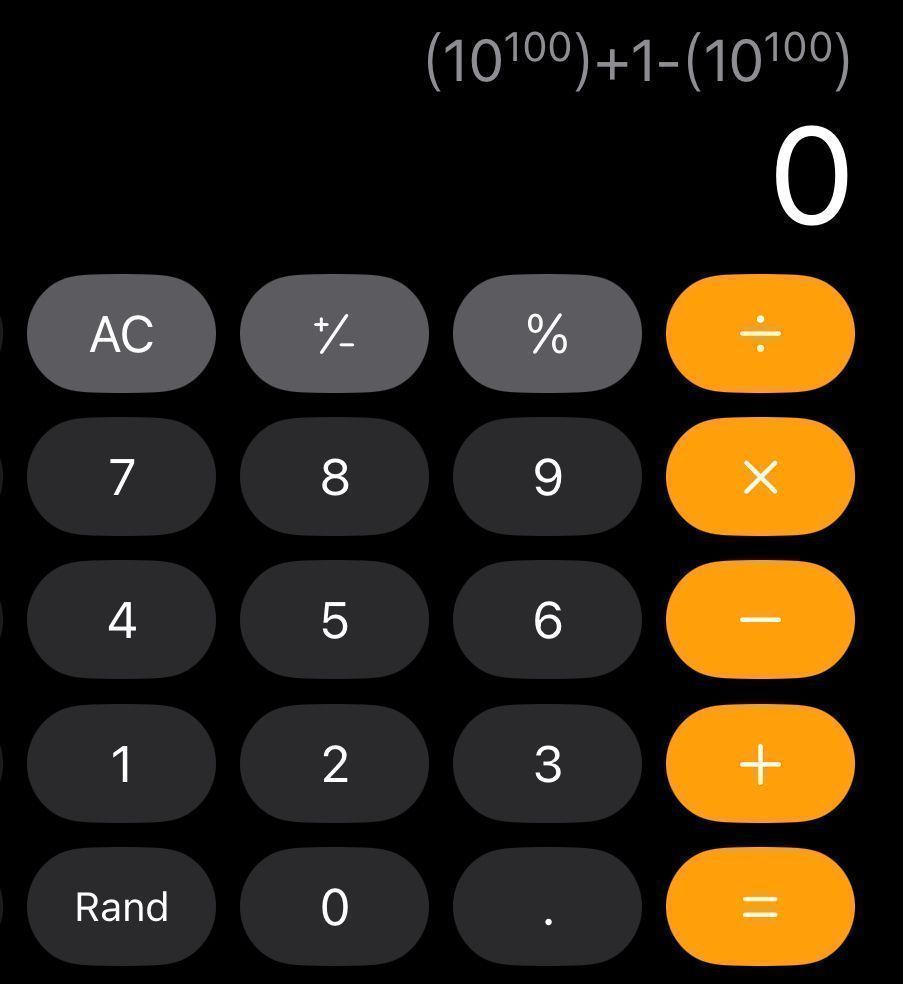

Andy J Yang

@pentagonalize

ID: 1262574885357772801

19-05-2020 02:45:18

170 Tweets

121 Followers

917 Following

📜New preprint w/ Noah A. Smith and Yanai Elazar that evaluates the novelty of LM-generated text using our n-gram search tool Rusty-DAWG 🐶 Code: github.com/viking-sudo-rm… Paper: arxiv.org/abs/2406.13069

Joint work with Andy J Yang, Dana Angluin, Peter Cholak, and Anand Pillay

Congratulations to ctaguchi for winning a Lucy Family Institute for Data & Society Societal Impact Award for his work on creating language technologies for the Kichwa language community in Ecuador! Notre Dame CSE youtube.com/watch?v=HjsNOE…

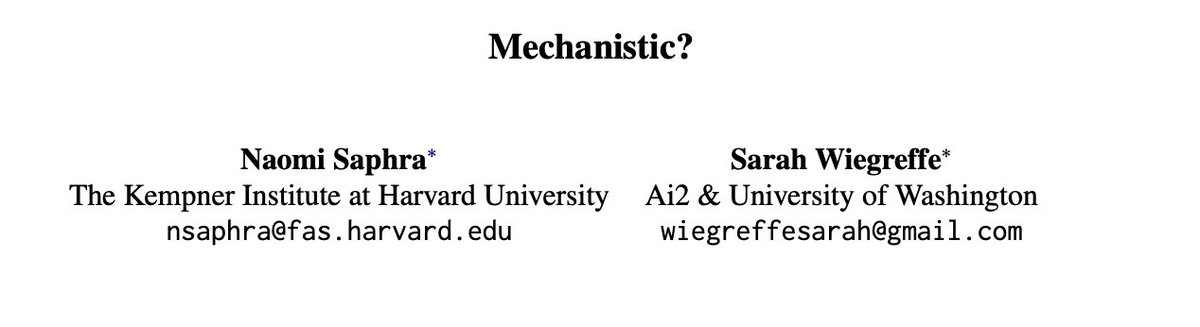

What makes some LM interpretability research “mechanistic”? In our new position paper in BlackboxNLP, Sarah Wiegreffe and I argue that the practical distinction was never technical, but a historical artifact that we should be—and are—moving past to bridge communities.

First-time NeurIPS attendee here! Super excited to talk about our paper with Yana Veitsman, Michael Hahn and to discover the amazing work by everyone else :D neurips.cc/virtual/2024/p…

Excited to head to NeurIPS Conference today! I'll be presenting our work on the representational capabilities of Transformers and RNNs/SSMs. If you're interested in meeting up to discuss research or chat, feel free to reach out via DM or email!

Drop by Andy J Yang's poster tomorrow on the relationship between transformers and first-order logic! neurips.cc/virtual/2024/p… Wed 4:30-7:30 East Exhibit Hall A-C #2310

BPE is a greedy method to find a tokeniser which maximises compression! Why don't we try to find properly optimal tokenisers instead? Well, it seems this is a very difficult—in fact, NP-complete—problem!🤯 New paper + P. Whittington, Gregor Bachmann :) arxiv.org/abs/2412.15210