Matthias Hagen

@matthias_hagen

Professor of "Databases and Information Systems", Friedrich-Schiller-Universität Jena

ID: 217377296

https://www.matthias-hagen.de 19-11-2010 10:54:09

457 Tweets

908 Followers

927 Following

Jan Heinrich Merker presented our short paper at #ArgMining2024 at #ACL2024 today. We proposed to add "semantics" to lexical retrieval models for argument retrieval. w/ Matthias Hagen, Maik Fröbe, Danik Hollatz. Paper: aclanthology.org/2024.argmining…

Great presentation about RAG in the biomedical domain, Jan Heinrich Merker #CLEF2024 #BIOASQ2024

alexander bondarenko Thanks! You can find our paper and code online: webis.de/publications.h… github.com/webis-de/clef2… #CLEF2024

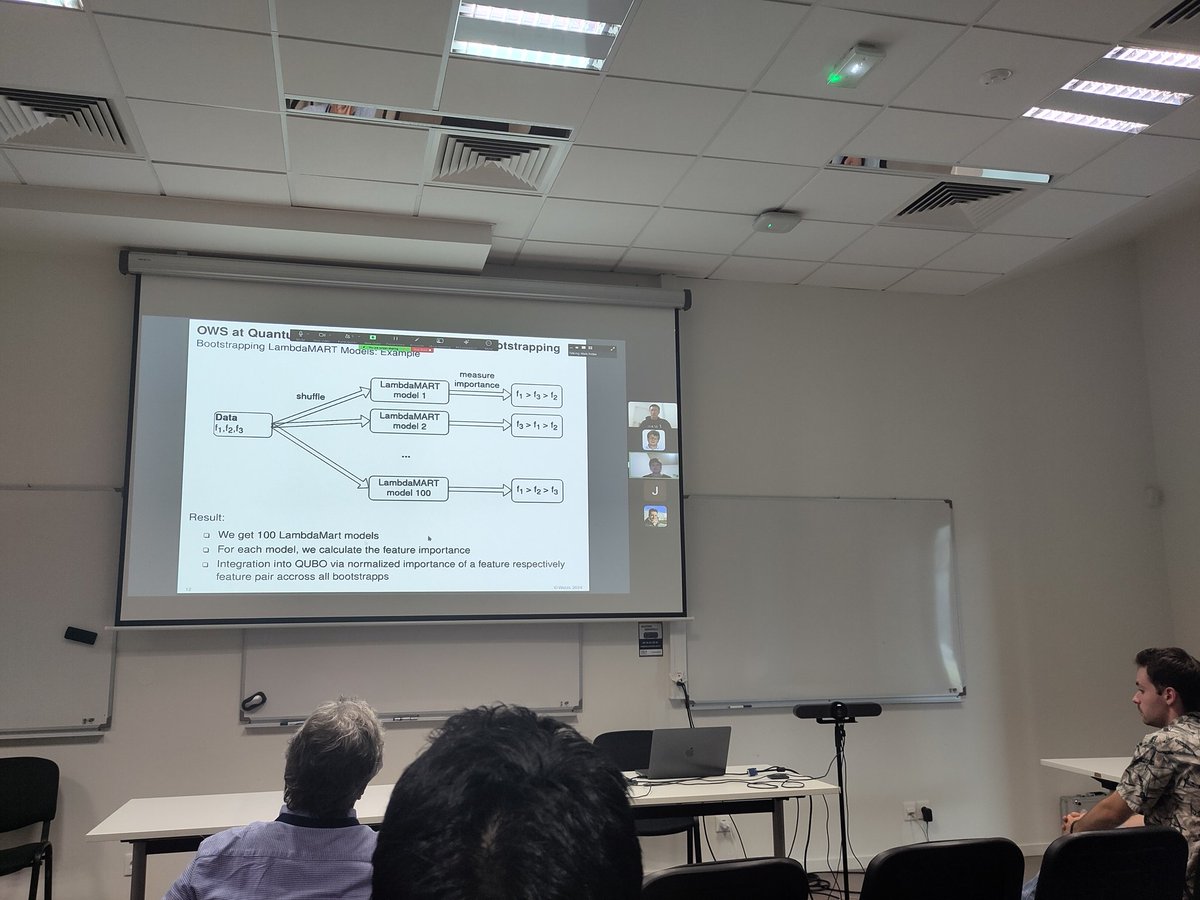

Maik Fröbe Great talk on Team OpenWebSearch.eu's submission to the QuantumCLEF shared task on how to exploit #QuantumComputing for feature selection in IR 👍 Exciting new direction of IR research at #CLEF2024 #QuantumCLEF2024

Next up at #ECIR2025, Maik Fröbe presenting his fantastic work on corpus subsampling and how to evaluate retrieval systems more efficiently

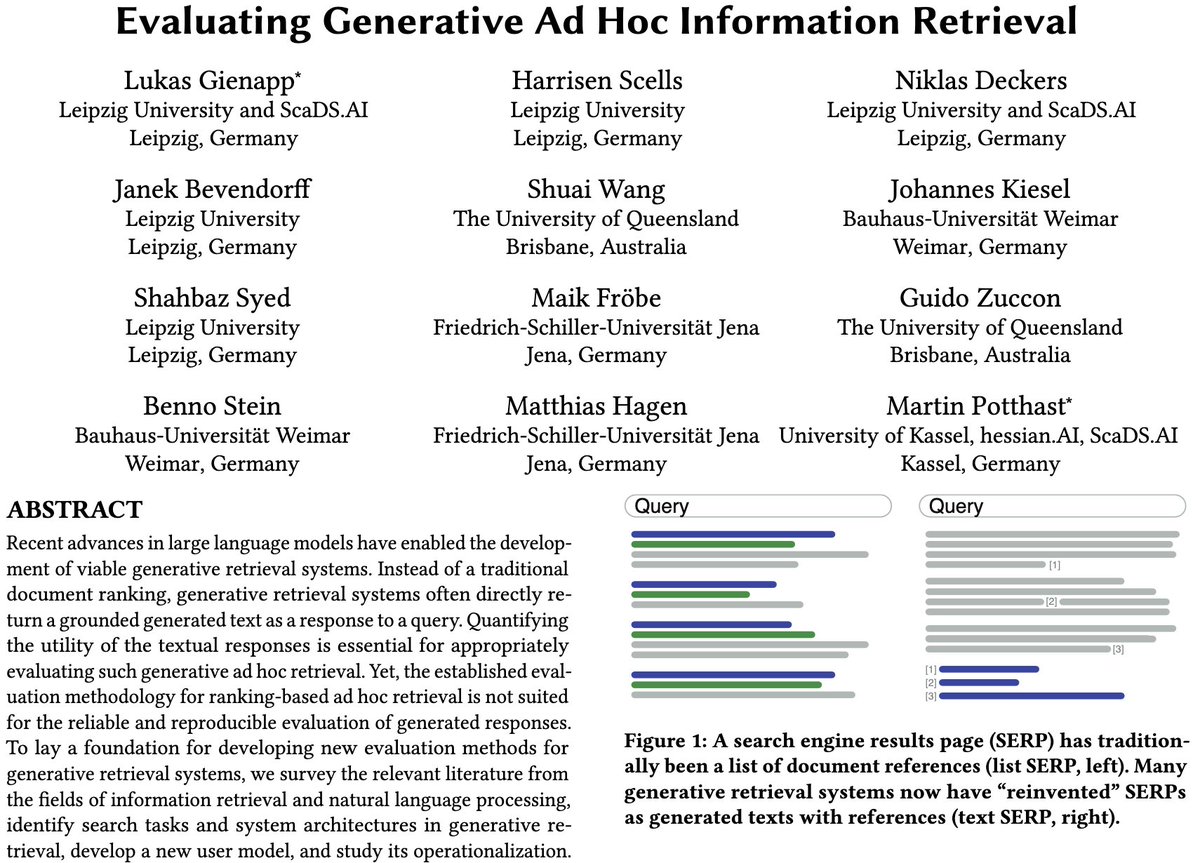

🧵 4/4 Credit and thanks to the author team Lukas Gienapp, Tim Hagen, Maik Fröbe, Matthias Hagen, Benno Stein, Martin Potthast, and Harry Scells – you can also catch some of them at #ECIR2025 currently if you want to chat about RAG!

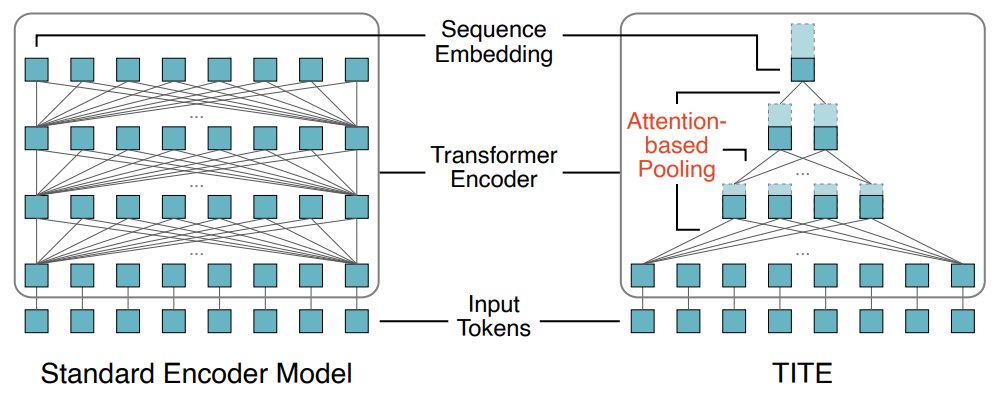

What an honor to receive both the best short paper award and the best paper honourable mention award at #ECIR2025. Thank you to all the co-authors: Maik Fröbe, Harry Scells, Shengyao Zhuang, Bevan Koopman, Guido Zuccon, Benno Stein, Martin Potthast, Matthias Hagen 🥳

Maik Fröbe, Harry Scells, Shengyao Zhuang, Bevan Koopman, Guido Zuccon, Benno Stein, Martin Potthast, Matthias Hagen. Short: Rank-DistiLLM: Closing the Effectiveness Gap Between Cross-Encoders and LLMs for Passage Re-ranking webis.de/publications.h… Full: Set-Encoder: Permutation-Invariant Inter-Passage Attention for Listwise Passage Re-Ranking with Cross-Encoders webis.de/publications.h…