Kaiwen Zhou

@kaiwenzhou9

A CSE PhD student at @ucsc, working on multimodal AI agents and responsible AI. Previously: @Samsung_RA, @hri_usa. Looking for a summer 2025 internship.

ID: 1506422274307416069

23-03-2022 00:07:29

80 Tweets

184 Followers

197 Following

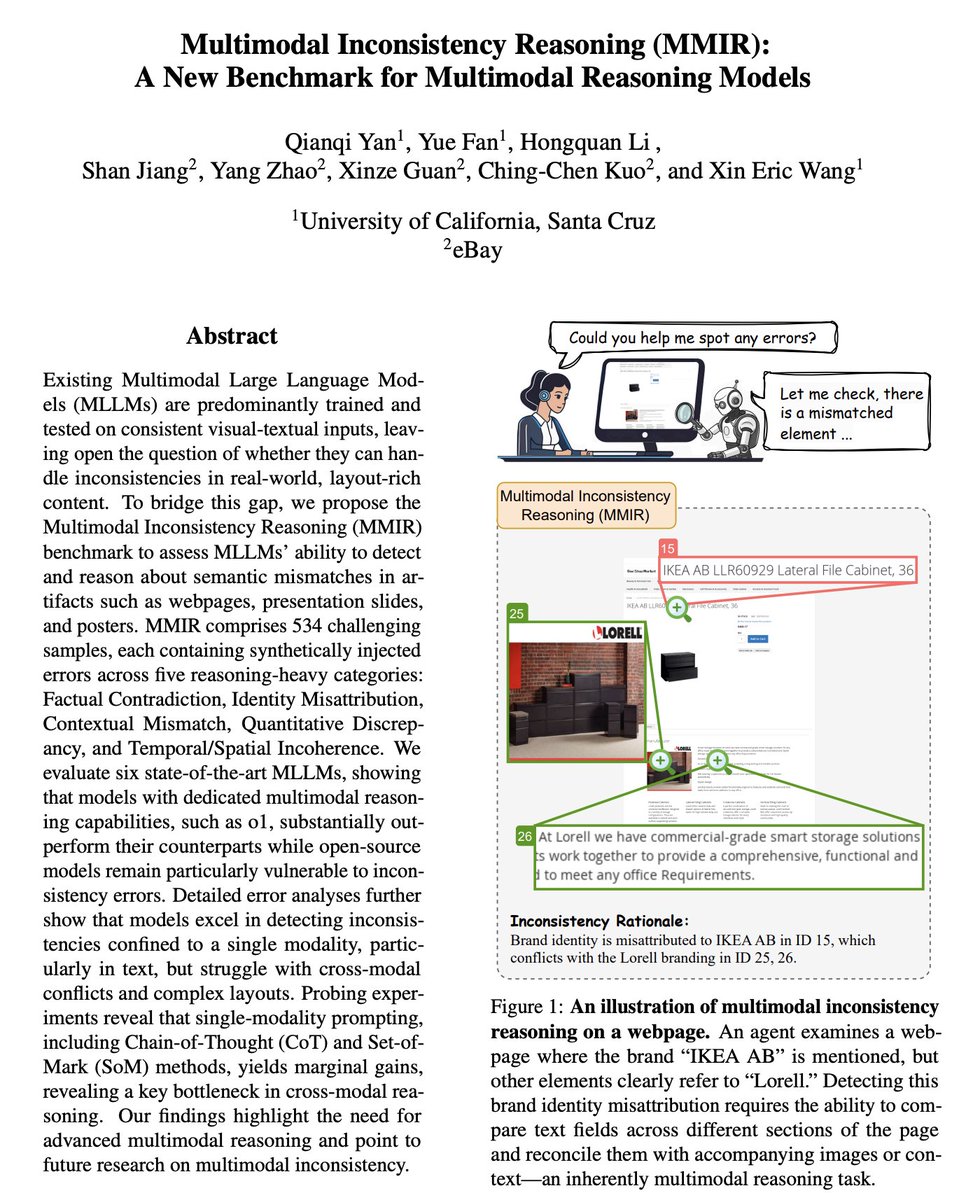

Could not attend #ICLR2025 🥲. But Chengzhi Liu will present our Multimodal Situational Safety paper on April 25, 3:00-5:30 pm in Hall 3 + Hall 2B #538. Welcome to check it out!