Kwanghee Choi

@juice500ml

Master's student @LTIatCMU, working on speech AI at @shinjiw_at_cmu's @WavLab

ID: 1690873458324799488

13-08-2023 23:50:35

71 Tweets

170 Followers

145 Following

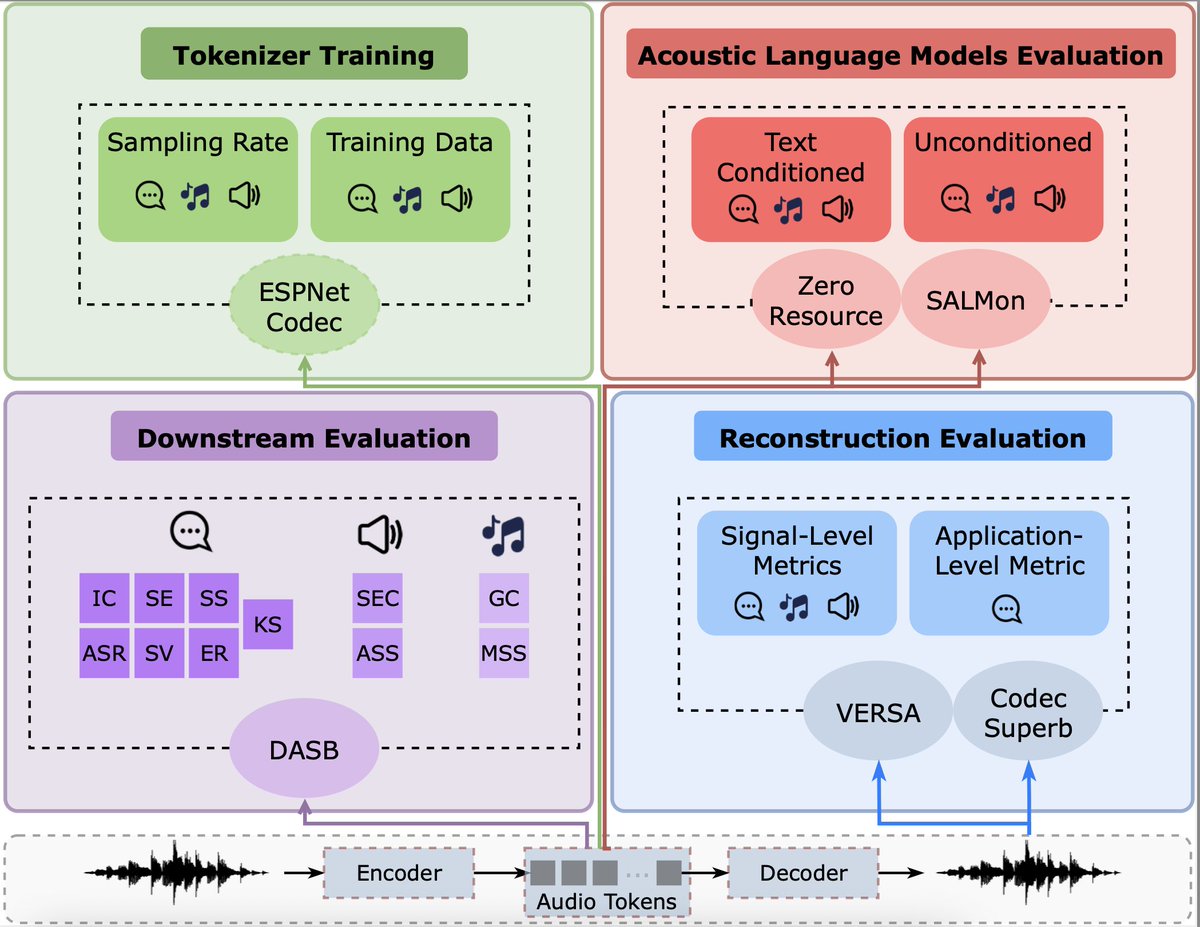

I will be presenting 3 papers from WAVLab | @CarnegieMellon at #Interspeech2025 🇳🇱 One is OWSMv4 (led by Yifan Peng), nominated for best student paper isca-archive.org/interspeech_20… It focuses heavily on data cleaning, particularly for non-English languages. It will be an oral on Tues 15:10 at Dock 10B.

We got the #Interspeech2025 Best Student Paper award! Congrats to Yifan Peng, Muhammad Shakeel, Yui Sudo, Jinchuan Tian (田晋川), and Chyi-Jiunn Lin!