Haoran Xu

@fe1ixxu

PhD student in CS @jhuclsp | Intern @Microsoft Research | ex-intern @Meta AI and @Amazon Alexa AI

ID: 899148379279818752

http://www.fe1ixxu.com 20-08-2017 05:57:33

64 Tweets

372 Followers

169 Following

How can you generate translations within a target domain using only monolingual data? Check out Weiting (Steven) Tan's new paper!

Opening up a new generation of machine translation leveraging the power of LLMs! It's now in #ICLR2024 (w/ Haoran Xu, Amr Sharaf, Hany Awadalla). Teaser: another breakthrough is coming, soonish...

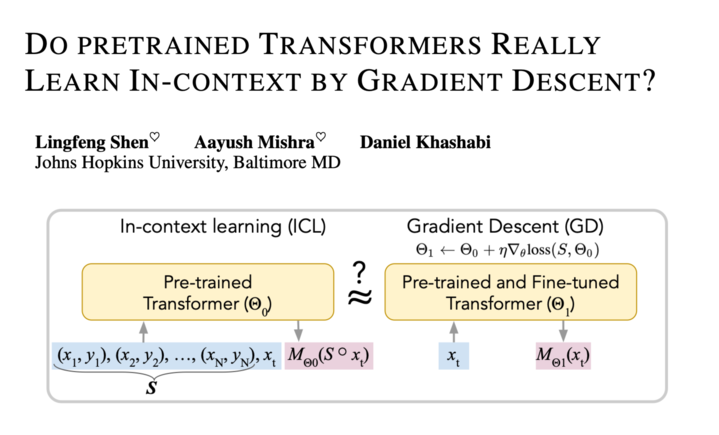

Super excited that our work got picked for an #Oral presentation at #ICML this year! Had an awesome time collaborating with Aayush Mishra and Daniel Khashabi 🕊️ at JHU CLSP. Pity I can't make it to Vienna because of visa issues😅