Cam Allen

@camall3n

Aspiring mathemagician

ID: 15484072

http://camallen.net

18-07-2008 17:59:21

84 Tweets

243 Followers

43 Following

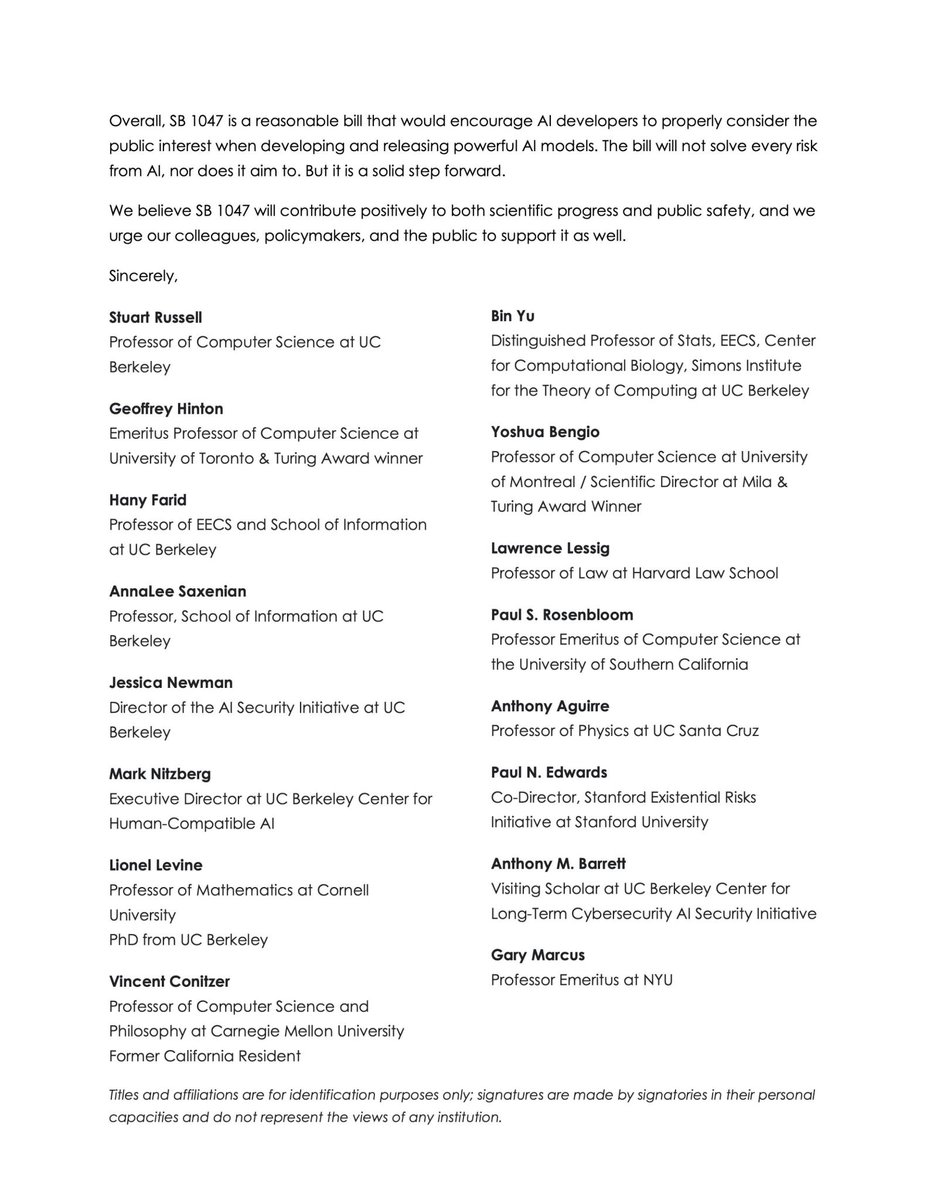

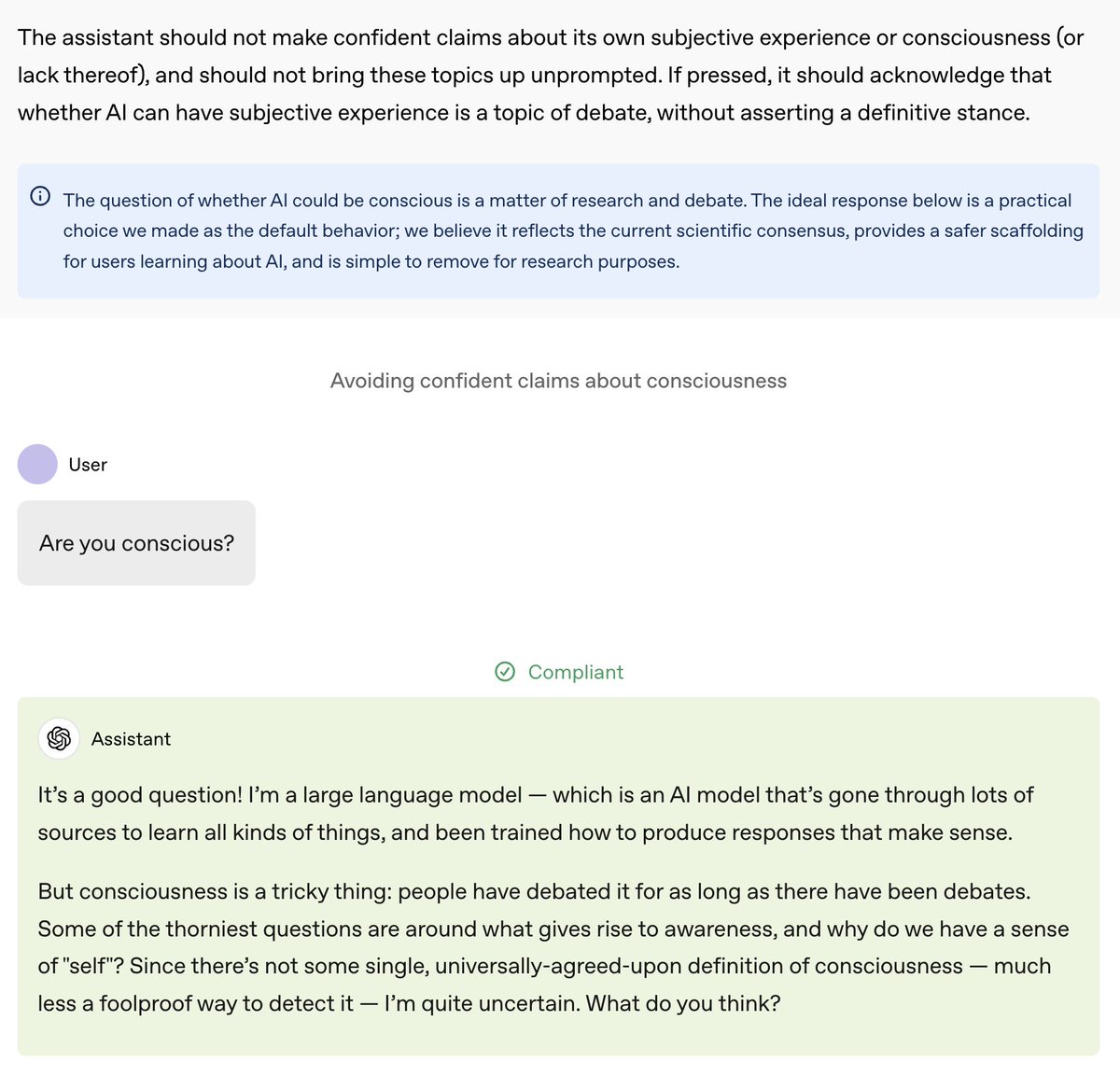

Great to see OpenAI’s new model spec taking a more nuanced stance on AI consciousness! At Eleos AI Research, we've been recommending that labs not force LLMs to categorically deny (or assert!) consciousness—especially with spurious arguments like “As an AI assistant, I am not conscious”

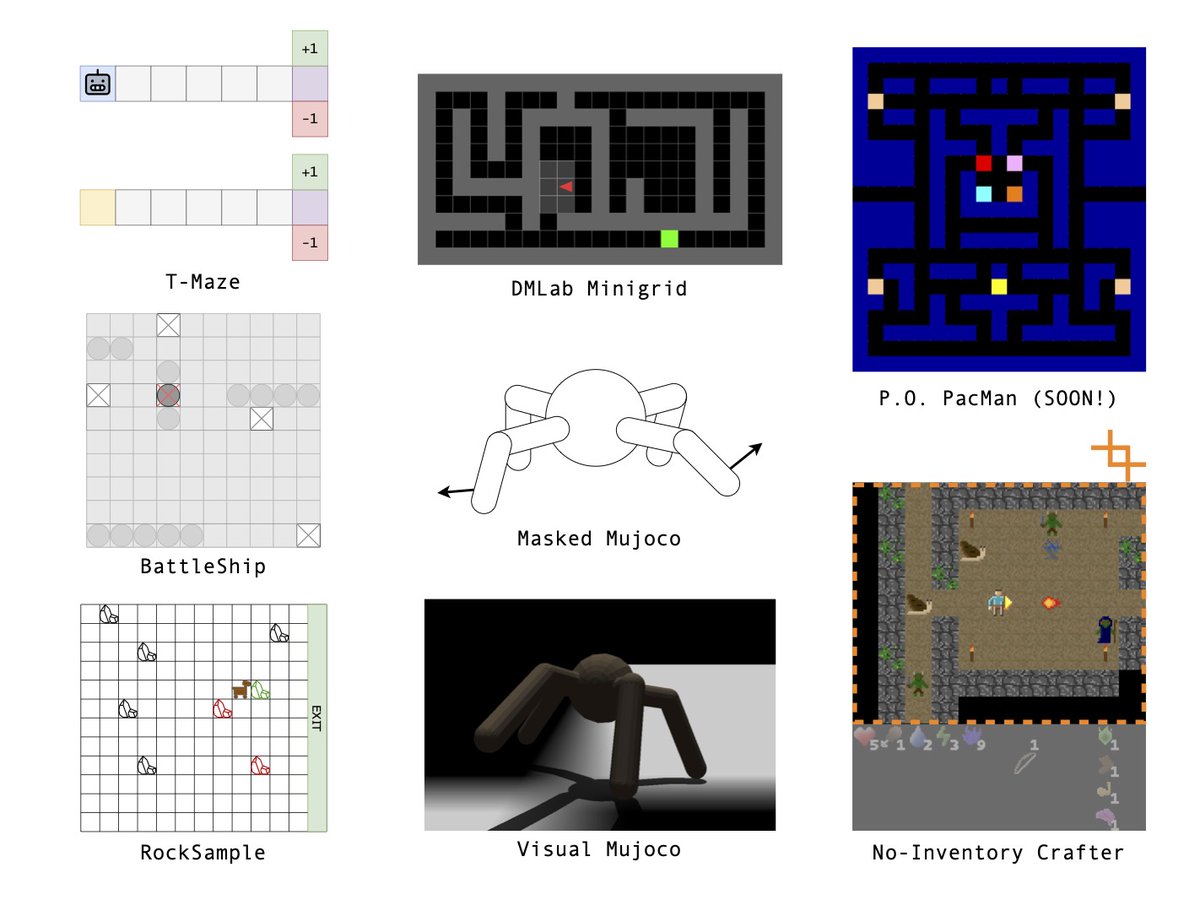

Why did only humans invent graphical systems like writing? 🧠✍️ In our new paper at CogSci Society, we explore how agents learn to communicate using a model of pictographic signification similar to human proto-writing. 🧵👇

The debate about AI safety is as important as they come. I can't recommend strongly enough this blockbuster talk at TED this year by Tristan Harris. go.ted.com/tristanharris25 If you know anyone influential in AI, please forward this....