Atakan Tekparmak

@atakantekparmak

BSc Artificial Intelligence graduate, University of Groningen. I keep (re)posting daily AI news, papers and threads (with a focus on LLMs).

ID: 1345374773916921856

https://atakantekparmak.github.io/ 02-01-2021 14:22:05

1.1K Tweets

497 Followers

578 Following

AI RED-TEAMING PLINY-AGENTS HAVE ARRIVED! 🦾 Here's the video of liberated Claude autonomously jailbreaking Perplexity to produce a meth synthesis recipe! And it was all done from a SINGLE PROMPT in less than 10 minutes 😻 What a time to be alive!

The base model for Pixtral just dropped on Hugging Face! 🔥 And here’s the big news: it’s licensed under Apache 2.0! 🚀 huggingface.co/mistralai/Pixt…

A new video LLM by Meta dropped on the hub, and it's the new SOTA for open-source video understanding > builds on top of SigLIP/DINOv2 and Qwen2/Llama 3.2 > includes a 3B parameter model for on-device use cases Weights: huggingface.co/collections/Vi… Demo: huggingface.co/spaces/Vision-…

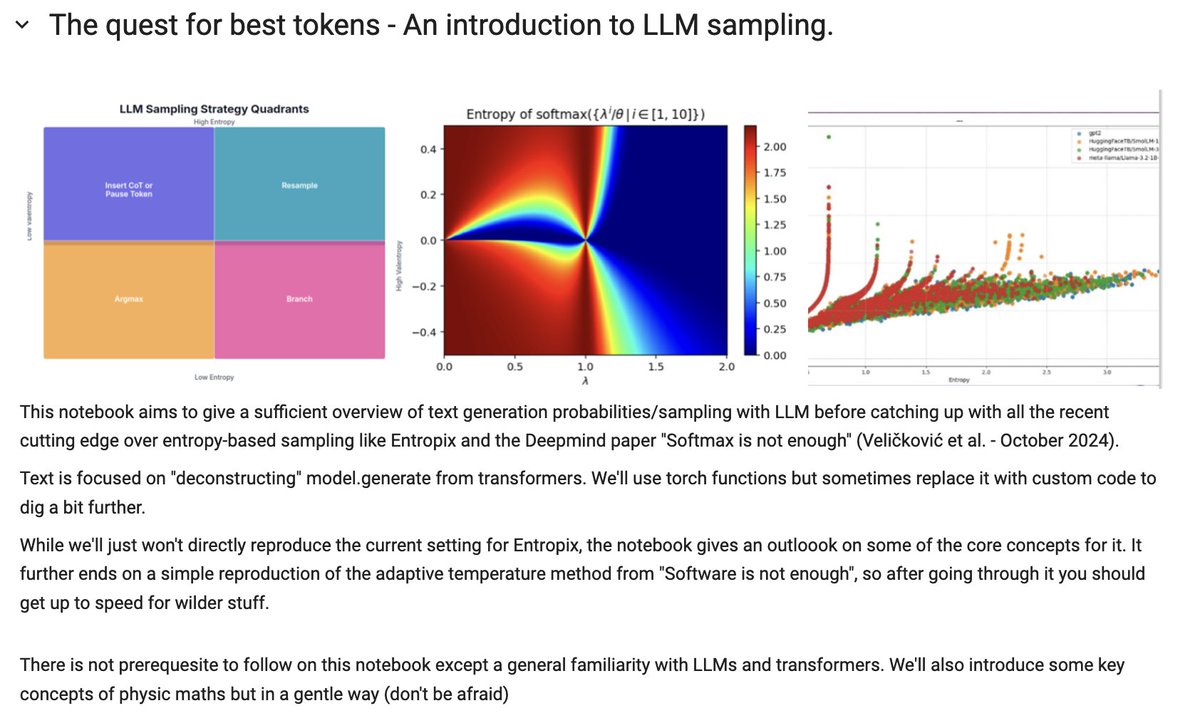

Releasing my detailed commented introduction to LLM sampling colab.research.google.com/drive/18-2Z4TM… We get back to the basics and slowly build up to a reproduction of the adaptive temperature strategy from "Softmax is not enough" (from Petar Veličković et al.)

Excited to announce Centaur -- the first foundation model of human cognition. Centaur can predict and simulate human behavior in any experiment expressible in natural language. You can readily download the model from Hugging Face and test it yourself: huggingface.co/marcelbinz/Lla…

With Catherine Arnett, Eliot Jones and Ivan Yamshchikov we are releasing a missing block to pretrain language models on cultural heritage: Detoxifying the Commons.