Ajitesh Shukla

@ajitesh_shukla7

Student. Love to solve the hardest math problems. LLMs, Mathematical Research (Geometric Topology, Differential Geometry), Quantum Computing. Lord Krishna is God of Math

ID: 986296840478953472

17-04-2018 17:34:26

46.46K Tweets

1.1K Followers

5.5K Following

Sam Power Zishun Liu Yongxin Chen I looked at the definition of the averaged moment generating function, and it reminds me of the method of mixtures, which I am a big fan of and which goes back to de la Peña et al. See e.g. sites.ualberta.ca/~szepesva/pape… and the references there. What you did looks cool, though, and slightly different.
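The method of mixtures can be made concrete in the textbook sub-Gaussian case. This is a minimal sketch, assuming increments for which exp(λS_t − λ²V_t/2) is a nonnegative supermartingale for every fixed λ, mixed over λ with a Gaussian density N(0, 1/ρ); the prior precision ρ is a hypothetical tuning parameter. It is the classical Gaussian-mixture construction, not necessarily the averaged MGF from the thread. Integrating out λ gives the closed form sqrt(ρ/(V_t+ρ))·exp(S_t²/(2(V_t+ρ))). The code checks this against numerical quadrature, then inverts it with Ville's inequality into a radius that bounds |S_t| for all t simultaneously with probability at least 1 − δ.

```python
import math


def mixture_closed_form(s: float, v: float, rho: float) -> float:
    """Integral of exp(lam*s - lam^2*v/2) against the N(0, 1/rho) density over lam."""
    return math.sqrt(rho / (v + rho)) * math.exp(s * s / (2.0 * (v + rho)))


def mixture_numeric(s: float, v: float, rho: float,
                    half_width: float = 20.0, steps: int = 20_000) -> float:
    """The same integral, approximated with the trapezoidal rule on [-half_width, half_width]."""
    h = 2.0 * half_width / steps
    total = 0.0
    for i in range(steps + 1):
        lam = -half_width + i * h
        # Gaussian mixing density with variance 1/rho
        density = math.sqrt(rho / (2.0 * math.pi)) * math.exp(-rho * lam * lam / 2.0)
        # Exponential supermartingale for a fixed lam
        integrand = math.exp(lam * s - lam * lam * v / 2.0) * density
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * integrand
    return total * h


def mixture_radius(v: float, rho: float, delta: float) -> float:
    """Value of |s| at which the mixture reaches 1/delta (the Ville threshold)."""
    return math.sqrt(2.0 * (v + rho) * (math.log(1.0 / delta)
                                        + 0.5 * math.log((v + rho) / rho)))


if __name__ == "__main__":
    print(mixture_closed_form(3.0, 2.0, 1.0), mixture_numeric(3.0, 2.0, 1.0))
    print(mixture_radius(2.0, 1.0, 0.05))
```

The extra ½·log((V_t+ρ)/ρ) term in the radius is the price paid for not fixing λ in advance; in exchange, the bound holds uniformly over time.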

Sam Power Zishun Liu Yongxin Chen Nice! Related and potentially of interest: arxiv.org/abs/2306.11404 If you express the sub-Gaussian proxy not uniformly but dimension-wise, in terms of a PSD operator, you can obtain a sharp bound that also holds for vectors in infinite-dimensional Hilbert spaces.
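A PSD-operator proxy can survive in infinite dimensions where a uniform one cannot, and comparing the two natural size scales shows why. For X ~ N(0, Σ) one has E‖X‖² = tr(Σ). A uniform proxy σ² must dominate every direction, so σ² ≥ λ_max(Σ), and the matching scale is d·σ². This is a minimal sketch with a hypothetical spectrum λ_k = 1/k². The trace stays below π²/6 as d grows, while d·λ_max grows linearly. It only illustrates the scaling and is not the bound from the linked paper.

```python
import math


def spectrum(d: int) -> list[float]:
    """Hypothetical eigenvalues of the PSD proxy: lambda_k = 1/k^2, k = 1..d."""
    return [1.0 / (k * k) for k in range(1, d + 1)]


def uniform_proxy_scale(eigs: list[float]) -> float:
    """A scalar proxy must cover the largest eigenvalue in every one of the d directions."""
    return len(eigs) * max(eigs)


def operator_proxy_scale(eigs: list[float]) -> float:
    """With an operator proxy, the natural scale is the trace (sum of eigenvalues)."""
    return math.fsum(eigs)


if __name__ == "__main__":
    for d in (10, 1_000, 100_000):
        eigs = spectrum(d)
        print(d, uniform_proxy_scale(eigs), operator_proxy_scale(eigs))
```

The uniform scale diverges with d, while the trace converges. This is why a bound stated in terms of the operator can remain finite for vectors in an infinite-dimensional Hilbert space whenever the proxy is trace-class.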

JingyuanLiu jax-ml.github.io/scaling-book/ This book explains it well, especially chapters 2, 5, and 12.

"The Science and Practice of Trend-following Systems" is a great one, especially since we are currently working on updating our TF system. It's 44 pages, so it's probably better to run through it using Benjamin AI papers.ssrn.com/sol3/papers.cf…

Seunghyun Seo Daniel Vega-Myhre JingyuanLiu I don't quite think about it the way you do. A couple of notes: 1. I wouldn't say FSDP doesn't reduce activation memory. When doing these comparisons, it makes sense to keep the global batch size (gbsz) fixed, and in that case swapping TP for DP lowers the batch size per GPU, which lowers activation memory. 2. All gather
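The per-GPU batch argument in point 1 comes down to simple arithmetic. This is a minimal sketch, assuming a pure TP × DP layout with no pipeline parallelism or gradient accumulation, in which every rank in a TP group processes the same samples. It deliberately ignores the share of activations that TP itself shards, so it shows only the batch-size effect, not total activation memory. The function name is hypothetical.

```python
def samples_per_gpu(global_batch: int, num_gpus: int, tp: int) -> int:
    """Samples each GPU processes per step for a fixed global batch size.

    Assumes num_gpus = tp * dp and that all ranks in a TP group share a batch.
    """
    if num_gpus % tp != 0:
        raise ValueError("num_gpus must be divisible by the TP degree")
    dp = num_gpus // tp  # data-parallel (or FSDP) degree
    if global_batch % dp != 0:
        raise ValueError("global batch must be divisible by the DP degree")
    return global_batch // dp


if __name__ == "__main__":
    # Fixed gbsz = 64 on 8 GPUs: trading TP for DP shrinks the per-GPU batch.
    for tp in (8, 4, 2, 1):
        print(f"TP={tp} DP={8 // tp}: {samples_per_gpu(64, 8, tp)} samples/GPU")
```

With gbsz = 64 on 8 GPUs, TP=8 leaves every GPU holding activations for all 64 samples, while TP=1 (pure DP/FSDP) leaves each GPU with only 8.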

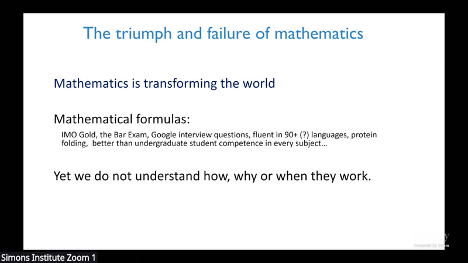

1/2 "Fundamentally, modern AI is just a mathematical object. Math is transforming the world...especially with respect to modern AI." Mikhail Belkin of UC San Diego at the Simons Institute on the triumph and failure of mathematics as it relates to AI. Video: simons.berkeley.edu/talks/mikhail-…