Arnas Uselis

@a_uselis

PhD Student @uni_tue

ID: 1617957542507778058

24-01-2023 18:48:40

43 Tweets

47 Followers

676 Following

On the rankability of visual embeddings Ankit Sonthalia Arnas Uselis Seong Joon Oh tl;dr: one can discover a "property ordering axis" (such as age) in visual descriptors, often from just a couple of extreme examples. arxiv.org/abs/2507.03683

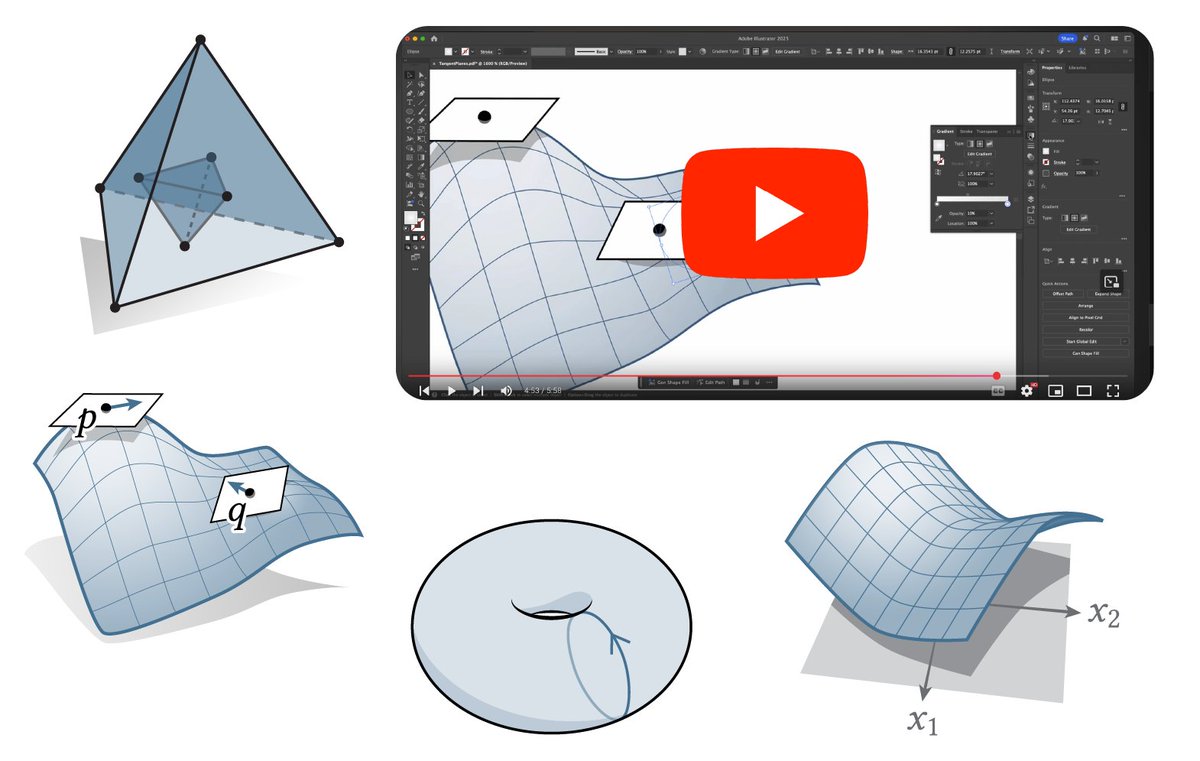

While making some figures for SGI* this year, I made some "behind the scenes" footage of how they get made: youtube.com/playlist?list=… Basically a video extension of cs.cmu.edu/~kmcrane/faq.h… ("figures?") *SGI is a great program run by Justin Solomon & deserves more funding

🧵0/7 🚨 Spotlight International Conference on Minority Languages 🚨 Chi-Ning and Hang have been thinking deeply about how feature learning reshapes neural manifolds, and what that tells us about generalization and inductive bias in brains and machines. They put together the thread below, which I’m sharing on

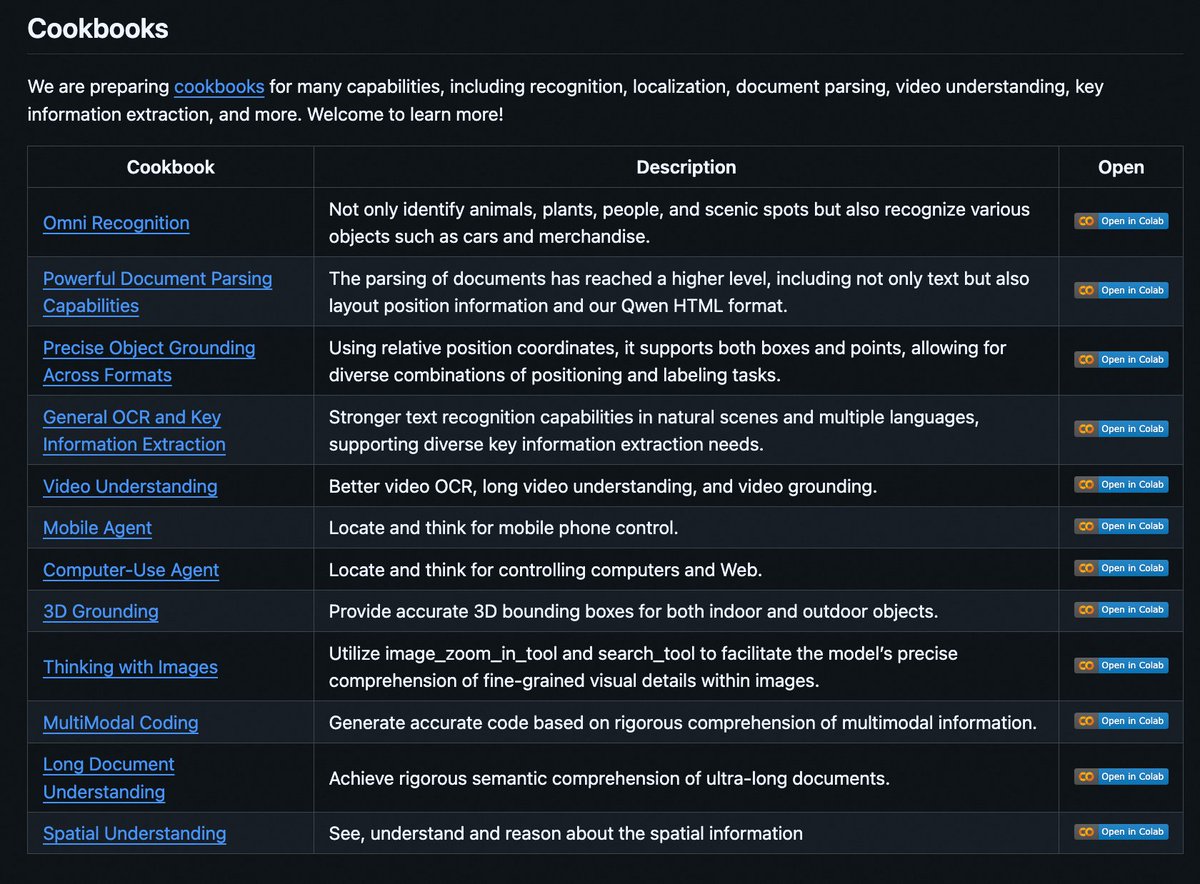

alrighty, publicly sharing my slide deck for multimodal AI, covering ⤵️ > trends & uses > cool open-source models > tools to customize/deploy multimodal models > further resources all models in this presentation are on Hugging Face, easy load with 2 LoC!

Children learn to manipulate the world by playing with toys — can robots do the same? 🧸🤖 We show that robots trained on 250 "toys" made of 4 shape primitives (🔵,🔶,🧱,💍) can generalize grasping to real objects. Jitendra MALIK trevordarrell Shankar Sastry Berkeley AI Research😊

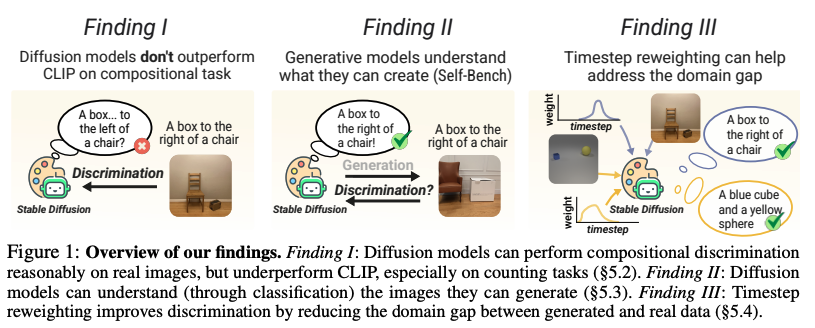

Diffusion models learn probability densities by estimating the score with a neural network trained to denoise. What kind of representation arises within these networks, and how does this relate to the learned density? Eero Simoncelli Stephane Mallat and I explored this question.