James | 🧠/acc

@_troglobyte

📐 at @nethermindEth - Commodifying privacy.

ID: 1099807743408525313

24-02-2019 23:06:14

1.1K Tweets

474 Followers

2.2K Following

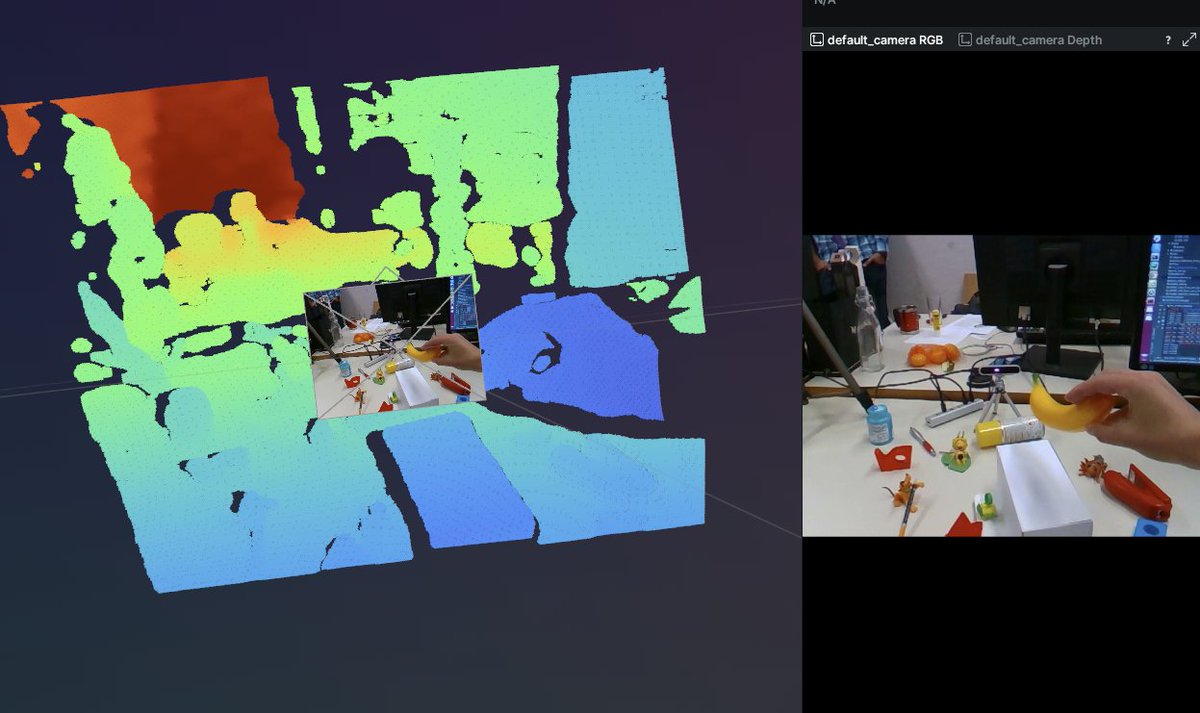

I really like this; I have a strong conviction that the industry is moving in this direction. Truly safe and capable robots will have to learn policy from thousands to millions of diverse human demonstrations. Great work, Shivam Vats @ CoRL2025!

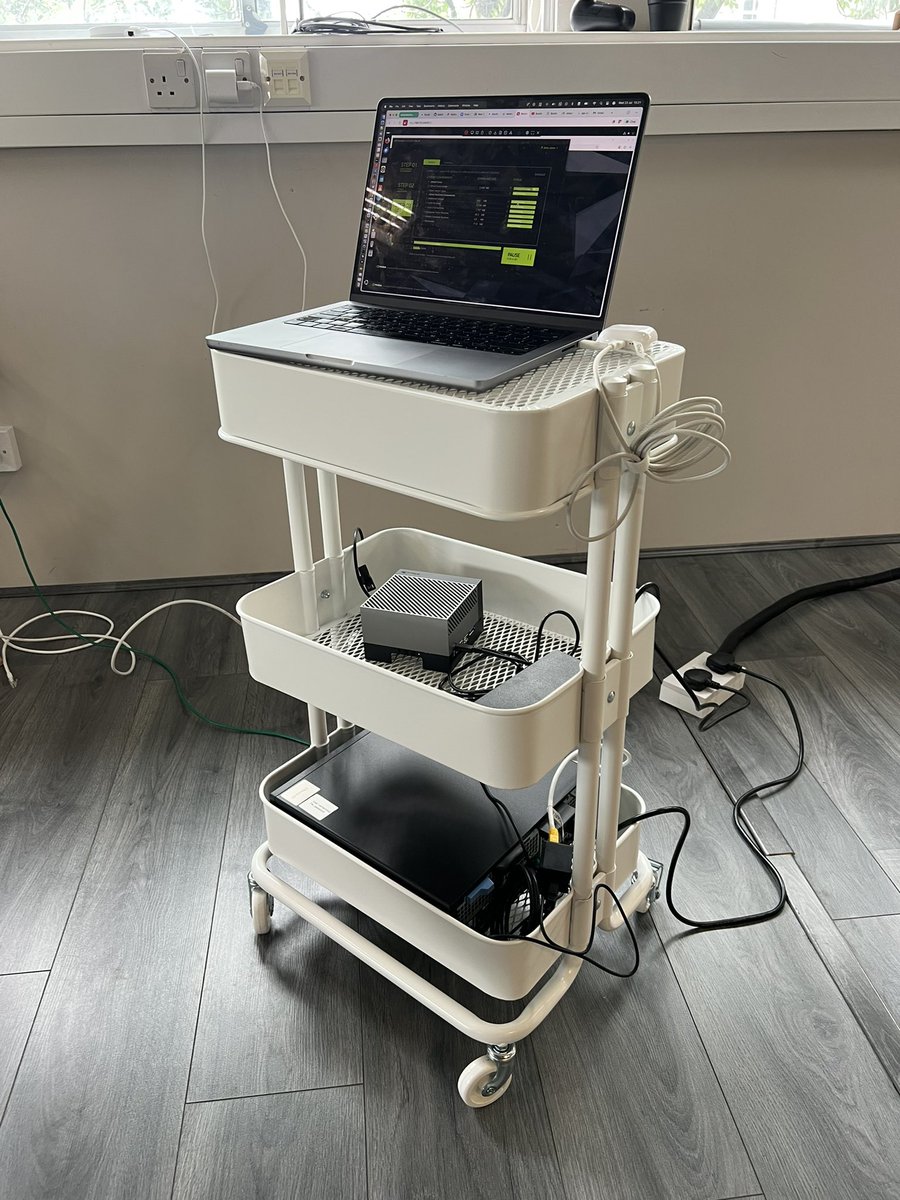

Fingers crossed this is true; looking forward to seeing what you've built, Bernt Bornich.