Saksham Suri

@_sakshams_

Research Scientist @AiatMeta. Previously PhD @UMDCS, @MetaAI, @AmazonScience, @USCViterbi, @IIITDelhi, @IBMResearch.

#computervision #deeplearning

ID: 2977040274

http://www.cs.umd.edu/~sakshams/ 12-01-2015 16:09:41

129 Tweets

760 Followers

638 Following

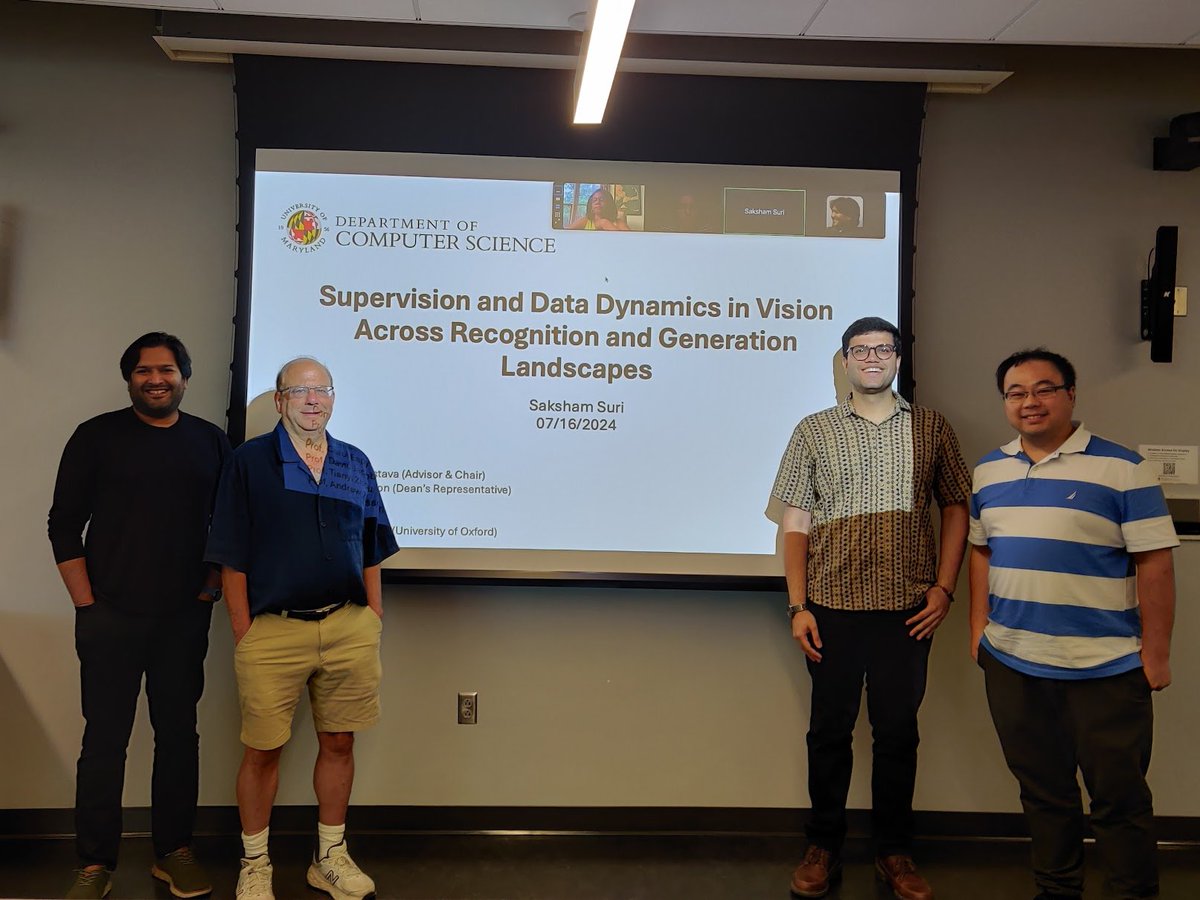

That's a wrap! Happy to share that I have defended my thesis. Thankful for the insightful questions and feedback from my committee members Abhinav Shrivastava, Tianyi Zhou, David Jacobs, Prof. Espy-Wilson, and Prof. Andrew Zisserman.

Excited to announce that I have joined AI at Meta as a Research Scientist, where I will be working on model optimization. I will also be at ECCV to present my work and am excited to meet and learn from everyone. Reach out if you are attending and would like to chat. Ciao 🇮🇹

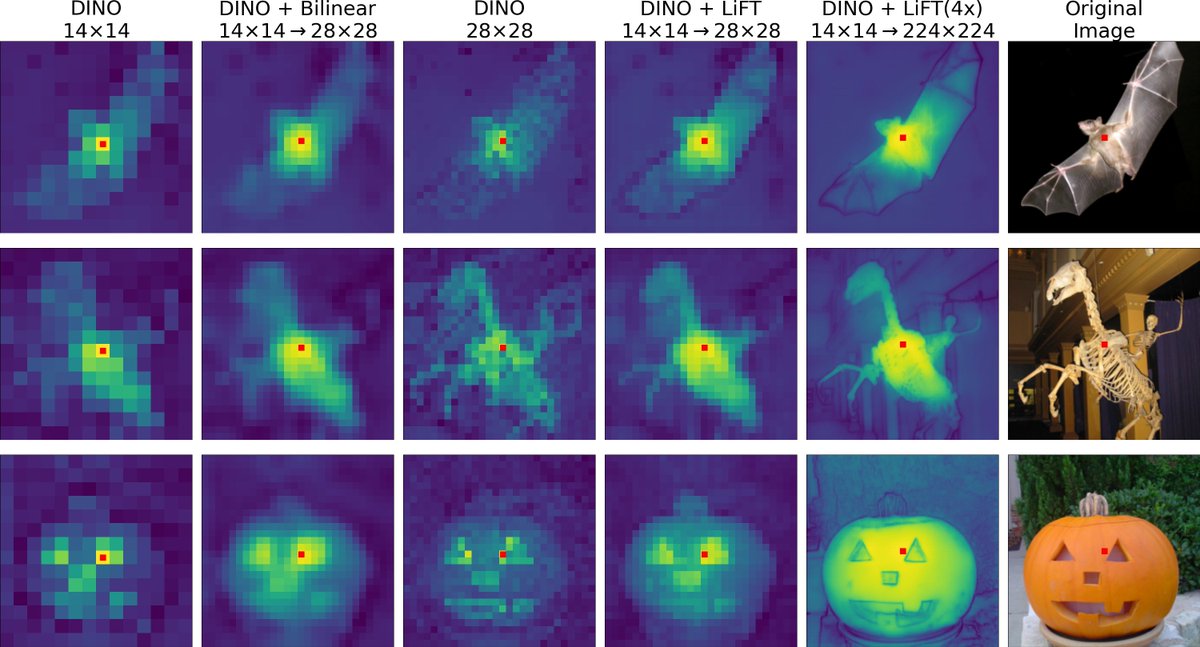

We are happy to release our LiFT code and pretrained models! 📢
Code: github.com/saksham-s/lift
Project Page: cs.umd.edu/~sakshams/LiFT
Here are some super spooky, super-resolved feature visualizations to make the season scarier 🎃
Coauthors: Matthew Walmer, Kamal Gupta, Abhinav Shrivastava